Introduction

Continuing our ongoing labs, we have demonstrated how to let ArduPilot make full use of the Intel Realsense T265, a new off-the-shelf VIO tracking camera that can provide accurate position feedback in GPS-denied environments, with and without the use of ROS.

This blog is a direct next-step from part 4. We will further enhance the performance of our non-ROS system by taking into account other factors of a robotic platform that are oftentimes ignored. Specifically, we will look at:

- Camera position offset compensation

- Scale calibration

- Compass north alignment (beta)

Let’s dive in.

1. Prerequisite

If you have just started with this series, check out part 1 for a detailed discussion on hardware requirements, then follow the installation process until librealsense is verified to be working.

In summary, basic requirements for this lab include:

- An onboard computer capable of polling pose data from the T265, i.e. one with a USB2 port. A Raspberry Pi 3 Model B has been proven to be sufficient for our labs.

- A working installation of librealsense and pyrealsense2.

- The newest version of t265_to_mavlink.py, verified to be working as established in part 4.

- This lab is about improving the performance of the system introduced in part 4, so it’s best if you have completed some handheld / flight tests to get a good before-and-after comparison throughout the process.

2. Camera Position Offset Compensation

What is the problem?

If the camera is positioned far away from the center of the vehicle (especially on large frames), the pose data from the tracking camera might not entirely reflect the actual movement of the vehicle. Most noticeably, when the frame does a pure rotation (no translation at all), the feedback becomes a combination of rotation and translation along a curve, which is reasonable since that’s how the camera actually moves. For small frames, on the other hand, this effect is negligible.

To fix that, we need to take a step back and see how all the coordinate systems are related:

What we actually want is the pose data of IMU body frame {B} (also called IMU frame or body frame). What we have from the tracking camera, after all of the transformations performed in part 4, is pose data of camera frame {C}. Up until now, we have treated the camera frame {C} and IMU body frame {B} as identical, and that’s where the problem lies.

General solution

Suppose the pose measurement provided from the tracking camera at any given time is Pc, and the camera - IMU relative transformation is H_BC (transforming {C} into {B}), then the desired measurement in the IMU body frame Pb is:

with H_BC being a homogeneous transformation matrix, complete with rotation and translation parts.

Solution in our case

Figuring out all of the elements of H_BC can be quite tricky and complicated. Thankfully, in our case, the rotation portion has been taken care of in part 4 of this series. That is to say, {C} and {B} are ensured to have their axes always point to the same directions, so no further rotation is required to align them. All we need to do now is to find out the camera position offsets d_x, d_y, d_z.

- Measuring camera position offsets: x, y and z distance offsets (in meters) from the IMU or the center of rotation/gravity of the vehicle, defined the same as in ArduPilot’s wiki page Sensor Position Offset Compensation:

The sensor’s position offsets are specified as 3 values (X, Y and Z) which are distances in meters from the IMU (which can be assumed to be in the middle of the flight controller board) or the vehicle’s center of gravity.

* X : distance forward of the IMU or center of gravity. Positive values are towards the front of the vehicle, negative values are towards the back.

* Y : distance to the right of the IMU or center of gravity. Positive values are towards the right side of the vehicle, negative values are towards the left.

* Z : distance below the IMU or center of gravity. Positive values are lower, negative values are higher.

- Modify the script: Within the script t265_to_mavlink.py:

  - Change the offset values body_offset_x, body_offset_y and body_offset_z accordingly.

  - Enable offset compensation: body_offset_enabled = 1.

- Testing: Next time you run the script, notice the difference in pure rotation movements. Other than that, the system should behave the same.

# Navigate to and run the script

cd /path/to/the/script

python3 t265_to_mavlink.py

Note: Similar to the note of sensor offset wiki: In most vehicles which have all their sensors (camera and IMU in this case) within 15cm of each other, it is unlikely that providing the offsets will provide a noticeable performance improvement.
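The offset compensation in this section can be sketched in a few lines. This is a minimal illustration, not a copy of the script: it assumes the correction has the form P_b = H_BC · P_c · H_CB, where H_CB is the inverse of H_BC, and that H_BC is a pure translation by the measured offsets (the rotation having been aligned in part 4). The helper names are hypothetical, and plain Python lists are used only to keep the example dependency-free.

```python
import math


def translation(dx, dy, dz):
    """Homogeneous transform with identity rotation and translation (dx, dy, dz)."""
    return [
        [1.0, 0.0, 0.0, dx],
        [0.0, 1.0, 0.0, dy],
        [0.0, 0.0, 1.0, dz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def yaw_pose(yaw_rad, x, y, z):
    """Homogeneous pose with a yaw rotation about Z and position (x, y, z)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def compensate_offset(pose_camera, dx, dy, dz):
    """Assumed form of the correction: P_b = H_BC * P_c * H_CB."""
    h_bc = translation(dx, dy, dz)
    h_cb = translation(-dx, -dy, -dz)  # inverse of a pure translation
    return matmul(h_bc, matmul(pose_camera, h_cb))


# Camera mounted 0.5 m ahead of the IMU; the vehicle yaws 90 degrees in place.
# The camera sweeps an arc, so the position it reports is not zero.
pose_c = yaw_pose(math.pi / 2, -0.5, 0.5, 0.0)
pose_b = compensate_offset(pose_c, 0.5, 0.0, 0.0)
body_position = [pose_b[i][3] for i in range(3)]
```

In this example the camera reports a displacement of (-0.5, 0.5, 0), while the compensated body position stays at the origin up to floating point error, which is the behavior to look for in a pure rotation test.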

3. Scale calibration

What is the problem?

At longer distances, the output scale is in some cases reported to be off by 20-30% of the actual scale. Here are some Github issues related to this problem:

- T265 Inaccurate Scale · Issue #4174 · IntelRealSense/librealsense · GitHub

- Issues with T265 Camera Reporting Position · Issue #4450 · IntelRealSense/librealsense · GitHub

Solution

We need to find a scale factor that can up/downscale the output position to the true value. To achieve that, we will go with the simple solution of measuring the actual displacement of the camera, then dividing it by the estimated displacement received from the tracking camera.

Once the scale factor is determined, position output from the camera will be re-scaled with this factor. Scale factor can then be saved in the script for subsequent runs.
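As a rough illustration of the arithmetic, the sketch below computes a scale factor from a measured distance and the distance reported by the camera, then applies it to a position. The function names and the sample numbers are made up for the example; they are not taken from the script.

```python
import math


def path_length(points):
    """Total length of a polyline given as a list of (x, y, z) points in meters."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def compute_scale_factor(actual_m, estimated_m):
    """Scale factor = actual displacement / displacement estimated by the camera."""
    if estimated_m <= 0:
        raise ValueError("estimated displacement must be positive")
    return actual_m / estimated_m


def apply_scale(position, scale):
    """Re-scale a position (x, y, z) with the given factor."""
    return tuple(scale * c for c in position)


# Hypothetical walk around a 2 m x 2 m square (8 m in total) that the camera
# reports as a 1.6 m x 1.6 m square.
reported = [(0, 0, 0), (1.6, 0, 0), (1.6, 1.6, 0), (0, 1.6, 0), (0, 0, 0)]
scale = compute_scale_factor(8.0, path_length(reported))
corrected_corner = apply_scale(reported[2], scale)
```

With these sample numbers the factor comes out at about 1.25, and the reported corner (1.6, 1.6, 0) is corrected to roughly (2, 2, 0).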

Procedure:

Note: In the steps below, I recommend doing the tests in the same environment and along the same trajectory (for example, walking a 2m x 2m square every time). If the environment or the frame configuration changes, it’s best to first restore the scale factor to its default value (1.0) and calibrate again if the scale is incorrect.

- Do at least a few tests to determine whether the scale changes arbitrarily or stays the same (however incorrect) between runs. This behavior will determine the action in the last step.

- Run the script with the scale calibration option enabled:

python3 t265_to_mavlink.py --scale_calib_enable true

- Perform handheld tests and calculate the new scale based on the actual displacement and the position feedback data. The actual displacement can also come from other sensors’ readings (a rangefinder, for example), provided they are accurate enough.

- Input new scale: By default, the scale factor is 1.0. The scale should be entered as a floating point number, e.g. 1.1 is a valid value. At any time, type the new scale into the terminal and press Enter. The new scale will take effect immediately. Note that the scale should only be modified when the vehicle is not moving, otherwise the local position (quadcopter icon on the map) might diverge.

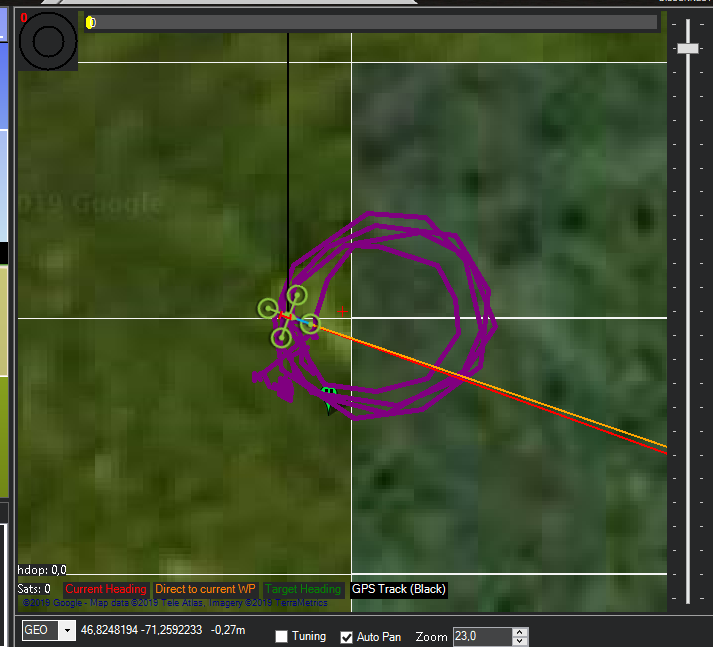

- Observe the changes on Mission Planner’s map. Below are some walking tests by @ppoirier in the same trajectory, with two different scale factors.

- Flight tests: Once you have obtained a good scale, flight tests can be carried out as normal.

- Save or discard the new scale value: If the original scale is incorrect but consistent between runs, modify the script and change the default scale_factor. If the scale changes arbitrarily between runs, you might have to perform this calibration again next time.
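When a value is typed into a terminal, it is worth rejecting anything that is not a usable number before it reaches the position output. The sketch below shows one way to do that. The function name parse_scale and its fallback behavior are assumptions for illustration and do not describe how the script handles input.

```python
import math


def parse_scale(text, current):
    """Return the scale typed by the user, or `current` if the input is unusable."""
    try:
        value = float(text.strip())
    except ValueError:
        return current  # not a number: keep the scale already in use
    if not math.isfinite(value) or value <= 0:
        return current  # nan, inf, zero and negative scales make no sense here
    return value


scale_factor = 1.0
for line in ["1.1\n", "abc\n", "-2\n", "nan\n"]:
    scale_factor = parse_scale(line, scale_factor)
```

Only the first line in this example changes the scale; the three invalid entries leave it at 1.1.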

4. Compass north alignment (BETA)

Note 1: Of the new features introduced in this post, compass north alignment is verified to work with internal compass, with and without optical flow. However, more evaluations are needed in various cases where multiple data sources are being used at the same time (multiple compasses, GPS, optical flow etc.). Any beta testers are thus much appreciated.

Note 2: In outdoor testing, optical flow can really help to improve stability, especially against wind.

What is the problem?

If you wish to have the heading of the vehicle aligned with the real world’s north, i.e. 0 degrees always means facing magnetic north, then this section is for you.

General solution

The main implementation idea comes from this post, adapted to our robotic application, workflow and other modules.

Solution in our case

- Assumptions:

  - The Realsense T265 and the compass are rigidly attached to the frame, meaning the translation and rotation offsets won’t change over time. Furthermore, the compass heading and the pose data (already transformed into the NED frame) are assumed to align with the vehicle’s forward direction.

  - Compass data is available through the MAVLink ATTITUDE message.

- Procedure:

  - Compass yaw data is updated in the background by listening to the MAVLink ATTITUDE message.

  - In our main loop, we capture the latest raw pose from the T265 and run the other submodules, then multiply the result by the latest compass transformation matrix, which only takes the rotation into account. The translation offsets do not contribute to our solution and are ignored.

  - The final pose, with rotation aligned to magnetic north, will be used.

- ArduPilot parameters:

# Enable compass and use at least one of them

COMPASS_ENABLE = 1

COMPASS_USE = 1

Optical flow can also be enabled. The use of other systems (multiple compasses, GPS etc.) requires more beta testing.

- Enable compass north alignment in the script:

# Navigate to the script

nano /path/to/t265_to_mavlink.py

# compass_enabled = 1 : Enable using yaw from compass to align north (zero degree is facing north)

# Run the script:

python3 /path/to/t265_to_mavlink.py

- Verify the new heading: Now the vehicle should point toward the current actual heading. Other than that, everything should work similarly.

(Same vehicle position and heading in the real world. Initialization without compass on the left, with compass on the right)

- Ground test, handheld test and flight test: This feature is still in beta since consistent performance across systems is still a question mark.

  - Ground test: Once the system starts running, leave the vehicle on the ground and check whether the final heading drifts. It should stay aligned with north even when vision position starts streaming. If it drifts, it’s a bad sign for flight tests. Check that the compass data is stable and not jumping around, then retry.

  - Handheld test: Move the vehicle in a specific pattern (square, circle etc.) and verify that the trajectory on the map follows it. For example, if you moved in a cross you should see a cross and not a cloverleaf.

  - Flight test: If all is well, you can go ahead with flight tests. Note that things can still go wrong at any time, so proceed with caution and always be ready to regain control if the vehicle starts to move erratically.

Here are some outdoor tests by @ppoirier, with all of the offsets, scale and compass settings that are introduced in this blog.
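The rotation-only alignment described in the procedure can be illustrated with a generic yaw rotation. This is a sketch of the idea, not the script’s implementation: it assumes the local frame starts with X pointing along the vehicle’s forward direction, that the compass heading captured at that moment is used as a fixed yaw offset, and that headings are measured clockwise from north as in NED. The function names are hypothetical.

```python
import math


def wrap_pi(angle):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def align_to_north(position, yaw, yaw_offset):
    """Rotate a local (x, y, z) position and yaw about Z by the compass offset.

    Only the rotation is applied; no translation offset is involved.
    """
    x, y, z = position
    c, s = math.cos(yaw_offset), math.sin(yaw_offset)
    north = c * x - s * y
    east = s * x + c * y
    return (north, east, z), wrap_pi(yaw + yaw_offset)


# The vehicle started facing east (compass heading 90 degrees) and moved
# 1 m straight ahead in its local frame.
aligned_position, aligned_yaw = align_to_north((1.0, 0.0, 0.0), 0.0, math.pi / 2)
```

In this example the 1 m of forward motion ends up as 1 m east and the reported yaw becomes 90 degrees, which matches what the map should show when the vehicle starts out facing east.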

[Development Update]

Voice and message notification for confidence level with Mission Planner

With the latest version of the script t265_to_mavlink.py, the confidence level will be displayed on Mission Planner’s message panel, HUD, as well as by speech.

- Notification will be sent only when the system starts and when the confidence level changes to a new state, from Medium to High, for example.

- If there are some messages constantly displayed on the HUD, you might not be able to see the confidence level notification.

- To enable speech of Mission Planner: Tab Config/Tuning > Planner > Speech > tick enable speech.
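The “notify only on change” behavior can be sketched as a small state tracker. The numeric levels follow the T265 tracker confidence values (0 = Failed, 1 = Low, 2 = Medium, 3 = High); the class name and the message wording are assumptions for illustration, and actually sending the text to the ground station is left out.

```python
CONFIDENCE_NAMES = {0: "Failed", 1: "Low", 2: "Medium", 3: "High"}


class ConfidenceNotifier:
    """Produce a message on the first reading and whenever the level changes."""

    def __init__(self):
        self._last_level = None  # nothing reported yet

    def update(self, level):
        """Return a message if `level` differs from the previous one, else None."""
        if level == self._last_level:
            return None
        self._last_level = level
        return "Tracking confidence: " + CONFIDENCE_NAMES.get(level, "Unknown")


notifier = ConfidenceNotifier()
messages = [notifier.update(level) for level in [2, 2, 3, 3, 2]]
```

For the sample sequence above, a message is produced for the first Medium reading, for the change to High, and for the change back to Medium; the repeated readings produce nothing.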

5. Open issues and further development

As a relatively new kind of device, it’s not surprising that there are multiple open questions regarding the performance and features of the Intel Realsense T265. A good source of new information and updates is the librealsense and realsense-ros repos. Interesting info can be found in some of these issues:

- Potential for a more versatile version intended for embedded platforms: T265 adding an auxiliary Serial & Power port for embedded systems. · Issue #4408 · IntelRealSense/librealsense · GitHub

- T265 vs GPS: GPS vs T265 position data project · Issue #4412 · IntelRealSense/librealsense · GitHub

- In-depth discussion about the inner working of tracking confidence and covariance: T265 publish covariance · Issue #770 · IntelRealSense/realsense-ros · GitHub

- Exposure control is now available through the Viewer app and code: T265 manual exposure by dorodnic · Pull Request #3992 · IntelRealSense/librealsense · GitHub

- Plan to use the device as a pure odometry sensor? That might be possible in a future version by disabling loop closure: Disable Relocalization/Loop Closure for T265 · Issue #779 · IntelRealSense/realsense-ros · GitHub

- It’s not possible to run multiple T265s at the same time (for now, at least): Multiple T265 cameras · Issue #706 · IntelRealSense/realsense-ros · GitHub

- The T265’s firmware is bundled with librealsense, which means updating the firmware = updating librealsense to the latest version: Latest T265 Firmware? · Issue #3746 · IntelRealSense/librealsense · GitHub

- Did you know there is an example to detect AprilTag pose using the T265’s fisheye streams? This provides a simple way to track a target without too much computing resources. Check it out: https://github.com/IntelRealSense/librealsense/tree/development/examples/pose-apriltag

All in all, check the repos regularly if you are developing applications using this device, or just want to learn and explore as much as possible.

6. Conclusion and next steps

In this blog, we have taken the next steps to improve and enhance the performance of our system, which incorporates a VIO tracking camera with ArduPilot using Python and without ROS. Specifically, we have added support for:

- camera position offsets compensation,

- scale calibration and correction, with a step-by-step guide

- a working (beta) method to align the heading of the system with real world’s north.

We have now completed the sequence of weekly labs for our ArduPilot GSoC 2019 project. But we still have even more exciting developments on the way, so stay tuned! Here are a few Github issues to give you a glimpse of what’s next: #11812, #10374 and #11671.

If you are interested in contributing, or just keen on discussing the new developments, feel free to join ArduPilot’s many Gitter channels: ArduPilot and VisionProjects.

Hope this helps. Happy flying!

It does track but occasionally burps on simple missions. I have so many questions, but lets start with a fun one. Does the rover firmware have the same T265 capabilities as the copter versions y’ll been using? I’m running the latest MP Beta and am unable to see the confidence level on my HUD.
