In this blog, I will attempt to apply the awesome works that have been done by @anbello, @ppoirier, @chobitsfan with a new fiducial marker system: using AprilTags to facilitate indoor non-GPS vision-based positioning.

For those interested, here are the references to related articles:

- Indoor autonomous flight with Arducopter, ROS and Aruco Boards - wiki page and blog post.

- Indoor Autonomous Flight with Cube, ROS and Aruco Board.

- Vision Positioning Experiments using SKYVIPER.

- DetectionExperiment with Visual Odometry - ROVIO part 1 and part 2

- Indoor flight with external navigation data.

1. Before we start

In order to make it work, you will need to know about ROS, Linux, Python, how to configure wifi network, ssh, etc. A plethora of problems can arise with these things, so if you are not familiar with these topics, head over to the dev wiki page as well as tons of other resources on google to get started.

One thing I have learned during the process of working on this lab is that details matter. Depends on your particular hardware, firmware and software setup, you will need to modify the commands to run, packages to install, and parameters to configure. Even the order in which you run commands can give you different results. In case the steps in this blog do not work for you, take a look at the abovementioned articles and the discussion in the blogs, which can give you some ideas on what to try.

To keep the blog brief, below I will lay out the main steps and refer to the relevant wiki pages where possible.

2. System setup

My hardware setup includes:

- A small quadcopter equipped with Pixhack Autopilot, CUAV PX4FLOW, TeraRanger One. With this setup, you can follow the wiki to achieve non-GPS flight with PX4FLOW.

- Raspberry Pi 3 Model B with a 16GB micro SD card running Ubuntu Mate 16.04, connected to a USB camera down-facing to the ground, with the x-axis of the camera to the right.

- Note: There is no restriction on the mounting orientation of the camera beside it being down-facing, but we need to know the camera’s x-axis to modify the parameters later on.

Software setup on RPi:

- install ROS

- install MAVROS

- If the connection between RPi - ArduPilot is established via the UART serial port: change the setting in /boot/config.txt

- connect RPi to ArduPilot with MAVLink

- connect RPi to ArduPilot with MAVROS

- synchronize clock/time.

- Install ROS driver for the camera: I use usb_cam package (sudo apt-get install ros-kinetic-usb-cam). If you use RPi camera module, follow this instruction to enable the camera, then install raspicam_node.

- Install AprilTag library and AprilTag ROS wrapper. I have put together the steps needed here.

- Clone my repo vision_to_mavros, which will listen to the output of AprilTag (tags’ pose in the camera frame), perform necessary transformations, then publish the body pose to a topic that can be remapped to

/mavros/vision_pose/pose.

Prepare the tags board:

- Here is the pdf file of the A3 paper that I used. Depends on your printer setting, the actual size and location of the tags might be slightly different.

- You can design your own tags, print out, measure the true dimension and modify the layout file accordingly.

Camera calibration:

- Follow the ROS wiki to obtain the calibration file for your camera.

- Afterward, replace the

camera_info_urlparam in the launch file with the correct path to the calibration file.

Configure parameters on Mission Planner:

- Params:

AHRS_EKF_TYPE = 2

BRD_RTC_TYPES = 2

EK2_ENABLE = 1

EK3_ENABLE = 0

EK2_GPS_TYPE = 3

EK2_POSNE_M_NSE = 0.1

EK2_VELD_M_NSE = 0.1

EK2_VELNE_M_NSE = 0.1

GPS_TYPE = 0

COMPASS_USE = 0

COMPASS_USE2 = 0

COMPASS_USE3 = 0

SERIAL5_BAUD = 921 (the serial port used to connect to Raspberry Pi)

SERIAL5_PROTOCOL = 1

SYSID_MYGCS = 1 (to accept control from mavros)

VISO_TYPE = 0

- For large frame, the offset between the position of the camera and vehicle’s center-of-gravity can be taken into account through VISO_POS params. I have not tried these params myself but you can read more about this in the wiki.

3. Ground test

First, let’s test each ROS nodes separately and fix any problems that might arise:

- Camera node:

- On RPi:

roslaunch usb_cam usb_cam-test.launch - If RPi is not connected to a display, view the raw image on PC with Linux Ubuntu:

export ROS_MATER_URI=http://<rpi-ip>:11311 && rqt_image_view - Verify that there are images from the camera.

- On RPi:

- MAVROS node:

- On RPi:

roslaunch mavros apm.launch fcu_url:=<tty-port>:<baud-rate> - On another terminal: `rostopic echo /mavros/

- Verify that MAVROS is running OK. For example,

/mavros/statusshould show that FCU is“CONNECTED”.

- On RPi:

- AprilTags node and vision_to_mavros node:

- Make sure the camera_info_url points to the correct path to your camera’s calibration file.

- On RPi:

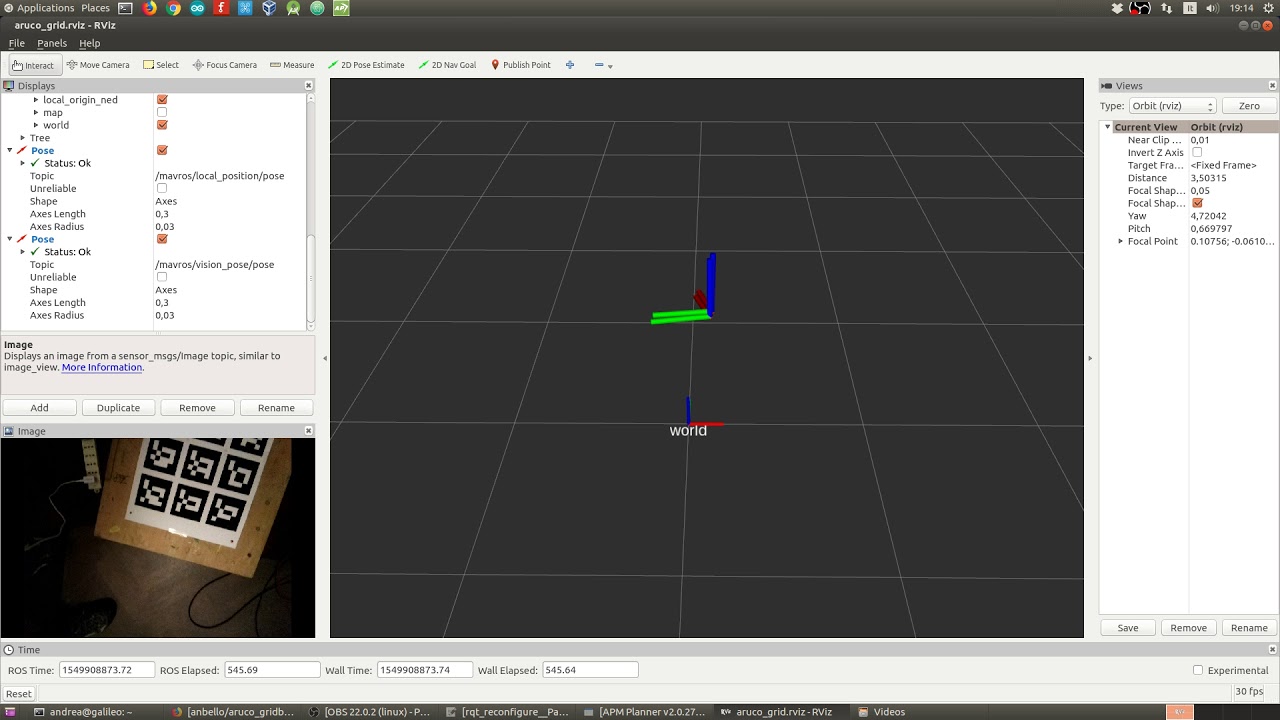

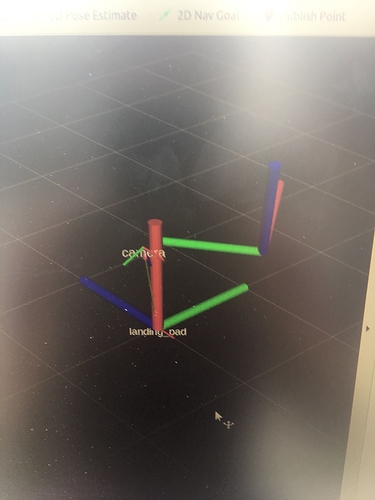

roslaunch vision_to_mavros apriltags_to_mavros.launch - Open up RViz, view topics

/tfand/mavros/vision_pose/poseto the display. With/tf, you should see the camera pose in the tags’ frame, with z-axis pointing downwards. If your camera’s x-axis is pointing to the right, then/mavros/vision_pose/posewill be aligned with body frame. If the camera’s x-axis is pointing in a different direction, you need to modify the params of vision_to_mavros accordingly.

If each node can run without any problem, you can perform ground test:

- On RPi:

roslaunch vision_to_mavros apriltags_to_mavros.launch - View the

/mavros/vision_pose/poseon RViz. Move the vehicle around and see if the pose changes according to the movement, as demonstrated in the video by @anbello :

- Additionally, you can verify vision_pose data on MP or QGC Analyse Widget with the topic

VISION_POSITION_ESTIMATE.x and y. You should see the data coming in. - Before you can use the system, you need to send

SET_GPS_GLOBAL_ORIGINandSET_HOME_POSITIONmessages. There are a few ways to do this:

- On Mission Planner, right click on a point in the map and click

Set EKF Origin Here. If data from/mavros/vision_pose/poseis available, the vehicle icon will appear on the map.

- Send a SET_GPS_GLOBAL_ORIGIN message, to set the origin for the EKF. You will have to hard-code the lat/long coordinates you wish to send. @anbello has a script that does exactly this. The commands to run this script can be found here.

- After the origin of the EKF is set, hold the vehicle up, move around and observe the trajectory of the vehicle on MP.

If the last step is successful, you can go ahead with flight test.

4. Flight test

For external navigation data to be accepted by EKF, the data rate needs to be higher than a certain threshold (the number I saw from another discussion is minimum 10Hz, but I have not found the portion of the code to verify this). If you are also using RPi, firstly we need to tune the params related to computational costs to achieve higher detection rate. In my experiments, the RPi can run AprilTags algorithm at 11Hz when the camera is stable but when there are movement, the rate reduced to only 2-3Hz, which is not sufficient for hovering. The related tuning parameters for AprilTags algo are located in settings.yaml. Specifically:

- Increase tag_decimate will increase dectection rate, at the expense of lower accuracy, and vice versa.

- Increase tag_threads if you are not running anything else and have some CPU to spare.

Now for the flight tests, similar to what @ppoirier did in his blog on Visual Odometry - ROVIO:

- Takeoff in

Stabilizeto check whether the quadcopter is stable. - At a height that the camera can have a good view of the tags, switch to

Alt-Holdto adjust level position. Observe the feedback on RViz as well as Mission Planner to see if tags are detected. - Take a look at MP Map, confirm that AprilTags is still tracking.

- Switch to

Loiter, but always ready to switch back toAlt-Holdif anything goes awry. - Otherwise, the quadcopter should hover stably above the tags.

Conclusion

We have replicated the works of @anbello, @ppoirier with a new fiducial tags system, AprilTags. Due to its limited computational power, the RPi is borderline usable for this type of experiments. Even after a lot of parameters are tuned to facilitate stable hovering, there are just too many limitations in terms of detection range and flight movement. If your RPi cannot handle 10Hz or you need to run something else at the same time, or in general the performance of your experiments are not up to your expectation, I would suggest the following solutions:

- Stream all images data to ground PC, perform all vision-related tasks on ground PC, then send commands back to ArduPilot. Note that the network bandwidth can be the next bottleneck in this case.

- Upgrade from the RPi to a better single-board computer. Up board, NVIDIA Jetson Nano, Google Coral etc. so many new choices coming out. Here is a nice comparison if you are ready to move on from the Raspberry Pi.

- Use a VIO sensor that incorporates both hardware and software, perform all algorithms and provide ready-to-use pose data. The new Intel Realsense T265 is a perfect candidate. And the procedure on how to use it with ArduPilot happens to be my 2019 ArduPilot GSoC proposal.

I hope this blog was helpful to anyone who is interested in this topic. As usual, feel free to comment and I am happy to help with what I know. Remember to check out the other blogs mentioned at the beginning for many, many more info that are not covered here.