Hi AP community! With the conclusion of this year’s Google Summer of Code, I’m excited to share my progress on my GSoC 2024 project.

Project Description

The objective of this project was to develop a High-Altitude Non-GPS navigation technique for UAVs, enabling them to estimate their positions and navigate effectively at high altitudes without relying on GPS. This project involved extensive research alongside intensive development. Multiple research papers were reviewed, and various computer vision and control filter algorithms were tested. Through these efforts, a semi-stable algorithm was successfully developed to navigate in a known environment at high altitudes.

Work Progress and Development

Below is a summary of the progress made during the GSoC program:

1. Development of a High-Altitude Non-GPS Navigation Technique Using SIFT Feature Matching and ORB-Based Map Generation

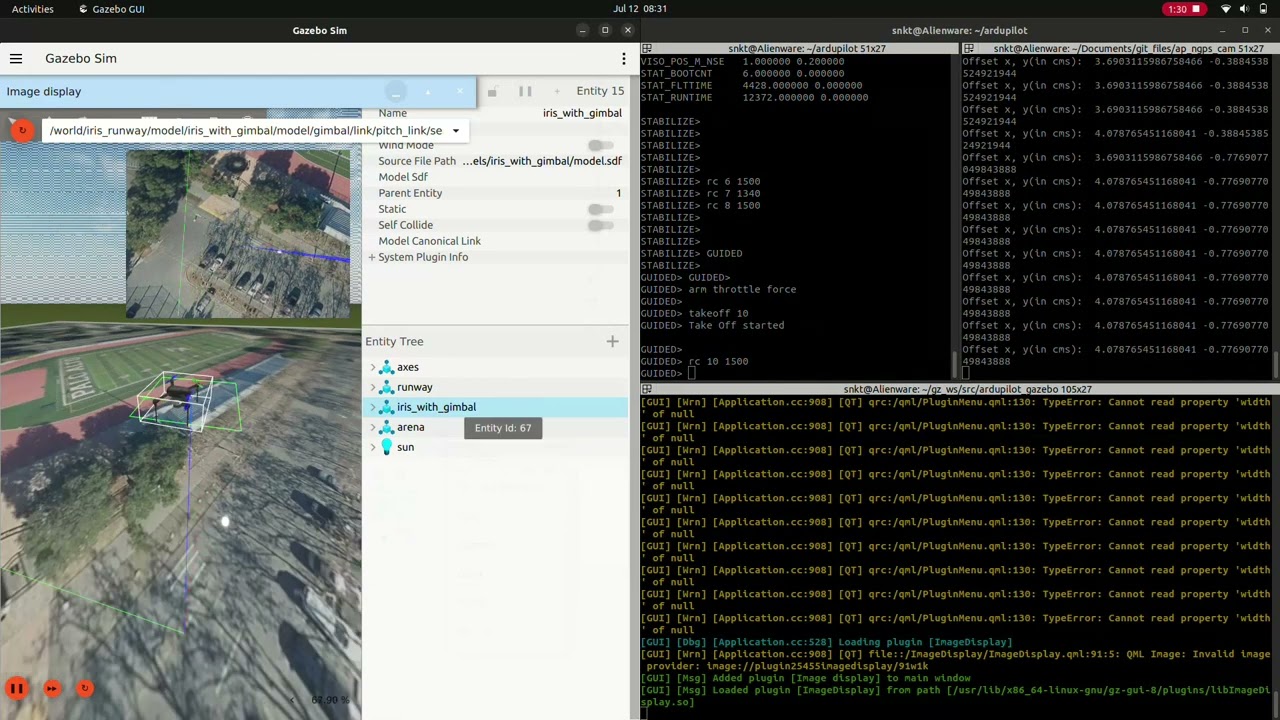

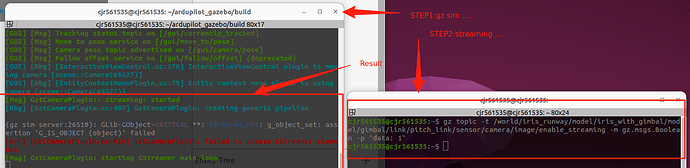

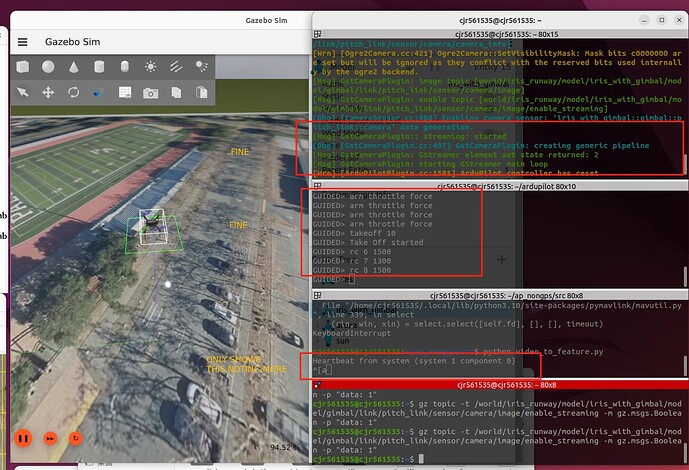

A stable algorithm has been developed that uses SIFT (Scale-Invariant Feature Transform) for real-time feature detection and matching to estimate the UAV’s position. This approach requires a downward-facing, gimbal-stabilized camera.

The algorithm generates a map by stitching together images from a dataset of terrain images in a known environment. Real-time camera frames are then matched against this map to estimate the current position. A pipeline corrects image distortions and improves feature matching so that the UAV’s positional estimates remain accurate and reliable. This includes applying color corrections and geometric corrections, and refining image alignment to compensate for camera lens distortion and varying perspectives. Optimizations are also applied so that feature matching produces output at the rate the EKF requires (a minimum of 4 Hz).
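The last step of this matching, turning a frame-to-map alignment into a position, can be sketched as follows. This is a minimal illustration, not the project's code: it assumes a homography `H` (frame pixels to map pixels) has already been estimated from the feature matches, that the stitched map is north-up with a uniform resolution, and that the function names and the `metres_per_px` value are hypothetical.

```python
def project_point(H, x, y):
    """Apply a 3x3 homography (nested lists, row-major) to pixel (x, y)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity; homography is degenerate")
    return u / w, v / w


def frame_to_local_position(H, frame_size, map_origin_px, metres_per_px):
    """Estimate the camera's ground position relative to a map origin.

    H             : homography mapping frame pixels to map pixels (assumed given)
    frame_size    : (width, height) of the camera frame in pixels
    map_origin_px : map pixel that corresponds to the home location
    metres_per_px : map resolution (assumed uniform, map assumed north-up)
    Returns (north_m, east_m).
    """
    cx, cy = frame_size[0] / 2.0, frame_size[1] / 2.0
    mx, my = project_point(H, cx, cy)
    east_m = (mx - map_origin_px[0]) * metres_per_px
    # Image y grows downwards, which is south on a north-up map.
    north_m = -(my - map_origin_px[1]) * metres_per_px
    return north_m, east_m


# Example: a pure-translation homography placing the frame inside the map.
H = [[1.0, 0.0, 400.0],
     [0.0, 1.0, 250.0],
     [0.0, 0.0, 1.0]]
north, east = frame_to_local_position(H, (640, 480), (500, 500), 0.5)
```

With a gimbal-stabilized, downward-facing camera the frame centre is a reasonable proxy for the point directly below the vehicle, which is why only the centre pixel is projected here.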

The final implementation enables the UAV to maintain a stable position in environments where GPS signals are unavailable, significantly improving its capability for high-altitude operations.

Here’s a video of the implementation:

2. Optical Flow Method

During the program, efforts were made to implement an optical flow-based algorithm to estimate the UAV’s position relative to its home location. A modified version of the Lucas-Kanade method was implemented to enhance the efficiency of the computed flow vectors. This approach also requires the same camera setup as used in the SIFT-based method.

The modifications include a multi-scale pyramid representation to capture larger movements and outlier rejection techniques, such as RANSAC, to filter noise out of the flow vectors. Upcoming versions of this algorithm will also integrate IMU data to improve position accuracy.
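The outlier-rejection idea can be sketched in a few lines. This is a simplified illustration under stated assumptions: the flow field is modelled as a single dominant translation (reasonable for a stabilized nadir camera over distant terrain), the threshold and iteration count are made-up values, and the pyramid stage is not shown.

```python
import random


def ransac_mean_flow(flows, threshold=1.0, iterations=100, seed=0):
    """RANSAC-style estimate of the dominant flow vector.

    flows     : list of (dx, dy) flow vectors in pixels
    threshold : max distance (pixels) from the hypothesis to count as an inlier
    Returns (mean_flow, inliers).
    """
    if not flows:
        raise ValueError("no flow vectors supplied")
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    best_inliers = []
    for _ in range(iterations):
        # A translation model needs only one sample as its hypothesis.
        cand = rng.choice(flows)
        inliers = [f for f in flows
                   if (f[0] - cand[0]) ** 2 + (f[1] - cand[1]) ** 2
                   <= threshold ** 2]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    n = len(best_inliers)
    mean = (sum(f[0] for f in best_inliers) / n,
            sum(f[1] for f in best_inliers) / n)
    return mean, best_inliers


# Four consistent vectors plus two gross outliers (e.g. bad tracks).
flows = [(2.0, 1.0), (2.1, 0.9), (1.9, 1.1), (2.0, 1.0),
         (15.0, -8.0), (-12.0, 6.0)]
mean_flow, inliers = ransac_mean_flow(flows)
```

Averaging only the consensus set keeps a handful of bad tracks from dragging the mean flow, which matters because any bias here integrates directly into position drift.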

As soon as the drone takes off, flow is calculated and the data is passed to the firmware using one of two methods:

a. By feeding the flow values directly to the firmware using the OPTICAL_FLOW MAVLink message.

b. By calculating the position on the offboard computer from heading data and the averaged flow vectors, and feeding it to AP as vision position estimates.
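Method (b) can be sketched as below. The conversion is an assumption-laden illustration rather than the project's implementation: it assumes a pinhole camera pointing straight down over flat ground, that the top of the image points towards the vehicle's nose, and that heading is measured clockwise from north. The function name and the altitude and focal-length values are hypothetical.

```python
import math


def flow_to_ne_step(flow_px, altitude_m, focal_px, heading_deg):
    """Convert an average flow vector into a north/east displacement.

    flow_px     : (dx, dy) average feature motion in the image, in pixels
    altitude_m  : height above ground (assumed known, flat terrain)
    focal_px    : focal length in pixels (pinhole model)
    heading_deg : vehicle heading, clockwise from north
    """
    fx, fy = flow_px
    metres_per_px = altitude_m / focal_px
    # Ground features move opposite to the vehicle: flying forward pushes
    # them down the image (+y), flying right pushes them left (-x).
    forward_m = fy * metres_per_px
    right_m = -fx * metres_per_px
    psi = math.radians(heading_deg)
    north_m = forward_m * math.cos(psi) - right_m * math.sin(psi)
    east_m = forward_m * math.sin(psi) + right_m * math.cos(psi)
    return north_m, east_m


# Accumulate two steps relative to home: forward while heading east,
# then a sideways slip to the right while heading north.
position = [0.0, 0.0]  # [north_m, east_m]
for flow, heading in [((0.0, 10.0), 90.0), ((-5.0, 0.0), 0.0)]:
    dn, de = flow_to_ne_step(flow, altitude_m=100.0, focal_px=1000.0,
                             heading_deg=heading)
    position[0] += dn
    position[1] += de
```

The accumulated north/east offsets are what would then be sent on as vision position estimates; the sign conventions above would need to be checked against the actual camera mounting.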

Both methods have shown promising results when tested on pre-recorded video frames captured by a drone and are currently being implemented in simulation.

Thanks to @timtuxworth for providing these videos.

To further improve the algorithm, feature matching and optical flow are fused. This approach is beneficial for maintaining the EKF’s accuracy: optical flow provides continuous position estimates at a high rate, while feature matching periodically resets the position estimates from optical flow. This helps to prevent the drift that can occur due to the inherent limitations of optical flow.
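The fusion scheme can be illustrated with a toy example. This is a minimal sketch under assumptions, not the project's code: the class name is hypothetical, the flow error is modelled as a fixed 5% scale error, and a feature-matching fix is taken at face value with no gating.

```python
class FlowFeatureFusion:
    """High-rate flow integration with periodic feature-matching resets."""

    def __init__(self, north_m=0.0, east_m=0.0):
        self.north_m = north_m
        self.east_m = east_m
        self.resets = 0

    def on_flow_step(self, d_north_m, d_east_m):
        """Integrate one optical-flow displacement (fast, but drifts)."""
        self.north_m += d_north_m
        self.east_m += d_east_m

    def on_feature_fix(self, north_m, east_m):
        """Replace the estimate with a feature-matching fix (slow, drift-free).

        Returns the correction that was applied, useful for monitoring drift.
        """
        correction = (north_m - self.north_m, east_m - self.east_m)
        self.north_m, self.east_m = north_m, east_m
        self.resets += 1
        return correction


fusion = FlowFeatureFusion()
for step in range(1, 21):
    # True motion is 1.0 m north per step; flow over-reports it by 5%.
    fusion.on_flow_step(1.05, 0.0)
    if step % 10 == 0:
        # Every tenth step a feature match against the map is available.
        correction = fusion.on_feature_fix(float(step), 0.0)
```

Without the resets the estimate would be 1 m off after 20 steps and keep growing; with them the error is bounded by whatever accumulates between two fixes.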

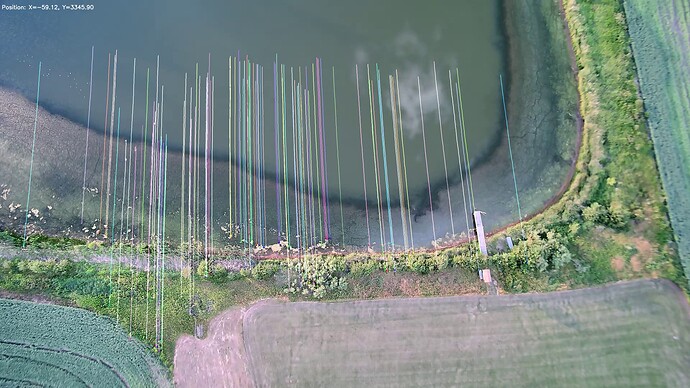

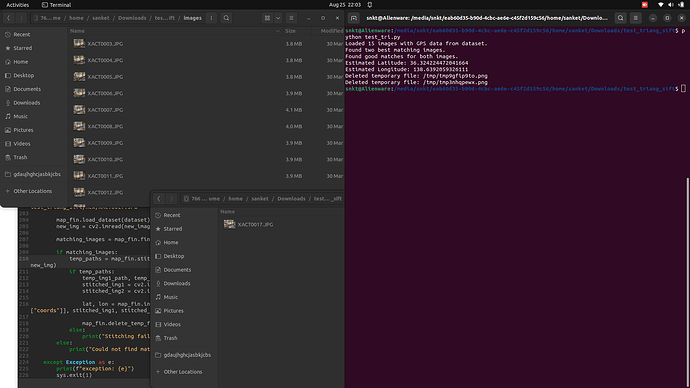

c. Location interpolation based on GPS EXIF data

The first implementation (described in point ‘a’) captures the terrain image at the initial takeoff position and uses that as a reference to calculate the local position. This approach instead leverages the GPS EXIF data from a dataset of images with known locations, combined with feature matching, to interpolate the position of an unknown location (the real-time camera frame). When a camera frame is received, the algorithm searches the dataset for images that might contain references matching the unknown frame. The GPS EXIF data from these known images is then used to estimate the position of the unknown image through triangulation, providing absolute location data (latitude and longitude) for the drone.
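As a rough illustration of the idea, the sketch below converts EXIF-style degrees/minutes/seconds values to decimal degrees and then blends the positions of the matching reference images. Note the swap: it uses a match-count-weighted average as a simple stand-in for the triangulation described above, and the function names and example values are hypothetical.

```python
def dms_to_decimal(dms, ref):
    """Convert an EXIF-style (degrees, minutes, seconds) triple to degrees.

    ref is the hemisphere letter stored alongside it: 'N', 'S', 'E' or 'W'.
    """
    degrees, minutes, seconds = dms
    value = degrees + minutes / 60.0 + seconds / 3600.0
    return -value if ref in ("S", "W") else value


def interpolate_position(references):
    """Blend reference positions, weighting each by its match strength.

    references : list of (lat_deg, lon_deg, weight), where weight could be
                 the number of good feature matches with the live frame.
    A plain weighted mean is only reasonable over short distances.
    """
    total = sum(w for _, _, w in references)
    if total <= 0:
        raise ValueError("no usable reference images")
    lat = sum(lat * w for lat, _, w in references) / total
    lon = sum(lon * w for _, lon, w in references) / total
    return lat, lon


lat_a = dms_to_decimal((12, 30, 0.0), "N")
lon_a = dms_to_decimal((77, 15, 0.0), "W")

# Two reference images; the second shares three times as many matches.
estimate = interpolate_position([
    (10.0, 20.0, 1),
    (10.002, 20.004, 3),
])
```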

While this technique is highly beneficial for estimating the absolute coordinates of the drone, it is too slow to meet the requirements of the EKF. A more efficient way to utilize this data is through dead reckoning. ArduPilot provides dead reckoning capabilities using wind estimates, which allows the copter to maintain stability for up to 30 seconds before becoming highly unstable.

To enhance stability and support long-duration flight, the calculated absolute coordinates can be used to reset the EKF position externally using the MAV_CMD_EXTERNAL_POSITION_ESTIMATE command. Resetting the EKF periodically prevents drift from accumulating, which keeps the drone flying over longer durations and even enables navigation. As highlighted in this pull request by Tridge, this method can handle much longer delays than normal, whereas alternatives such as vision pose estimates with that much lag would fall beyond the fusion horizon. Importantly, this reset affects only the position and leaves the velocity unaffected.
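The sketch below shows how the fields of such a command might be assembled for a COMMAND_INT message. It reflects my understanding of the MAVLink definition (param1 transmission time, param2 processing time, param3 accuracy, latitude and longitude as integer degrees times 1e7, altitude unused) and should be checked against the current MAVLink XML before use. The helper name is hypothetical, and nothing is actually sent here.

```python
import math


def external_position_estimate_fields(lat_deg, lon_deg, accuracy_m,
                                      transmission_time_s, processing_time_s):
    """Build COMMAND_INT fields for an external position reset.

    Assumed layout, to be verified against the MAVLink definition:
      param1 = time the message was sent (sender's time domain, seconds)
      param2 = time spent processing the sensor data (seconds)
      param3 = estimated 1-sigma accuracy in metres (NaN if unknown)
      x, y   = latitude, longitude in degrees * 1e7
      z      = altitude, unused, sent as NaN
    """
    return {
        "command": "MAV_CMD_EXTERNAL_POSITION_ESTIMATE",
        "param1": float(transmission_time_s),
        "param2": float(processing_time_s),
        "param3": float(accuracy_m),
        "param4": 0.0,
        "x": int(round(lat_deg * 1e7)),
        "y": int(round(lon_deg * 1e7)),
        "z": float("nan"),
    }


# Example: a fix that took 2.5 s to compute on the offboard computer.
fields = external_position_estimate_fields(
    lat_deg=-35.3632621, lon_deg=149.1652374, accuracy_m=15.0,
    transmission_time_s=120.0, processing_time_s=2.5)
```

Reporting the processing time honestly is the point of this command: it lets the autopilot account for how stale the fix is, which is what makes slow image-matching results usable at all.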

This method is still undergoing testing, and I will keep this thread updated with further developments.

Findings

Based on the research and development done on this project, some useful findings are listed below:

1. SIFT vs. ORB

- SIFT proved to be highly reliable for accurate feature detection and matching, especially in scenarios with significant variation in scale and rotation. However, it comes with a high computational cost and slower performance, which can be a limitation in real-time applications. SIFT is highly recommended in dynamic environments and in setups where a gimbal is not present.

- ORB (Oriented FAST and Rotated BRIEF), while faster and less computationally intensive than SIFT, showed lower accuracy in challenging lighting conditions and when dealing with significant distortions. ORB is preferable in static environments, with good image resolution, and in gimbal-based setups.

- AKAZE offers a balance between speed and accuracy, being more computationally efficient than SIFT and providing better feature matching than ORB in some cases. It could be a good alternative to the ORB-based position estimation.

- Neural network-based descriptors (e.g., SuperPoint) can offer high accuracy and robustness but require more processing power. These could be explored for future implementations, especially in high-performance UAVs equipped with powerful onboard computers like Jetson.
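One practical difference between these descriptors is how they are compared: SIFT descriptors are float vectors compared with Euclidean distance, while ORB descriptors are binary strings compared with Hamming distance. The sketch below applies a nearest-neighbour ratio test with either metric. It is an illustration only; the post does not say which match filter was used, the tiny descriptors are made up, and the 0.75 ratio is an assumed value.

```python
import math


def l2(a, b):
    """Euclidean distance, as used for float descriptors such as SIFT."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hamming(a, b):
    """Bit difference, as used for binary descriptors such as ORB.

    Descriptors are packed into Python ints for this sketch.
    """
    return bin(a ^ b).count("1")


def ratio_test_matches(query, train, distance, ratio=0.75):
    """Keep a match only if it is clearly better than the runner-up."""
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((distance(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue  # the test needs two neighbours to compare
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


# Float descriptors: the first query is distinctive, the second ambiguous.
float_matches = ratio_test_matches(
    [(0.0, 1.0), (5.0, 5.0)],
    [(0.1, 1.0), (4.0, 0.0), (9.0, 9.0)],
    l2)

# Binary descriptors: one bit away from the first candidate, seven from the other.
binary_matches = ratio_test_matches(
    [0b10110010],
    [0b10110011, 0b01001100],
    hamming)
```

Hamming distance on packed bits is far cheaper than a float distance over a 128-element vector, which is a large part of why ORB matching runs faster.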

2. Integration of Optical Flow with Feature Matching

- Fusing optical flow with feature matching significantly enhances navigation stability. Optical flow alone is effective for high-rate position estimates, but it tends to drift over time. By periodically resetting the position estimate using feature matching, the overall drift can be minimized, making the system more reliable for longer-duration flights.

3. IMU-based Interpolation for position estimation

- Using IMU-based interpolation helps provide continuous position and orientation estimates between known states by leveraging high-rate IMU data. This approach fills the gaps between more accurate but less frequent position updates from GPS or feature matching, reducing drift and improving overall stability. By integrating IMU measurements, this method ensures smoother navigation and maintains reliable state estimation, especially in environments where direct positioning data is intermittent or unavailable.
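A toy version of this gap-filling is sketched below. It assumes the accelerations have already been rotated into the north/east frame and gravity-compensated, which is the hard part in practice and is not shown; the function names and the numbers are hypothetical.

```python
def propagate(state, accel_ne, dt):
    """Advance (north, east, v_north, v_east) by one IMU sample.

    accel_ne : (a_north, a_east) in m/s^2, assumed already in the
               navigation frame with gravity removed
    dt       : sample period in seconds
    """
    north, east, v_north, v_east = state
    a_north, a_east = accel_ne
    north += v_north * dt + 0.5 * a_north * dt * dt
    east += v_east * dt + 0.5 * a_east * dt * dt
    v_north += a_north * dt
    v_east += a_east * dt
    return (north, east, v_north, v_east)


def apply_fix(state, north, east):
    """Snap position to an absolute fix, leaving velocity untouched."""
    return (north, east, state[2], state[3])


# One second of 100 Hz IMU data at a steady 1 m/s^2 northwards...
state = (0.0, 0.0, 0.0, 0.0)
for _ in range(100):
    state = propagate(state, (1.0, 0.0), 0.01)
predicted_north = state[0]

# ...then a slower feature-matching fix arrives and corrects the position.
state = apply_fix(state, 0.48, 0.0)
```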

Future Work

1. Hierarchical Localization Optimization

Incorporating a hierarchical localization approach can further enhance the system’s scalability and efficiency. By using a coarse-to-fine localization strategy, the UAV can quickly narrow down the search area using broader, less detailed information before applying detailed feature matching for precise localization.

It can be implemented as a two-step process: coarse-level localization using a Vector of Locally Aggregated Descriptors (VLAD), followed by fine-level localization using SIFT features. The coarse step quickly narrows down the search area, and detailed feature matching is then applied only within that area, improving both accuracy and computational efficiency.
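The coarse step can be sketched as follows, assuming the visual-word centroids have already been learned (for example with k-means, not shown). The two-dimensional descriptors and tile names are toy values chosen for readability; real SIFT descriptors have 128 dimensions.

```python
import math


def nearest(centroids, d):
    """Index of the centroid closest to descriptor d."""
    return min(range(len(centroids)),
               key=lambda j: sum((x - c) ** 2
                                 for x, c in zip(d, centroids[j])))


def vlad(descriptors, centroids):
    """Aggregate residuals to each descriptor's nearest centroid, then L2-normalize."""
    dim = len(centroids[0])
    acc = [[0.0] * dim for _ in centroids]
    for d in descriptors:
        j = nearest(centroids, d)
        for i in range(dim):
            acc[j][i] += d[i] - centroids[j][i]
    flat = [v for row in acc for v in row]
    norm = math.sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat


def coarse_candidates(query_vlad, tile_vlads, top_k):
    """Rank map tiles by cosine similarity and keep the best top_k."""
    scored = sorted(((sum(a * b for a, b in zip(query_vlad, v)), name)
                     for name, v in tile_vlads.items()), reverse=True)
    return [name for _, name in scored[:top_k]]


centroids = [(0.0, 0.0), (10.0, 10.0)]  # assumed pre-trained visual words
tiles = {
    "A": vlad([(1.0, 0.0), (11.0, 10.0)], centroids),
    "B": vlad([(0.0, 1.0), (10.0, 11.0)], centroids),
    "C": vlad([(-1.0, 0.0), (9.0, 10.0)], centroids),
}
query = vlad([(2.0, 0.0), (12.0, 10.0)], centroids)
shortlist = coarse_candidates(query, tiles, top_k=2)
```

Only the tiles in `shortlist` would then go through full SIFT matching, so the expensive step runs on a handful of candidates instead of the whole map.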

2. Integration of Approximate Nearest Neighbour (ANN) Search Techniques

Implementing ANN search methods like Product Quantization (PQ) and Inverted File (IVF) indexing can accelerate the matching process between real-time camera frames and the pre-computed map. These techniques reduce the complexity and memory requirements, making real-time processing more feasible. Instead of relying on a stitched map, the database will store visual words and descriptors, minimizing memory requirements.

These ANN methods speed up feature matching through quantization-based indexing: feature vectors are stored compactly and only a small part of the index is searched for each query, instead of comparing against every stored descriptor.
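The inverted-file half of this idea can be sketched in plain Python. This is a toy illustration under assumptions: the coarse centroids are taken as given (normally learned with k-means), the class name and data are hypothetical, and the product-quantization compression of the stored vectors is not shown.

```python
def sqdist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class IVFIndex:
    """Inverted-file index: one bucket of vectors per coarse centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = [[] for _ in centroids]

    def add(self, vec_id, vec):
        """File the vector under its nearest coarse centroid."""
        j = min(range(len(self.centroids)),
                key=lambda k: sqdist(vec, self.centroids[k]))
        self.lists[j].append((vec_id, vec))

    def search(self, query, nprobe=1):
        """Scan only the nprobe buckets whose centroids are closest to the query."""
        order = sorted(range(len(self.centroids)),
                       key=lambda k: sqdist(query, self.centroids[k]))
        best = None
        for j in order[:nprobe]:
            for vec_id, vec in self.lists[j]:
                d = sqdist(query, vec)
                if best is None or d < best[0]:
                    best = (d, vec_id)
        return best[1] if best is not None else None


index = IVFIndex(centroids=[(0.0, 0.0), (10.0, 0.0)])
for vec_id, vec in [("a", (1.0, 1.0)), ("b", (-1.0, 0.0)),
                    ("c", (9.0, 1.0)), ("d", (11.0, -1.0))]:
    index.add(vec_id, vec)

result = index.search((8.5, 0.5), nprobe=1)
```

The search is approximate because a true nearest neighbour sitting just across a bucket boundary can be missed; raising `nprobe` trades speed back for recall.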

Reference to the approach can be found in this thesis. Thanks to @radiant_bee for mentioning this thesis.

I want to extend my thanks to my incredible mentors, @rmackay9 and @tridge, for their invaluable guidance. I also want to thank @rhys for his immense support in the gimbal testing and simulation parts.

Related Pull requests and contributions

This project is a work in progress, and I am committed to its successful completion, continuously refining and improving the techniques to achieve reliable high-altitude, non-GPS navigation for UAVs.