Hi,

I would like to propose a new packet scheduler for the frsky passthrough telemetry.

I will start describing the current scheduler and why proposing a new one.

I will briefly analyze the packet scheduler of the mavlinkToPassthrough project.

Since passthrough telemetry is decoded with LUA scrips it’s important to note that the screen on OpenTX radios is updated at max 20Hz, we’ll see below why this is important.

There are 2 versions of the current scheduler, the original one and the revised one.

All stable releases run the original scheduler, master as of now implements the “revised” version.

The revised one includes a patch I’ve submitted to prevent messages from stalling all telemetry except attitude.

Quick and simple intro on how frsky s.port telemetry works.

The receiver sends on the bus a polling signal every 12ms asking for data from a specific sensor ID.

The receiver will poll the IDs in sequence to find which one is present. If only one physical ID is found, the receiver will alternate the sensor polling and the search (present sensor, next ID to search, present sensor, next ID and so on).

If more sensors are found, the poll sequence returns almost to a normal search pattern.

There are a total of 28 sensors.

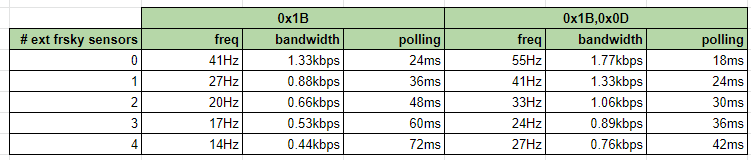

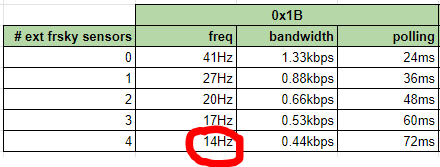

Ardupilot responds as sensor 0x1B.

If ardupilot is the only “sensor” on the bus (no other frsky physical sensors) than it will get a chance to send telemetry every 24ms i.e. every 2 polling (slots)

If there are other frsky sensors on the bus, let’s say 2 more sensors, than ardupilot will have a chance to send telemetry (free slot) every 48ms.

A polling sequence would be:

0x1B,S1,S2,AA,0x1B,S1,S2,BB,0x1B,S1,S2,CC

0x1B is polled every 4 slots i.e.48ms.

If ardupilot could use more sensors by responding to polling for 0x1B and let’s say 0x0D available slots would increase and bandwidth as well

please note that:

- as the number of external frsky sensors increase, the frequency of free slots and bandwidth decrease while the period between free slots increases.

- by responding to polls for sensors 0x1B and 0x0D bandwidth increases

The passthrough telemetry sends 9 packet types, for each packet except for GPS it uses 1 slot.

the packet types are:

- 0x800 GPS coordinates (1 packet for Lat and 1 packet for Lon)

- 0x5000 statustext messages (1 packet = 1 chunk, messages are split in chunks of 4 bytes)

- 0x5001 AP status

- 0x5002 gps status

- 0x5003 battery 1

- 0x5004 home status

- 0x5005 vel and yaw

- 0x5006 attitude and range

- 0x5007 parameters

- 0x5008 battery 2

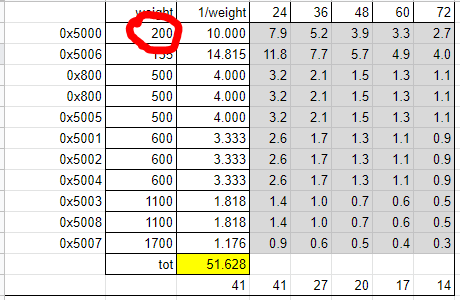

Packet scheduler in current stable releases

This is a simplified explanation of the logic behind the current scheduler:

50% of all available bandwidth is used for attitude packets

of the remaining bandwidth:

if there are messages send them otherwise share what’s left between all other telemetry packets.

if there’s nothing to send send attitude.

Note: messages are sent 3 times each

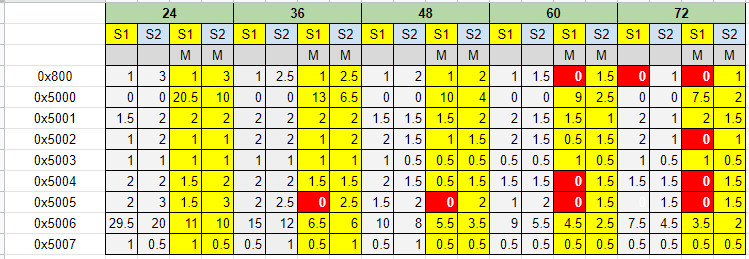

Packet troughputs with no external frsky sensors,1 battery, no pending messages to send:

- 0x800 1 packet/s

- 0x5000, 0 packets/s

- 0x5001, 1.5 packets/s

- 0x5002, 1 packet/s

- 0x5003, 1 packet/s

- 0x5004, 2 packets/s

- 0x5005, 2 packets/s

- 0x5006, 29.5 packets/s

- 0x5007, 1 packet/s

Packet troughputs with no external frsky sensors,1 battery, with pending messages to send:

- 0x800 1 packet/s

- 0x5000, 20 packets/s

- 0x5001, 0 packets/s

- 0x5002, 0 packet/s

- 0x5003, 0 packet/s

- 0x5004, 0 packets/s

- 0x5005, 0 packets/s

- 0x5006, 20.5 packets/s

- 0x5007, 1 packet/s

Notes:

- the scheduler saturates all available bandwidth

- if there are pending messages no other packets but parameters get a chance to be sent (stall)

- bandwidth used for attitude is very high and all packets in excess of 20Hz are wasted, so with no pending messages 25% of the bandwith is wasted.

- GPS requires 2 packets and there’s no guarantee they will be sent in a tight sequence, this leads to gps coordinates being sapled at different intervals

- no built in fairness, packets with high frequencies can stall packets with lower frequencies

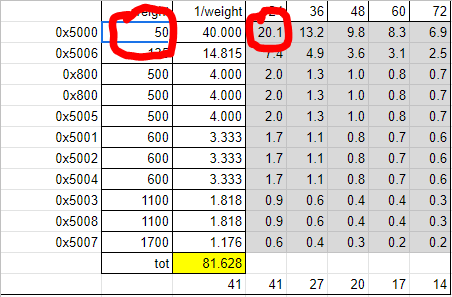

Packet scheduler in current master

This version of the scheduler has a patch to correct the biggest issue in the stable scheduler: telemetry stall while sending messages.

This is a simplified explanation of the logic behind the scheduler in master:

50% of all available bandwidth is used for messages only if there are pending messages.

of the remaining bandwidth:

50% is used for attitude packets

share what’s left between all other telemetry packets.

if there’s nothing to send send attitude.

Note: messages are sent 3 times each

Packet troughputs with no external frsky sensors,1 battery, no pending messages to send:

- 0x800 1 packet/s

- 0x5000, 0 packets/s

- 0x5001, 1.5 packets/s

- 0x5002, 1 packet/s

- 0x5003, 1 packet/s

- 0x5004, 2 packets/s

- 0x5005, 2 packets/s

- 0x5006, 29.5 packets/s

- 0x5007, 1 packet/s

Packet troughputs with no external frsky sensors,1 battery, with pending messages to send:

- 0x800 1 packet/s

- 0x5000, 20.5 packets/s

- 0x5001, 2 packets/s

- 0x5002, 1 packet/s

- 0x5003, 1 packet/s

- 0x5004, 1.5 packets/s

- 0x5005, 1.5 packets/s

- 0x5006, 11 packets/s

- 0x5007, 1 packet/s

Notes:

- the scheduler saturates all available bandwidth

- pending messages no longer stall other telemetry types

- bandwidth used for attitude is very high and all packets in excess of 20Hz are wasted, so with no pending messages 25% of the bandwith is still wasted.

- with pending messages bandwidth for attitude is around 10Hz

- GPS requires 2 packets and there’s no guarantee they will be sent in a tight sequence, this leads to gps coordinates being sapled at different intervals

- no built in fairness, packets with high frequencies can stall packets with lower frequencies

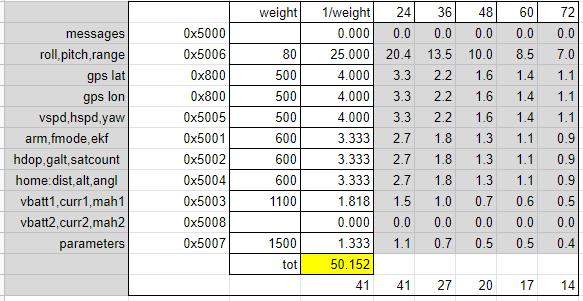

The teensy round robin scheduler

Thanks to the MavlinkToPassthroug project I had access to another well tested packet scheduler for passthrough telemetry.

To be able to compare Eric’s scheduler with the revised ardupilot one I implemented it in the current master branch.

This scheduler is round robin, each packet type is always given a chance to be sent in the same order. You can have slots that are not used but when used relative packet ordering is always guaranteed.

I slightly modified it to maximize message and attitude bandwidth by letting messages donate their slots to attitude when the message queue is empty.

Packet troughputs with no external frsky sensors,1 battery, no pending messages to send:

0x800 3 packets/s

0x5000 0 packets/s

0x5001 2 packets/s

0x5002 2 packets/s

0x5003 1 packet/s

0x5004 2 packet/s

0x5005 3 packet/s

0x5006 15 packet/s

0x5007 0.5 packet/s

Packet troughputs with no external frsky sensors,1 battery, with pending messages to send:

0x800 3 packets/s

0x5000 8 packets/s

0x5001 2 packets/s

0x5002 2 packets/s

0x5003 1 packet/s

0x5004 2 packet/s

0x5005 3 packet/s

0x5006 8 packet/s

0x5007 0.5 packet/s

Notes:

- does not saturate bandwidth (uses roughly 66%)

- very fair scheduler

- messages and attitude do not interfere with other packet rates

- inherent limit on max bandwidth for the round robin nature imposes to cycle all packet types before returning to the same one (I could not force it to have more than 15Hz for attitude for instance)

- much better GPS coordinates sampling

- simple and predictable

A note about messages

The current scheduler sends each message 3 times, in the idea of the original author sending each message 3 times would ensure transmission in case of bad link or low rssi.

I guess that this requirement imposed to dedicate a huge bandwidth to messages and model the original scheduler the way it is as a consequence.

But if we have a bad link and are experiencing packet loss why saturate what’s left of it with messages?

Wouldn’t this only make it worst?

With this in mind I propose to relax this constraint and send messages twice as an acceptable compromise.

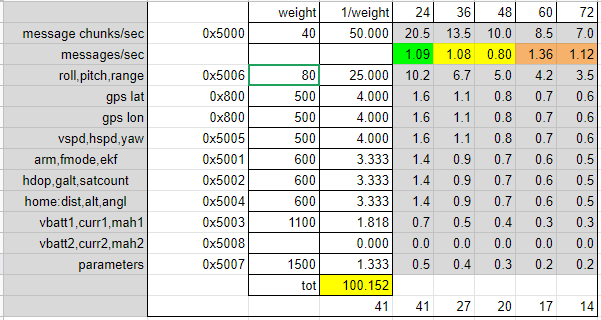

A new scheduler proposal

What to expect from a good scheduler?

- 20 packets/s for attitude but not any higher

- good data rate for messages without stalling other packet types

- 3 packets/s for GPS and vspeed for smooth vario performance

- 2 packets/s for AP,Home and GPS status

- message chunks sent 2 times

- user selectable rates or adaptive rates

My proposed scheduler tracks how long each packet has been waiting to be sent after the scheduled time and always sends the packet that waited the most.

If two packets are equally late send the one with the lower frequency (smaller chances to be sent).

Message slots can be donated to attitude

Battery 2 slots can be donated to battery 1

I implemented in ardupilot and this is what I got

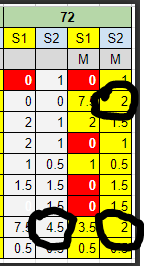

Packet troughputs with no external frsky sensors,1 battery, no pending messages to send:

0x800 (GPS) 3 packets/s

0x5000, 0 packets/s

0x5001, 2 packets/s

0x5002, 2 packet/s

0x5003, 1 packet/s

0x5004, 2 packets/s

0x5005, 3 packets/s

0x5006, 20 packets/s

0x5007, 0.5 packet/s

Packet troughputs with no external frsky sensors,1 battery, with pending messages to send:

0x800 (GPS) 3 packets/s

0x5000, 10 packets/s

0x5001, 2 packets/s

0x5002, 2 packet/s

0x5003, 1 packet/s

0x5004, 2 packets/s

0x5005, 3 packets/s

0x5006, 10 packets/s

0x5007, 0.5 packet/s

I bench and flight tested it

In order to compare the results I added a parameter to ardupilot to switch from one scheduler to the others at run time.

The numbers above have been obtained by using a lua script that measured the actual packets received by a modified version of my telemetry script.

feedback is welcome.