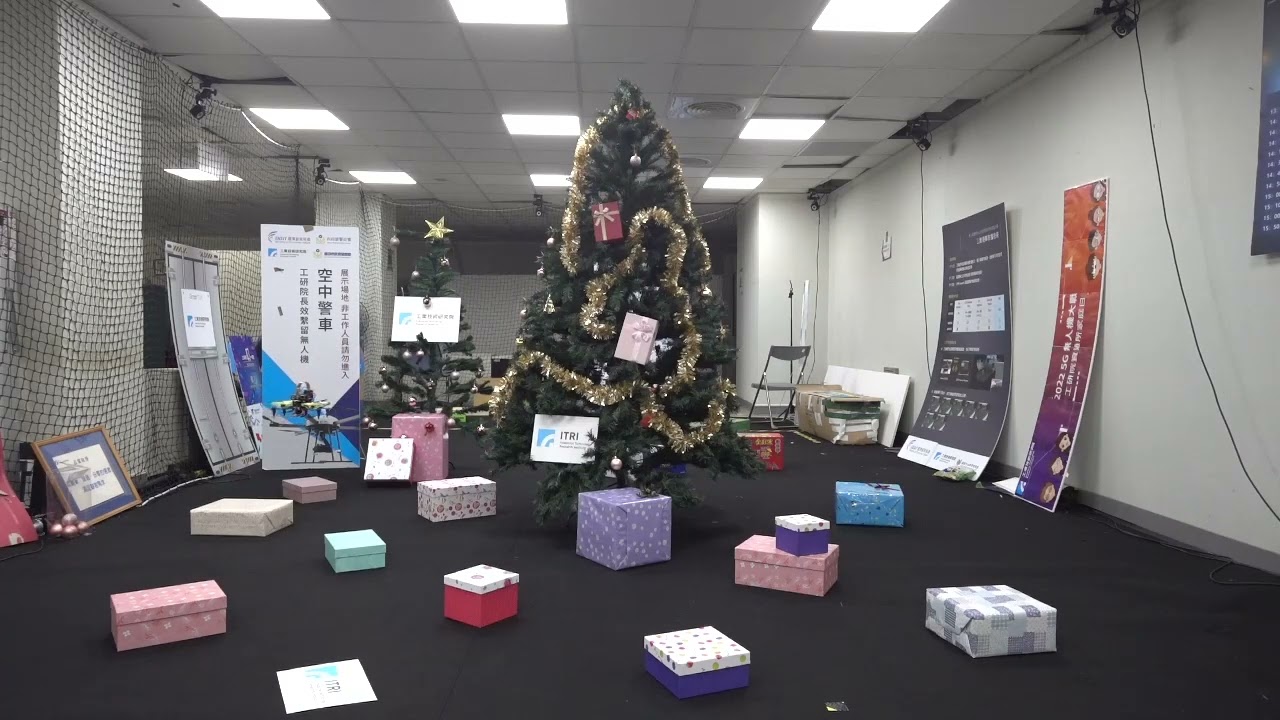

A low-cost VIO system small enough to be carried by a 149 mm quad

Loiter flight test video and log

Guided mode waypoint flight

@LuckyBird wrote a very good post on integrating ArduPilot with the Intel RealSense T265 camera. However, with the T265 discontinued, we need another VIO solution. The goal of this project is to build a small, low-cost VIO system from common hardware.

This project is based on VINS-Mono, a real-time SLAM framework for monocular visual-inertial systems created by the HKUST Aerial Robotics Group. Many thanks for their great work.

Hardware

Raspberry Pi 4

Arducam OV9281 global shutter camera

Software

Raspberry Pi OS (Bullseye)

ROS Noetic

A slightly modified VINS-Mono

A slightly modified ArduPilot

A slightly modified libcamera-apps

mavlink-udp-proxy

Enable OV9281

Add the following lines to /boot/config.txt:

camera_auto_detect=0

dtoverlay=ov9281
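If you prefer to script this edit, here is a minimal Python sketch (the function name `set_config` is hypothetical). Plain `key=value` options such as `camera_auto_detect` are rewritten in place, so a stock `camera_auto_detect=1` does not linger, while `dtoverlay` lines, which may legitimately repeat, are only appended when missing. Appended lines land in whatever conditional section (`[all]`, `[pi4]`, ...) closes the file, so check that it is `[all]`. Writing the real file needs root.

```python
from pathlib import Path


def set_config(text: str, settings: list[str]) -> str:
    """Return config.txt text with each required setting present exactly as given."""
    out = text.splitlines()
    for setting in settings:
        key = setting.split("=", 1)[0]
        if setting in (line.strip() for line in out):
            continue  # already present, nothing to do
        if key != "dtoverlay":
            # Plain key=value option: overwrite any existing uncommented value.
            replaced = False
            for i, line in enumerate(out):
                if line.strip().startswith(key + "="):
                    out[i] = setting
                    replaced = True
            if replaced:
                continue
        out.append(setting)  # dtoverlay lines can repeat, so only append
    return "\n".join(out) + "\n"


OV9281_SETTINGS = ["camera_auto_detect=0", "dtoverlay=ov9281"]

if __name__ == "__main__":
    cfg = Path("/boot/config.txt")  # run with sudo
    cfg.write_text(set_config(cfg.read_text(), OV9281_SETTINGS))
```

Running it twice leaves the file unchanged, so it is safe to keep in a setup script. Reboot afterwards for the overlay to load.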

Connect the Pi serial port to the FC

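Add the following lines to /boot/config.txt:

enable_uart=1

dtoverlay=disable-bt

then run sudo systemctl disable hciuart. Disabling Bluetooth returns the Pi 4's full PL011 UART (/dev/ttyAMA0 on GPIO 14/15) to the serial header.

Remove console=serial0,115200 from /boot/cmdline.txt so Linux no longer uses the serial port as a login console.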
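The cmdline.txt edit can also be scripted. This minimal Python sketch (the function name `strip_serial_console` is hypothetical) drops any `console=serial0,...` token and keeps everything else, including `console=tty1`. The kernel command line must stay on a single line, which is why the tokens are re-joined with spaces. The config.txt lines and the `systemctl` step above are still done separately.

```python
from pathlib import Path


def strip_serial_console(cmdline: str) -> str:
    """Remove console=serial0,<baud> so the UART is free for MAVLink."""
    kept = [tok for tok in cmdline.split() if not tok.startswith("console=serial0")]
    # cmdline.txt must remain a single line terminated by one newline.
    return " ".join(kept) + "\n"


if __name__ == "__main__":
    path = Path("/boot/cmdline.txt")  # run with sudo
    path.write_text(strip_serial_console(path.read_text()))
```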

ArduPilot parameters

Set the following, where x is the FC serial port the Pi is connected to:

SERIALx_BAUD = 921600

VISO_TYPE = 1

VISO_DELAY_MS = 50

EK3_SRC1_POSXY = 6

EK3_SRC1_YAW = 6

EK3_SRC1_VELXY = 6

EK3_SRC1_VELZ = 6
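To avoid typing these by hand, a small Python sketch can emit them as a parameter file with one `NAME,VALUE` line per parameter, the comma-separated layout Mission Planner saves; check that your ground station loads it. `SERIAL_PORT = 2` is a hypothetical choice (TELEM2 is SERIAL2 on many boards), so set it to the port you actually wired. The value 6 selects ExternalNav for each EKF3 source, and VISO_TYPE = 1 selects MAVLink visual odometry.

```python
# Hypothetical: the FC serial port wired to the Pi.
SERIAL_PORT = 2


def vio_params(serial_port: int) -> dict[str, int]:
    """Parameters from this post, with the serial port number filled in."""
    return {
        f"SERIAL{serial_port}_BAUD": 921600,
        "VISO_TYPE": 1,        # visual odometry arrives over MAVLink
        "VISO_DELAY_MS": 50,   # assumed lag of the VIO estimate, in ms
        "EK3_SRC1_POSXY": 6,   # 6 = ExternalNav
        "EK3_SRC1_YAW": 6,
        "EK3_SRC1_VELXY": 6,
        "EK3_SRC1_VELZ": 6,
    }


def to_param_file(params: dict[str, int]) -> str:
    """Render NAME,VALUE lines."""
    return "".join(f"{name},{value}\n" for name, value in params.items())


if __name__ == "__main__":
    with open("vio.param", "w") as f:  # hypothetical output file name
        f.write(to_param_file(vio_params(SERIAL_PORT)))
```

Reboot the flight controller after loading, since VISO_TYPE is normally only read at startup.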

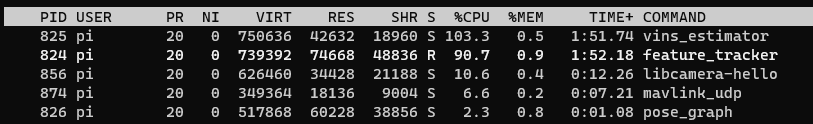

Start VINS-Mono

roslaunch vins_estimator chobits.launch
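VINS-Mono reports poses in a gravity-aligned world frame with z pointing up, while ArduPilot's EKF works in NED (z down). The post does not say whether the modified VINS-Mono, the proxy, or the modified ArduPilot does this conversion, so the following Python sketch only illustrates the common ENU-to-NED mapping, assuming the VINS world frame is treated as ENU. It covers position/velocity vectors and yaw; a full attitude conversion needs a quaternion rotation and is not shown.

```python
import math


def enu_to_ned(v: tuple[float, float, float]) -> tuple[float, float, float]:
    """ENU (x east, y north, z up) -> NED (x north, y east, z down)."""
    east, north, up = v
    return (north, east, -up)


def enu_yaw_to_ned(yaw_enu: float) -> float:
    """ENU yaw (CCW from east) -> NED yaw (CW from north), wrapped to [-pi, pi]."""
    yaw = math.pi / 2 - yaw_enu
    return math.atan2(math.sin(yaw), math.cos(yaw))
```

Because yaw comes from the VIO (EK3_SRC1_YAW = 6), heading is relative to where VINS-Mono initialized rather than to magnetic north.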

Start video

libcamera-apps/build/libcamera-hello --viewfinder-mode 640:400:8 --vflip --hflip --framerate 20 -t 0

There is no auto-exposure control for the OV9281, so you may need to adjust the gain manually to suit your lighting.
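One way to pick a gain is a simple proportional step on the mean brightness of a frame. The Python sketch below is a hypothetical helper, not part of libcamera-apps. It assumes brightness scales roughly linearly with gain below saturation, and the target level (110 of 255), the gain limits (1 to 16), and the per-step limit are illustrative values, not OV9281 specifications. The resulting value could then be passed to libcamera-hello's `--gain` option. Raising gain rather than exposure time is usually preferable for VIO, because longer exposures add motion blur.

```python
def mean_level(frame: bytes) -> float:
    """Mean pixel value of an 8-bit grayscale frame."""
    return sum(frame) / len(frame)


def next_gain(level: float, gain: float, target: float = 110.0,
              lo: float = 1.0, hi: float = 16.0, max_step: float = 1.5) -> float:
    """One proportional update of analogue gain toward the target brightness."""
    ratio = target / max(level, 1.0)                   # avoid dividing by zero on black frames
    ratio = min(max(ratio, 1.0 / max_step), max_step)  # limit the change per step
    return min(max(gain * ratio, lo), hi)              # stay within the allowed gain range
```

Limiting each step keeps the image from oscillating between too dark and too bright when the scene changes suddenly.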

Start mavlink-udp-proxy

Just run mavlink_udp.
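When debugging the link, it helps to confirm which MAVLink messages actually pass through the proxy (for example VISION_POSITION_ESTIMATE, message ID 102). This Python sketch decodes MAVLink v2 frame headers from raw bytes captured on the UDP or serial side; `iter_frames` and `Header` are hypothetical names. It follows the v2 layout (start byte 0xFD, 10-byte header, 2-byte checksum, 13-byte signature when incompat flag 0x01 is set) but does not verify the CRC, so a stray 0xFD in the data can occasionally mis-frame.

```python
import struct
from typing import Iterator, NamedTuple


class Header(NamedTuple):
    length: int
    seq: int
    sysid: int
    compid: int
    msgid: int


def iter_frames(buf: bytes) -> Iterator[Header]:
    """Yield the header of each complete MAVLink v2 frame in buf."""
    i = 0
    while i + 10 <= len(buf):
        if buf[i] != 0xFD:
            i += 1  # not a start byte: resynchronize
            continue
        length, incompat, _compat, seq, sysid, compid = struct.unpack_from("<6B", buf, i + 1)
        msgid = buf[i + 7] | (buf[i + 8] << 8) | (buf[i + 9] << 16)  # 24-bit little endian
        total = 10 + length + 2 + (13 if incompat & 0x01 else 0)
        if i + total > len(buf):
            break  # incomplete frame, wait for more data
        yield Header(length, seq, sysid, compid, msgid)
        i += total
```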