@amilcarlucas just a quick update on the Xavier tests: I have performance issues with the OpenCV releases when building VINS-Fusion on JetPack 5.1. The easiest fix would be rolling back to the previous JetPack, but I prefer resolving the conflicting package, and that may take some time.

Hi, that’s interesting.

I’m planning to use the Raspberry Pi Global Shutter Camera (IMX296) and found two ways to achieve this in the documentation.

Hello @chobitsfan

Working with the Orange Pi Zero 2W and an externally triggered USB OV9281, I get an average odometry rate of 6 Hz. What is the rate of your configuration?

Another question: do you consume the MOCAP signal?

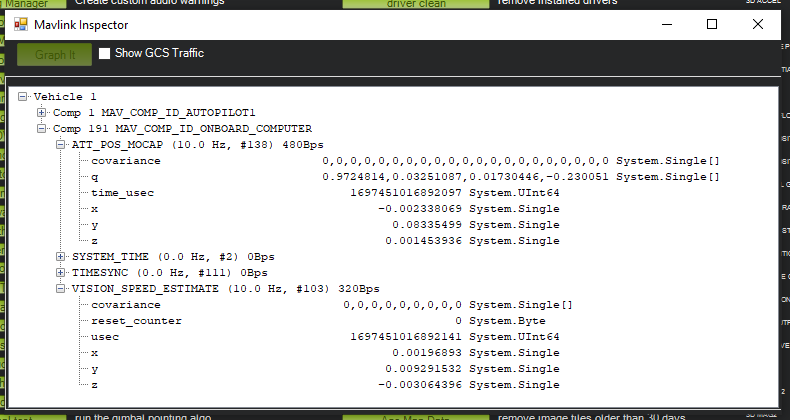

This is what I receive in the MAVLink Inspector.

Hello @ppoirier

Working with the Orange Pi Zero 2W and an externally triggered USB OV9281, I get an average odometry rate of 6 Hz. What is the rate of your configuration?

10 Hz on my Pi 4. It is configured in VINS-Mono/config/chobits_config.yaml (commit 4cdd706fb65b4aafb0d8b70e6d3cf39b5ae7c75a of chobitsfan/VINS-Mono on GitHub).
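To compare figures like 6 Hz and 10 Hz fairly, it helps to measure the rate the same way on both setups. A minimal sketch, assuming you have already collected the odometry message timestamps in seconds (for example from the ROS header stamps); the function name `odometry_rate_hz` is hypothetical:

```python
from statistics import mean


def odometry_rate_hz(stamps):
    """Return (average rate in Hz, worst gap in seconds) for sorted timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    # Time between consecutive messages
    gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
    return 1.0 / mean(gaps), max(gaps)


# Hypothetical stamps from a roughly 10 Hz stream with one late message
stamps = [0.0, 0.1, 0.2, 0.35, 0.45]
rate, worst_gap = odometry_rate_hz(stamps)
print(f"{rate:.1f} Hz, worst gap {worst_gap * 1000:.0f} ms")
```

The worst gap is worth watching alongside the average, since during a long pause in vision data the EKF has only the IMU to go on.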

Do you consume the MOCAP signal?

I am sorry, but I do not understand what you mean.

Hi @ppoirier & @chobitsfan

Thank you so much for the wonderful work. I really want to try non-GPS flying using the Pi camera facing downward. I think in this case I don’t need to change anything on the ArduPilot side. To fly indoors (without GPS), I need to calculate the ground drift velocity (x and y); the distance to the ground can be measured with a TF-Mini.

Can you please point me in the right direction? I want to know which library I should try so that it works like the other available optical flow sensors. As far as I know, there are many ways to calculate the drift velocity, such as OpenCV, optical flow, ROS, VIO, and others. Which way would be best? I am really new to this domain but trying my best to explore, so sorry if I have written something wrong or my understanding is not correct.

I think ArduPilot fuses the IMU and other data with the drift velocity estimate to get a good estimate, so I don’t have to write code for that. I just need to write the code that estimates the ground drift from the Pi camera data and then feed it to ArduPilot.

Really looking forward to your valuable response. Thanks!
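For reference, the basic relation behind downward-facing optical flow is that ground speed equals the translational part of the angular image motion multiplied by the height above ground. The sketch below assumes flat ground, a camera pointing straight down, a known focal length in pixels, a rangefinder distance such as the TF-Mini reading, and a gyro rate already mapped onto the image axes; the function and parameter names are hypothetical, and the sign conventions need checking against your own mounting:

```python
import math


def ground_velocity(flow_px, dt, focal_px, height_m, sweep_rate=(0.0, 0.0)):
    """Estimate camera velocity over flat ground (m/s) along the two image axes.

    flow_px:    mean feature shift (du, dv) in pixels between two frames
    dt:         time between the frames in seconds
    focal_px:   focal length in pixels
    height_m:   distance to the ground, e.g. from a rangefinder
    sweep_rate: rate (rad/s) at which rotation sweeps the optical axis along
                each image axis, derived from the gyro (mapping is an assumption)
    """
    if dt <= 0 or focal_px <= 0:
        raise ValueError("dt and focal_px must be positive")
    velocity = []
    for shift, omega in zip(flow_px, sweep_rate):
        angular_flow = math.atan2(shift, focal_px) / dt  # image motion in rad/s
        # Ground features move opposite to the camera: flow = -(v / h + omega)
        velocity.append(height_m * (-angular_flow - omega))
    return tuple(velocity)


# Features shifted 12 px back along u and 4 px along v in 50 ms, at 1.5 m height
print(ground_velocity((-12.0, 4.0), 0.05, 500.0, 1.5))
```

Removing the rotation term is what keeps a pure yaw or tilt from being read as translation; without it, every attitude change shows up as drift.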

@chobitsfan looking at the MAVLink Inspector, the message is ATT_POS_MOCAP.

With VISO we generally expect VISION_POSITION_ESTIMATE, but I know you did a lot of work with MOCAP, so I guess the message is compatible and can be used as is, without any transformation?

@scientist_bala, can you open a new thread, please?

Your questions are interesting but not related to VINS-Mono.

Hi @ppoirier

Yes, ArduPilot can process either ATT_POS_MOCAP or VISION_POSITION_ESTIMATE.
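Both messages carry the same pose, encoded differently: ATT_POS_MOCAP takes an attitude quaternion (w, x, y, z), while VISION_POSITION_ESTIMATE takes roll, pitch, and yaw in radians. A minimal sketch of building either field set from one VIO pose, assuming the VIO output is x-forward and z-up (a ROS FLU-style frame) and that a 180-degree flip about x is the transform you want; that frame assumption and the helper names are mine, not taken from chobitsfan's code:

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float   # position in metres
    y: float
    z: float
    qw: float  # orientation quaternion (w, x, y, z)
    qx: float
    qy: float
    qz: float


def flip_z_up_to_z_down(p: Pose) -> Pose:
    """Rotate world and body frames 180 degrees about x (FLU -> FRD style).

    Assumption: the VIO pose is x-forward, z-up. Conjugating by the
    x-axis half-turn keeps qw and qx and negates qy and qz.
    """
    return Pose(p.x, -p.y, -p.z, p.qw, p.qx, -p.qy, -p.qz)


def quat_to_euler(qw, qx, qy, qz):
    """ZYX (yaw-pitch-roll) Euler angles in radians from a unit quaternion."""
    roll = math.atan2(2 * (qw * qx + qy * qz), 1 - 2 * (qx * qx + qy * qy))
    sin_pitch = max(-1.0, min(1.0, 2 * (qw * qy - qz * qx)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2 * (qw * qz + qx * qy), 1 - 2 * (qy * qy + qz * qz))
    return roll, pitch, yaw


def att_pos_mocap_fields(p: Pose, time_usec: int) -> dict:
    """Field values for ATT_POS_MOCAP (quaternion attitude)."""
    return {"time_usec": time_usec, "q": [p.qw, p.qx, p.qy, p.qz],
            "x": p.x, "y": p.y, "z": p.z}


def vision_position_estimate_fields(p: Pose, usec: int) -> dict:
    """Field values for VISION_POSITION_ESTIMATE (Euler attitude)."""
    roll, pitch, yaw = quat_to_euler(p.qw, p.qx, p.qy, p.qz)
    return {"usec": usec, "x": p.x, "y": p.y, "z": p.z,
            "roll": roll, "pitch": pitch, "yaw": yaw}


# Example: 1 m forward, 2 m left, 3 m up, yawed 90 degrees to the left (z-up frame)
vio = Pose(1.0, 2.0, 3.0, math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
ned_like = flip_z_up_to_z_down(vio)
print(vision_position_estimate_fields(ned_like, 0))
```

After the flip, a left turn in the z-up frame becomes a negative yaw, which matches the z-down convention where positive yaw turns right.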

@chobitsfan I would like some advice on connecting Semi-Direct Visual Odometry (SVO) with ArduPilot. For hardware, I am using a Jetson Orin Nano and an HQ 477 monocular camera. How can I achieve this?

Hi @ppoirier and @chobitsfan. I’m planning on doing something similar with OpenVINS and the Luxonis OAK-D Wide camera. Unfortunately, I’m having some latency issues and would like your advice on the matter:

- When using VISO, how much latency is too much?

- What is the IMU frequency of the VIO system you’re using here?

- What is the frequency of the pose you’re obtaining on this setup?

Thank you very much.

What is the IMU frequency of the VIO system you’re using here?

100 Hz

What is the frequency of the pose you’re obtaining on this setup?

20 Hz
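At these rates there are about 100 / 20 = 5 IMU samples per pose. On the latency question, ArduPilot exposes the vision delay through the `VISO_DELAY_MS` parameter, so a practical first step is to measure it. A rough sketch, assuming capture and arrival times are taken on the same clock; the helper name is hypothetical, and the 0 to 250 ms clamp reflects my understanding of the parameter's range, so check the parameter docs:

```python
from statistics import median


def suggest_viso_delay_ms(samples):
    """Median latency (ms) and a rounded VISO_DELAY_MS candidate.

    samples: (capture_time_s, arrival_time_s) pairs taken on the same clock,
    e.g. image capture time vs. the time the pose message is sent to the autopilot.
    """
    if not samples:
        raise ValueError("need at least one sample")
    latencies_ms = [(arrival - capture) * 1000.0 for capture, arrival in samples]
    med = median(latencies_ms)
    # Clamp to the range I believe VISO_DELAY_MS accepts (check the parameter docs)
    return med, max(0, min(250, round(med)))


samples = [(0.00, 0.062), (0.05, 0.108), (0.10, 0.171)]
latency, delay_param = suggest_viso_delay_ms(samples)
print(f"median latency {latency:.0f} ms -> try VISO_DELAY_MS = {delay_param}")
```

The median is used so that a single stalled frame does not skew the estimate.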

Thanks @chobitsfan for your contributions.

I am doing a similar setup with OpenVINS.

Q1: I would like to know how you managed to install or build ROS Noetic on Bullseye. Also, can ROS Noetic be installed on Raspberry Pi OS Bookworm?

Q2: How do you publish the camera images as a ROS topic? Are you using a custom ROS package to bridge libcamera and ROS?

Thanks

I would like to know how you managed to install or build ROS Noetic on Bullseye.

Upstream Debian has its own port of ROS.

How do you publish the camera images as a rostopic?

Hey @chobitsfan, @ppoirier,

First, thanks for your contributions, and congrats on a great achievement.

I have a question…

I have replicated this setup and completed the ground test for VINS-Mono. (I used Buster, not Bullseye.)

For your setup, do you use a custom forked repo of ego-planner or the default one?

You haven’t mentioned any details about ego-planner in your thread.

I’m concerned about potential build and compute incompatibilities of ego-planner on Raspberry Pi OS.

do you use a custom forked repo of ego-planner

Yes, I modified it slightly.

Hey @chobitsfan, @ppoirier,

Thanks for the reply. I have replicated your setup exactly. The only difference is that I used Buster instead of Bullseye, as I was facing some issues with ROS. Thanks again for sharing this setup.

I ran into some issues and I was hoping you could help me with them.

- Position hold with VINS-Mono is good except when I yaw: it loses position as soon as I yaw, even slowly. I’ve made sure to keep the environment well lit and feature rich, but position hold is lost every time.

- When using ego-planner, I can visualize the point cloud in RViz (master-slave ROS communication with a base station laptop). Once I switch to GUIDED mode (for ego-planner), the drone moves to a fixed point (x = 5, z = -1), which I can see in the MAVLink Inspector via the TARGET-SETPOINT message type. This happens regardless of the point I choose in RViz: the drone tries to yaw towards the chosen point but then ends up going to the same fixed point.

I am actively testing the system and will implement any suggestions or changes quickly. Could you please suggest how to solve these issues?

Thank you

Once I switch to GUIDED mode (for ego-planner), the drone moves to a particular point (x = 5, z = -1)

I think you can configure it in the ego-planner launch file: set flight_type to 1.

Thank you for the response, @chobitsfan. flight_type was already 1, but the drone still went to the same target. We tested both flight types; with flight_type = 2 the drone still did not go to the waypoints defined in the launch file.

I have another question: where do you pass the target setpoint command back to ArduPilot?

Any suggestions for a ROS 2 Humble setup?

Is OpenCV part of the setup? I have trouble getting the OV5647 working with OpenCV 4.x. I am using Ubuntu Jammy 64-bit for Tier 1 ROS 2 support.

Hello!

How did you manage to pass the ego-planner results to ArduPilot? In your repository I only see a socket, but it’s not clear to me where the results are forwarded.
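The thread does not show how the socket in chobitsfan's repository hands the goal to ArduPilot, so this is only one common approach: in GUIDED mode, send SET_POSITION_TARGET_LOCAL_NED with a `type_mask` that ignores velocity, acceleration, and yaw rate. A sketch of building the message fields from a planner goal, assuming the goal uses the same x-forward, z-up frame as the VIO pose and the same 180-degree flip about x; the helper names are hypothetical:

```python
import math

# MAVLink POSITION_TARGET_TYPEMASK bits: a set bit means "ignore this field".
IGNORE_VELOCITY = 0b111 << 3      # vx, vy, vz
IGNORE_ACCELERATION = 0b111 << 6  # afx, afy, afz
IGNORE_YAW_RATE = 1 << 11
POSITION_AND_YAW = IGNORE_VELOCITY | IGNORE_ACCELERATION | IGNORE_YAW_RATE  # 2552

MAV_FRAME_LOCAL_NED = 1


def wrap_pi(angle):
    """Wrap an angle in radians to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def position_target_fields(goal_x, goal_y, goal_z, goal_yaw, time_boot_ms=0,
                           target_system=1, target_component=1):
    """SET_POSITION_TARGET_LOCAL_NED field values for a z-up planner goal.

    Assumption: the goal uses the same x-forward, z-up frame as the VIO pose,
    so the same 180-degree flip about x is applied (y, z and yaw negated).
    """
    return {
        "time_boot_ms": time_boot_ms,
        "target_system": target_system,
        "target_component": target_component,
        "coordinate_frame": MAV_FRAME_LOCAL_NED,
        "type_mask": POSITION_AND_YAW,
        "x": goal_x, "y": -goal_y, "z": -goal_z,
        "vx": 0.0, "vy": 0.0, "vz": 0.0,
        "afx": 0.0, "afy": 0.0, "afz": 0.0,
        "yaw": wrap_pi(-goal_yaw), "yaw_rate": 0.0,
    }


fields = position_target_fields(2.0, 1.0, 1.5, 0.0)
print(fields["type_mask"], fields["x"], fields["y"], fields["z"])
```

With pymavlink, these values map positionally onto `mav.set_position_target_local_ned_send(...)`. Whatever frame convention you choose, the setpoints have to be expressed in the same frame as the vision pose you send; otherwise the vehicle will fly to the wrong place.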