Thank you @LuckyBird.

@LuckyBird, @ppoirier, can you please describe the outdoor test in detail, i.e. the height and distance of the bird from home? I would also like to know whether this methodology can be used for navigation (without GPS) over long distances, such as 3-5 km, and at heights of about 500-600 m. What would be the worst-case scenario, i.e. the navigation error over long-distance flights if the sensors (gyros) drift? Your guidance in this regard will be highly appreciated.

Thank you

Regards

Yasir Khizar

Hi @Yasir_khizar,

- Regarding the outdoor test conducted by @ppoirier, I think he can fill you in on the specific details.

- For such a large-scale scenario, the T265, or any other vision-based SLAM technique, might not be a good choice as your primary localization input. Many outdoor conditions would very likely render the system unusable (low-texture scenes, high dynamic range, motion blur, and repeated patterns, to name a few).

- Even when it works, in such large-scale scenarios you should expect issues with drift, output latency, or incorrect scale (see 1, 2, 3 and 4 for example issues).

- The worst-case scenario, I believe, comes from abrupt changes in the localization, such as when tracking is lost and then comes back, or when the pose jumps around (in a loop-closure scenario). That data is fed into the vehicle controller or any high-level application, which then produces unwanted behavior on the vehicle. Making a system that is robust to this kind of wrong input data and unexpected failure is quite a challenge.
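To make the "abrupt changes" point concrete, here is a minimal sketch of a sanity check that flags pose samples implying an implausible speed. It is not what the T265 script or ArduPilot does; the function name `find_pose_jumps`, the 5 m/s threshold, and the sample data are all assumptions for illustration.

```python
import math

def find_pose_jumps(samples, max_speed_mps=5.0):
    """Return indices of samples whose implied speed since the previous
    sample exceeds max_speed_mps (hypothetical threshold).

    samples: list of (t_seconds, x, y, z) tuples, positions in metres.
    """
    jumps = []
    for i in range(1, len(samples)):
        t0, x0, y0, z0 = samples[i - 1]
        t1, x1, y1, z1 = samples[i]
        dt = t1 - t0
        if dt <= 0:
            jumps.append(i)  # non-increasing timestamp: treat as suspect
            continue
        dist = math.dist((x0, y0, z0), (x1, y1, z1))
        if dist / dt > max_speed_mps:
            jumps.append(i)
    return jumps

# Made-up data: 20 Hz poses with one relocalization-style jump.
poses = [
    (0.00, 0.00, 0.0, -1.0),
    (0.05, 0.02, 0.0, -1.0),
    (0.10, 2.50, 1.0, -1.0),  # sudden jump of about 2.7 m in 50 ms
    (0.15, 2.52, 1.0, -1.0),
]
jump_indices = find_pose_jumps(poses)
```

A check like this only detects the jump; what to do next (drop the sample, reset the estimator, hand control back to the pilot) is the hard part described above.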

The T265 is designed for indoor use in a richly textured environment. Using it outdoors requires that you don't expose it to direct sunlight and that the scene has high contrast and well-defined features.

I think you should reduce your expectations by a factor of 20 to make them realistic for the design profile: a 150-250 m perimeter at 25-30 m altitude.

More important is the lack of integration with the FC at the EKF level. For the moment, we are missing the parameters needed to implement robust fusion of vision estimates within the EKF. As a result, the EKF diverges easily and cannot recover gracefully, which forces the FC to switch to a lower level of control that holds attitude but carries over the bad horizontal and vertical estimates. This causes a sudden vehicle runaway from which you have to recover manually… sometimes in a cold sweat…
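For reference, the factor-of-20 figure applied to the requested 3-5 km and 500-600 m works out as follows (a trivial sketch; the variable names are only illustrative):

```python
SCALE_DOWN = 20  # reduction factor suggested in the post

# Requested envelope from the question, in metres.
requested = {"range_m": (3000, 5000), "altitude_m": (500, 600)}

# Divide both ends of each interval by the reduction factor.
realistic = {
    name: (low / SCALE_DOWN, high / SCALE_DOWN)
    for name, (low, high) in requested.items()
}
```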

@rmackay9 is aware of the limitations and working on a plan to better integrate external estimation system with the EKF.

@ppoirier, @LuckyBird, thanks for the replies. Can you point me to an INS that is sufficient on its own for outdoor navigation/guidance, i.e. without GPS? Also, have there been any implementations on ArduCopter of INS-based navigation without GPS? I have heard of IMU sensors such as Xsens that are good quality and can be used for navigation. Can you please advise on this matter?

Thanks in advance…

Regards

Yasir khizar

I suggest you open a new thread as we prefer to keep focus on T265 here.

@ppoirier wrote: "More important is the lack of integration with the FC at the EKF level. For the moment, we are missing the parameters needed to implement robust fusion of vision estimates within the EKF. As a result, the EKF diverges easily and cannot recover gracefully, which forces the FC to switch to a lower level of control that holds attitude but carries over the bad horizontal and vertical estimates. This causes a sudden vehicle runaway from which you have to recover manually… sometimes in a cold sweat…"

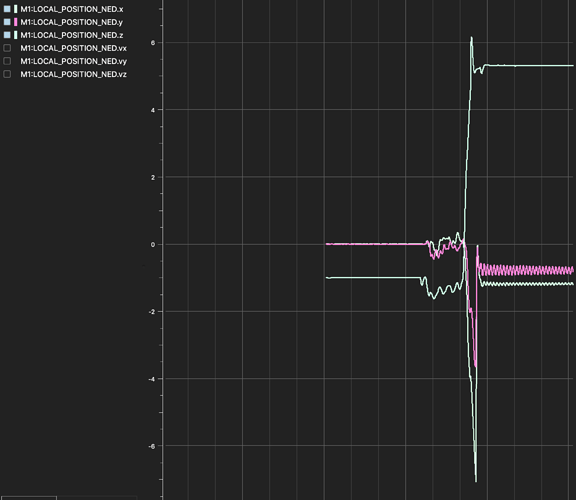

The T265 has been working relatively well for me, but there are a few cases where the flight controller position rapidly diverges from the position being sent by the T265. Is this what you are talking about? Here is one example:

In this particular case, it looks like x and y are corrected shortly after the glitch, but that isn't actually what happened. The T265 was still sending positions of roughly [0, 0, -1] at the end of that plot. Sometimes all dimensions get shifted by a huge constant value and stay there, despite continuous messages from the T265 that specify otherwise.

Yes, that's the issue. Sometimes it's the T265 doing its realignment following a loop closure in its internal SLAM; this can be disabled in the T265 script.

Sometimes it just gets lost and erroneously reports its position (kidnapped) a few meters away, which crashes the EKF because of the excessive variance.

Sometimes it just sends bad data while continuing to display 100% confidence.

In the case above, the T265 was actually sending good data the entire time; it was the flight controller that lost its position. I have pose jumping and relocalization turned off on the T265.

In either case, how are you guys planning to address it?

@dwiel just to be sure, can you also plot the T265 position data (VISO)? In my experience, the T265 data usually correlates with the observed local_position_ned data, provided there is no other positioning data.
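If plotting is inconvenient, the same comparison can be done numerically. Below is a minimal sketch that assumes the two position series have already been extracted from the log and time-aligned; the helper names, the 0.5 m threshold, and the numbers are made up for illustration and do not come from any ArduPilot tool.

```python
import math

def divergence(vision_xyz, fc_xyz):
    """Per-sample Euclidean distance (m) between two time-aligned position lists."""
    if len(vision_xyz) != len(fc_xyz):
        raise ValueError("series must be time-aligned and equally long")
    return [math.dist(v, f) for v, f in zip(vision_xyz, fc_xyz)]

def first_divergence_index(errors, threshold_m=0.5):
    """Index of the first sample whose error exceeds threshold_m, or None."""
    for i, err in enumerate(errors):
        if err > threshold_m:
            return i
    return None

# Made-up data: the camera keeps reporting roughly [0, 0, -1] while the
# flight controller estimate shifts by a constant offset and stays there.
vision = [(0.0, 0.0, -1.0)] * 4
fc = [(0.0, 0.0, -1.0), (0.1, 0.0, -1.0), (3.0, 4.0, -1.0), (3.0, 4.0, -1.0)]

errors = divergence(vision, fc)
onset = first_divergence_index(errors)
```

If `onset` points at a sample where the vision series itself is smooth, that suggests the estimator rather than the camera is the side that moved.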

At the moment there are developments on multiple fronts:

- It is now possible to use vision data with EKF3 and reported results seem ok so far. The EKF3 can also handle the position reset when it happens (the glitch).

- The position + attitude data (`VISION_POSITION_ESTIMATE` message) is just one possible type of data. With EKF3 you can also use `VISION_POSITION_DELTA` (changes in position and orientation, now supported by the T265 script and this cpp implementation), and maybe `VISION_SPEED_ESTIMATE` or `ODOMETRY` might be available soon.

If you can have a try, maybe one of these will give you better results.
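To illustrate the difference between absolute pose and delta data, here is a simplified 2D sketch that turns two consecutive absolute poses into a position and yaw change. It only shows the idea; the function name is hypothetical, the choice to express the translation in the current body frame is an assumption, and the actual message fields and frame conventions should be checked against the MAVLink definition and the T265 script.

```python
import math

def pose_delta_2d(prev, curr):
    """Change between two poses given as (x, y, yaw_rad) in a common world frame.

    Returns (dx_body, dy_body, dyaw), with the translation expressed in the
    current body frame (assumed convention for this sketch).
    """
    dx = curr[0] - prev[0]
    dy = curr[1] - prev[1]
    c, s = math.cos(curr[2]), math.sin(curr[2])
    # Rotate the world-frame displacement into the current body frame
    # (inverse of the yaw rotation).
    dx_body = c * dx + s * dy
    dy_body = -s * dx + c * dy
    # Wrap the yaw difference into (-pi, pi].
    diff = curr[2] - prev[2]
    dyaw = math.atan2(math.sin(diff), math.cos(diff))
    return dx_body, dy_body, dyaw

# Vehicle moved 1 m along world +y and is now facing world +y:
# in its own frame that is 1 m straight ahead.
delta = pose_delta_2d((0.0, 0.0, 0.0), (0.0, 1.0, math.pi / 2))
```

Because each delta is relative to the previous sample, a constant offset in the absolute pose does not show up in it, which is one reason delta data can behave differently after a position reset.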

Thanks! I don’t have the logs from that particular run anymore, but I will be sure to get logs the next time it happens.

I have been using a modified ArduCopter version 3.7.0 dev. I will rebase my changes onto version 4.0.3, though it looks like vision data with EKF3 is perhaps only in master/dev at the moment? Handling the position reset cleanly will definitely be an improvement.

Such an awesome project from you guys. I just started a new project and am currently ordering parts. I've got a few questions. What is the smallest board usable without image throughput?

Would a Pi Zero work? Otherwise, what about a USB stick board like the Intel Compute Stick CS125, which even has USB 3?

It's really good to see the support and detailed answers given here. I am OK with Python and Linux but completely new to drones and ArduPilot. If the Pi Zero doesn't work, I'll try the CS125 route.

I have a very small form factor and would love to use the Pi Zero because of its power consumption.

There are some problems you may encounter with the Pi Zero, as documented in these IntelRealSense/librealsense issues on GitHub: "T265 adding an auxiliary Serial & Power port for embedded systems." (Issue #4408) and "T265 - Illegal Instruction - Raspberry Pi Zero" (Issue #3490).

I believe the Compute Stick is supported, so long as you don’t plan to use 2 cameras at the same time.

The Banana Pi BPI-M2 Zero seems to work OK in @ppoirier's experience, so that's another option for you.

Very cool, building my parts list and going with the Banana first.

My use case is indoor. Basically, I want to fly a drone along a route manually and 'record' the path, and afterwards let the drone follow the exact same path at a given (slow) speed. I would think that with the T265 a lot of waypoints could be created. Is there ground control software that would make it easy to manage this? I don't need a full explanation, just a pointer so I don't have to try them all out. Are there any other bottlenecks you already see?

Mission Planner can cover most of your basic requirements: https://ardupilot.org/copter/docs/common-planning-a-mission-with-waypoints-and-events.html
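On the "a lot of waypoints" concern, one simple option is to thin the recorded path before turning it into a mission. The sketch below keeps a point only when it is at least a minimum distance from the last kept one. It is plain Python, not a Mission Planner feature; the function name and the 0.5 m spacing are assumptions.

```python
import math

def thin_path(points, min_spacing_m=0.5):
    """Keep a recorded point only if it is at least min_spacing_m away from
    the previously kept point; the first and last points are always kept."""
    if not points:
        return []
    waypoints = [points[0]]
    for p in points[1:]:
        if math.dist(waypoints[-1], p) >= min_spacing_m:
            waypoints.append(p)
    if waypoints[-1] != points[-1]:
        waypoints.append(points[-1])  # make sure the route ends where the recording did
    return waypoints

# Made-up recording: a 2 m straight line at 1 m height (NED z = -1),
# sampled every 10 cm.
recorded = [(0.1 * i, 0.0, -1.0) for i in range(21)]
waypoints = thin_path(recorded)
```

A fixed spacing cuts corners on tight turns, so a smaller spacing (or a curvature-aware method) may be needed where the route bends.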

Are there plans to build on the great work from last year using the T265, possibly with the RPi4? One thing I am not clear about: is ROS required? I just got a T265 and have built the libraries on an RPi4, but ROS is proving to be a little more challenging…

Support for the RPi4 is already detailed in this post. You can even use the provided APSync image to get set up fairly quickly.

No, ROS is not required at all. For your reference, here are the related wikis:

- Without ROS: Intel RealSense T265 — Copter documentation

- With ROS: ROS and VIO tracking camera for non-GPS Navigation — Dev documentation

Hope this helps.

Fantastic! Thank you for the great work!

Please Help!!!

Whenever I try to take off, my drone goes straight up very fast and ends up out of control. I cannot even land. I lost one drone like that outdoors. I am now testing with ropes connected diagonally to my drone, but the offboard takeoff is still too fast. Because of the fast movement, I get "SLAM Error Speed" at the ROS node, and I have had no luck for weeks. Please guide me.

Hardware & software details: Raspberry Pi 4 (8 GB), Intel RealSense T265 (USB 3.0), Pixhawk 2.4.8 (firmware FMUv3), Ubuntu MATE 20.04.2 LTS. Thanks in advance.

First of all, start a new thread. You need to test the copter manually before trying to control it via ROS.