Introduction

Hello everyone. Last year, we successfully test-flew our Nemo cargo drone, designed to ferry a 3kg vaccine box and other medical supplies over a delivery radius of 100-150km. We shared some of our experience and insights when we finally reached autonomous flight here. Following that milestone, we pushed on to validate the reliability of our system. Prior to the pandemic, we managed an unbroken count of 29 sorties on a single aircraft without invasive maintenance intervention. We define one sortie as one set of takeoff, flight and landing. Flight time varied from a few minutes to an hour. Time between sorties varied from 15 minutes to 1 hour, some with refuelling and some without, and with the payload on or off. We also managed to rehearse “remote” landing operations. This involved a recce, a pre-landing flight and a first landing flight at a spot far away in our test field, using only GPS coordinates from our smartphone to set the landing waypoint.

With a system designed for an operational range of 150km, the next step was to ensure that we could maintain reliable communication with the drone over that distance. Not surprisingly, conventional point-to-point radios such as the RFD900 wouldn’t cut it. Instead, for our first iteration, we employed a cellular data solution: forwarding MAVLink telemetry via a free-tier EC2 instance between the aircraft and a ground control station running MAVProxy.

Not so graceful but it works. For flight testing, this was good enough. It gave us low-latency, reliable data to monitor our drones in real-time, and our developers had no issues navigating through the terminal-heavy environment of MAVProxy.
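To make the relay idea concrete, here is a minimal sketch of what such a forwarding server could look like. This is not our actual EC2 setup: it assumes both ends speak plain UDP, and the `UdpRelay` class, its port arguments and the `step` method are hypothetical names made up for illustration. The relay simply remembers the last sender on each side and passes datagrams across unchanged.

```python
import select
import socket


class UdpRelay:
    """Hypothetical relay: forwards UDP datagrams between an air and a ground port."""

    def __init__(self, host="127.0.0.1", air_port=0, ground_port=0):
        # Port 0 lets the OS pick a free port; a real server would use fixed ports.
        self.air = self._bind(host, air_port)
        self.ground = self._bind(host, ground_port)
        # Last known sender on each side; traffic for that side is sent back to it.
        self.peers = {self.air: None, self.ground: None}

    @staticmethod
    def _bind(host, port):
        sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        sock.bind((host, port))
        return sock

    def step(self, timeout=1.0):
        """Relay whatever is readable right now; return the number of datagrams forwarded."""
        forwarded = 0
        readable, _, _ = select.select([self.air, self.ground], [], [], timeout)
        for src in readable:
            data, sender = src.recvfrom(65535)
            self.peers[src] = sender
            dst = self.ground if src is self.air else self.air
            # Drop the datagram if the other side has not been heard from yet.
            if self.peers[dst] is not None:
                dst.sendto(data, self.peers[dst])
                forwarded += 1
        return forwarded

    def close(self):
        self.air.close()
        self.ground.close()
```

In use, a server would call `step()` in a loop, with the aircraft and the ground station each sending to its own port. The payload is treated as opaque bytes, so the relay never needs to parse MAVLink itself.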

But in fast-paced medical delivery operations where efficiency is key, such a solution is too clunky. While there were GUI-based alternatives such as Mission Planner and QGroundControl, neither could fulfill Yonah’s operational requirements. These GCS were designed, largely, for the mission profile of a single aircraft and mostly surveillance-ish missions (i.e. take-off and landing from the same spot). Yes, there is stuff from FlytBase, but we didn’t have time to look too deep: pricing was hard to find, and we already had a critical mass of skill to work with ROS and customize it to our needs. As such, we looked towards designing a custom Ground Control Station that fulfilled the following:

- User-friendly: At the end of the day, the ground controllers only needed a subset of information and commands to do their job; access to all MAVLink data is not essential. An efficient, user-friendly interface was more important.

- Multi-aircraft handling: Provide the ability to monitor and control several aircraft from a single GCS

- Flexible, long-range comms: Provide reliable, long-range communication that could adapt to the hostile conditions of rural areas; for example, regions without cellular coverage.

After 3 months of programming amidst pandemic-induced measures, we are happy to release our beta version of Yonah’s OGC. We decided to share our insights, both to seek guidance from the open-source community and in the hope that our work might benefit someone out there working on similar projects - if not the code then hopefully the design insights for such a mission profile.

The full documentation of our code can be found on our GitHub Wiki. In today’s post, we provide a brief overview of the overall architecture as well as our insights, and will make frequent references to the documentation.

Target users

We set ourselves a two-stage goal for the development of our Ops Ground Control:

Stage 1: The OGC should be usable by any of Yonah’s team members, regardless of whether they were mechanical, electrical or software trained.

That meant that anyone with a basic engineering education, even if unfamiliar with software and terminal-based commands, should find our system simple to use.

This was based on the premise that Yonah’s field operations in the near future would be handled by our own engineers; they had to be comfortable with using our system with minimal help from the software team.

Stage 2: The OGC should be usable by non-engineering-trained personnel, such as a healthcare worker working in a rural hospital.

In the future, Yonah’s delivery services established in rural areas would be operated by the local community rather than our own engineers; we had to train the local populace how to operate our system. Not surprisingly, that called for a very user-friendly ground control system.

In this beta release, our efforts were geared towards the fulfillment of Stage 1.

Pre-requisites

We refer to the aircraft as the air side and the ground control station as the ground side

In our system, each aircraft operates a Cube Black Autopilot connected to a Beaglebone Black Industrial Companion Computer, which runs the bulk of the OGC software on the air side. The actual telemetry is handled by a RUT955 Cellular Router and a RockBLOCK 9603 modem. Because of its complexity and cost, we deliberated for a long time before deciding to include satellite communications in our system. Besides the RockBLOCK 9603, there was no other affordable choice for Short Burst Data service over Iridium’s network. Having observed the operational experience of other players (such as Zipline and Swoop), we concluded that we cannot run away from the satellite mode of communication.

Of course, having redundant modes of communication means little without redundant power feeding the communication hardware. That will be touched on in another post.

The ground side runs a corresponding RUT955 and Rockblock 9603, connected to any Linux-based laptop that runs the bulk of the OGC ground side software.

See the required hardware and software sections for a full breakdown of the packages that we use.

Architecture

Our system is designed around the ROS framework in a Linux environment, and interfaces with MAVLink-based autopilots through the MAVROS package.

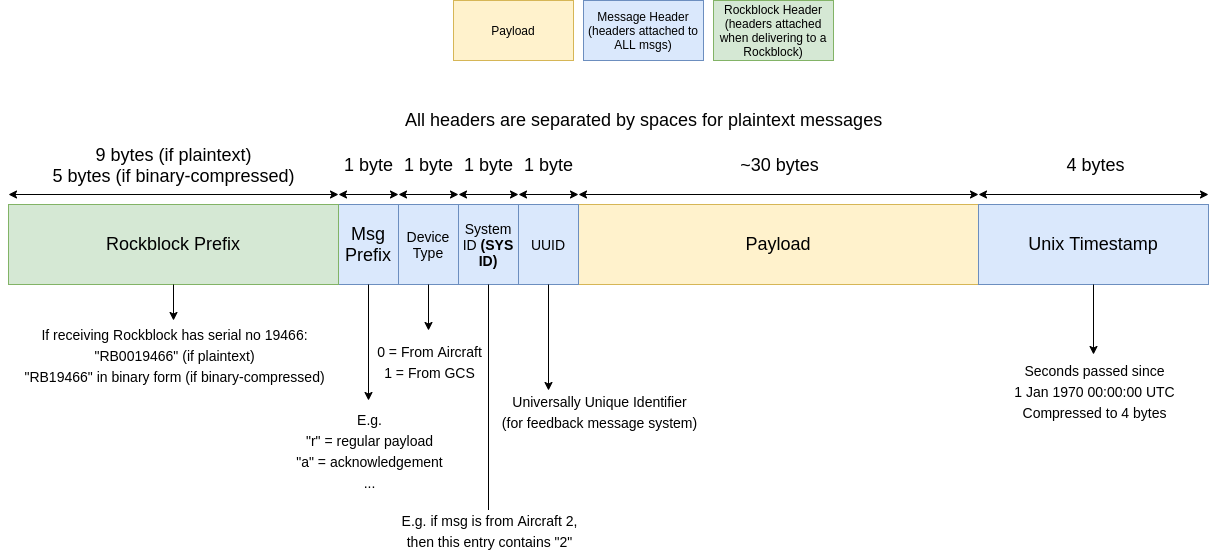

- Highlighted in green is the triple-redundant, dissimilar link system consisting of Cellular Data, SMS, and Satellite communication.

  - The Telegram package handles communication over cellular data, using the Telegram API. This is the primary, low-latency link of the system when there is internet access.

  - The SMS package handles communication over SMS. This serves as a medium-latency backup when internet access is down.

  - The SBD package handles communication via Iridium Short-Burst-Data (SBD) Satellite Telemetry. This is a high-latency backup when both internet and cellular connection are down.

- Highlighted in red are the despatcher packages. They serve as the “central unit” of the OGC system, where telemetry is consolidated to and from the three links.

  - Fun fact: The despatcher package was originally meant to be called “dispatcher”, but one of us misspelled it and found that it was a recognized word anyway; hence the name stuck.

- Highlighted in yellow are the endpoint packages: MAVROS for the air side, and RQT for the ground side

  - MAVROS bridges the gap between the autopilot and the OGC packages by converting MAVLink data into “ROS format”, and vice-versa. This allows us to pick and choose the subset of MAVLink data and commands that the system should have access to. The despatchers communicate with the autopilot via MAVROS topics and services.

  - The RQT is the ground-based Graphical User Interface which the ground operators use to operate the system.

- Highlighted in blue are miscellaneous packages which perform a variety of “back-end” work, such as filtering messages, checking for authorized devices, etc.
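The fallback order of the three links can be summed up in a few lines. The sketch below only illustrates the priority described above (Telegram, then SMS, then SBD). The `Link` enum and `select_link` function are hypothetical names, and the real despatcher logic is described in our documentation rather than here.

```python
from enum import Enum


class Link(Enum):
    TELEGRAM = "telegram"  # cellular data, low latency (primary)
    SMS = "sms"            # medium-latency backup
    SBD = "sbd"            # Iridium Short Burst Data, high-latency backup


def select_link(internet_up: bool, cellular_up: bool) -> Link:
    """Pick the lowest-latency link that is currently usable (illustrative only)."""
    if internet_up:
        return Link.TELEGRAM
    if cellular_up:
        return Link.SMS
    # Neither internet nor cellular service: fall back to satellite.
    return Link.SBD
```

For example, `select_link(internet_up=False, cellular_up=True)` returns `Link.SMS`. How link health is actually detected is left out of this sketch.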

Insights

Here are some takeaways that we had at the architectural level (more detailed insights will be covered in follow-up posts):

MAVROS is a flexible and robust package that served our needs, but it does not give us all the MAVLink info that we desire. For example, we would love a ROS topic giving the EKF status (similar to how Mission Planner displays the EKF status in a pop-up menu). That said, we currently have no bandwidth to consider messing around with MAVROS.

Developing the system in the middle of a pandemic, with limited access to the workshop, was a challenge. There were many times when we had to test the communication system remotely, with different hardware components in different teammates’ houses spread out across Singapore. At least, this was testament to the long-range nature of our system.

One thing that greatly accelerated our testing was the integration of ROS with SITL, which was a lot easier to test remotely than having to set up actual hardware.

Making something that works is the simple part. Making it simple to use is the difficult part.

Conclusion

This wraps up the introduction to Yonah’s Ops Ground Control (beta). We will describe our triple-redundant, dissimilar link system in greater detail in a follow-up post.

Our list of contributors can be found here

Signing out,

@disgruntled-patzer

@rumesh986

@huachen24

@ZeGrandmaster

Yonah