@chobitsfan, definitely sounds like something we need. I suspect we may need to put the delay parameter at a higher level but I’m just guessing. I’m at the HEX conference but I think we should try and get this into master next week somehow.

@chobitsfan and @rmackay9, why do you think a lag parameter is necessary for the vision system? I understood that the lag is calculated automatically by this method:

The linked method calculates the transport lag to correct the timestamp. Maybe this new lag parameter has another purpose?

@anbello This only compensates for link lag. The sensor lag can be many times this (the time it takes to capture the image, process it, analyse it, calculate the required data, and then send it to the link).

Thank you @fnoop, now I understand better. But if I put the capture timestamp of the image in the header of the vision position estimate message, shouldn't the aforementioned method account for all of the lag? Am I wrong?

No, it only deals with the transport/link lag, which is typically a physical link latency of a few ms. The lag of a vision sensor can be anywhere from 10 ms to 100 ms or much higher. With a fast onboard computer and a fast camera frame rate you may get a sensor lag as low as 10-20 ms. With e.g. a Raspberry Pi + raspicam it may well be as high as 200 ms, and it can vary from frame to frame anywhere between 50 ms and 200 ms depending on a variety of factors at any given moment.

Also, the link lag estimate converges on a sample of latencies over a period of time. I would have thought that with a vision-based estimation system you would want to take individual frame latencies into account rather than trying to smooth out the jitter. The higher the lag, the higher the jitter is likely to be.
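To make the link lag vs. sensor lag distinction concrete, here is a minimal Python sketch. It is not the ArduPilot or MAVROS code. It assumes the companion computer records a capture time and a send time for every frame, and that a smoothed link latency is already known. The names `Frame`, `sensor_lag_ms`, `measurement_time_ms` and all the numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    capture_ms: float  # companion clock when the image was captured
    sent_ms: float     # companion clock when the pose estimate was sent


def sensor_lag_ms(frame: Frame) -> float:
    """Per-frame sensor lag: capture plus processing time before sending."""
    return frame.sent_ms - frame.capture_ms


def measurement_time_ms(frame: Frame, arrival_ms: float, link_lag_ms: float) -> float:
    """Autopilot-clock time that the measurement actually refers to.

    arrival_ms is on the autopilot clock and link_lag_ms is the smoothed
    transport latency. The sensor lag is subtracted per frame instead of
    being averaged away.
    """
    return arrival_ms - link_lag_ms - sensor_lag_ms(frame)


# Two hypothetical frames captured 100 ms apart, with 50 ms and 200 ms sensor lag.
frames = [Frame(1000.0, 1050.0), Frame(1100.0, 1300.0)]
arrivals = [5053.0, 5303.0]  # hypothetical arrival times with a 3 ms link lag
times = [measurement_time_ms(f, a, 3.0) for f, a in zip(frames, arrivals)]
print(times)
```

With the per-frame correction the two measurements end up 100 ms apart again, matching the capture spacing. Correcting only for the 3 ms link lag would leave them 250 ms apart.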

Your system looks really cool, I’m looking forward to more details as it’s something I’ve had on my todo list to try for a long time

Thanks. Post #32 above has a link to some details of my system. I am waiting until I have enough time to put together all the necessary info and source (only a small diff vs. the aruco_mapping package) and publish it on GitHub and here as a post. I have very little time for this kind of work, but I will do it for sure.

In any case, I still have some problems to solve on my system, as you can see from the videos.

Ciao

You are using fake GPS msg, right?

I ask because I am doing the same thing, but position hold does not work well for me.

Can you please share the steps that you followed?

I created my ROS node to get the position and publish it as the HIL_GPS msg.

Which parameters should I change? Do I need to change something in mavros?

Your help really matters, thank you.

Hello Andrea,

I have finally started to experiment with aruco / vision_position_estimate.

I am using an Intel based Companion Computer with a calibrated USB Camera

Launch:

roslaunch usb_cam.launch

roslaunch aruco_gridboard detection.launch

roslaunch mavros apm.launch fcu_url:=/dev/ttyUSB0:115200

rosrun mavros mavsys rate --all 100

On my flight controller (BBBMINI):

sudo ./arducopter -C udp:192.168.7.1:14550 -D /dev/ttyO4

So the pipeline is: camera -> CC -> serial -> BBBMINI -> UDP -> Mission Planner

I connect to Mission Planner and set these:

AHRS_EKF_TYPE 2

EKF2_ENABLE 1

EKF3_ENABLE 0

GPS_TYPE 0

EK2_GPS_TYPE 3

COMPASS_USE 0

VISO_TYPE 0 (or 1? I tried both)
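For anyone who wants to check these settings against a saved parameter dump, here is a small Python sketch. It only compares values. How the current values are read (a parameter file, MAVLink, etc.) is outside the sketch, and the names `DESIRED` and `params_to_change` are hypothetical. VISO_TYPE is left out on purpose because the right value was still uncertain at this point.

```python
# Values as listed above. VISO_TYPE is omitted because it was still unclear
# whether it should be 0 or 1.
DESIRED = {
    "AHRS_EKF_TYPE": 2,
    "EKF2_ENABLE": 1,
    "EKF3_ENABLE": 0,
    "GPS_TYPE": 0,
    "EK2_GPS_TYPE": 3,
    "COMPASS_USE": 0,
}


def params_to_change(current: dict) -> dict:
    """Return the desired values that differ from (or are missing in) current."""
    return {name: value for name, value in DESIRED.items()
            if current.get(name) != value}


# Hypothetical current values, e.g. parsed from a saved parameter file.
current = {"AHRS_EKF_TYPE": 2, "EKF2_ENABLE": 1, "EKF3_ENABLE": 0,
           "GPS_TYPE": 1, "COMPASS_USE": 1}
todo = params_to_change(current)
print(todo)
```

Here `todo` contains GPS_TYPE, EK2_GPS_TYPE (missing from the dump) and COMPASS_USE, i.e. only the values that still need to be written.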

I set the Home/EKF home and get the vehicle displayed

I can get good movement correlation on display when moving my quad-camera

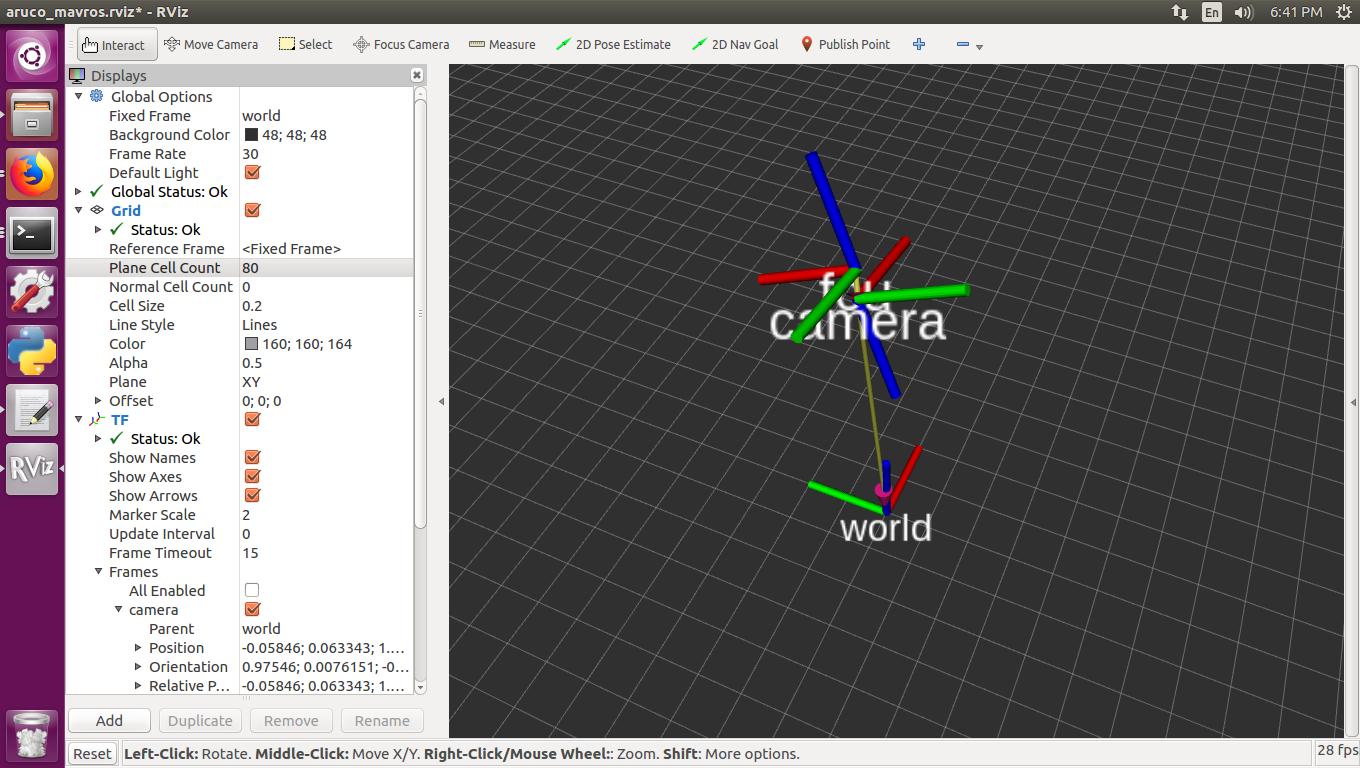

But I cannot read the mavros local pose in RVIZ

I have WORLD as the reference frame, so I guess I am missing a couple of transforms here…

OK found it here

/launch/ detection_rpi.launch

Have you made the complete TF for your frame ?

No. To be honest, I do not understand all the TF stuff in ROS well yet; I have to study it more.

Anyway, I added an offset parameter for the camera but have not pushed it yet. I will do so ASAP.

[EDIT]

VISO_TYPE 0

OK good, I might make one for my frame and will post an update here.

BTW Andrea, I added the missing debug logic for the display parameter in detection.launch.

Modified node.cpp, line 408:

    // Only open the OpenCV window when the debug display parameter is set
    if (debug_display_) {
        cv::imshow(OPENCV_WINDOW, imageCopy);
        cv::waitKey(2);
    }

This makes it easier to switch the display on/off.

Well, that was a nice Sunday experiment.

Here is the Launch config

…lol it does not read xml !!!

Local origin to world

node pkg="tf" type="static_transform_publisher" name="tf_local_origin" args="0 0 0 0 0 0 world local_origin 100"

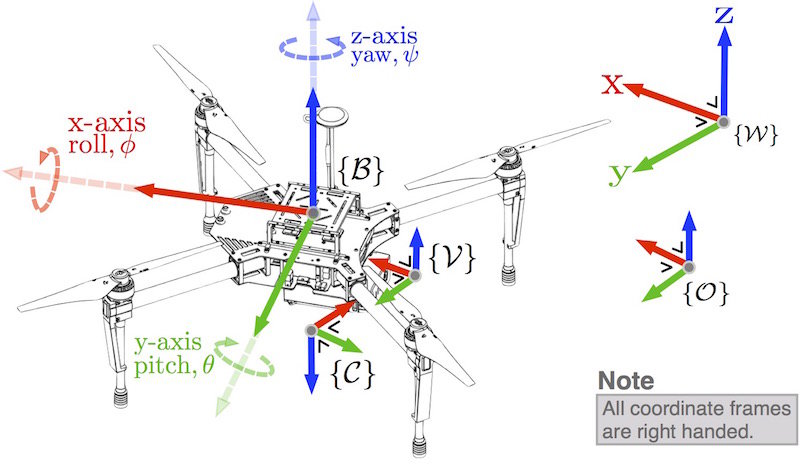

Rotate the IMU in reference to camera

arg name="pi/2" value="1.57079632"

arg name="imu_rotate" value="0.05 0 -0.08 $(arg pi/2) 3.1415926

node pkg="tf" type="static_transform_publisher" name="camera_fcu" args="$(arg imu_rotate) camera fcu 100"

according to this:

Please note that I have a forward-facing camera setup (not down-looking), which is why there is a rotation about the red axis. It still needs some tweaking, but I have the general setup working.
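As a sanity check on the rotation arguments, here is a minimal Python sketch of how the angle arguments of static_transform_publisher (given in the order x y z yaw pitch roll) turn into a rotation matrix. It assumes the usual convention that yaw is about Z, pitch about Y and roll about X, composed as Rz(yaw) * Ry(pitch) * Rx(roll). The helper names `ypr_to_matrix` and `rotate` and the test angle are made up for illustration and are not the values from my launch file.

```python
import math


def ypr_to_matrix(yaw: float, pitch: float, roll: float):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(matrix, vector):
    """Apply a 3x3 rotation matrix to a 3-vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Hypothetical check: a pure yaw of pi/2 should turn the X axis into the Y axis.
R = ypr_to_matrix(math.pi / 2, 0.0, 0.0)
x_axis_rotated = rotate(R, [1.0, 0.0, 0.0])
print([round(c, 6) for c in x_axis_rotated])
```

Rotating the unit axes one at a time like this and comparing with what RViz shows is a quick way to find out which angle is wrong when the frames do not line up.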

This is not true: I already pushed the offset parameter commit, but only for the offset along the back-front axis (and I had forgotten that I had done it).