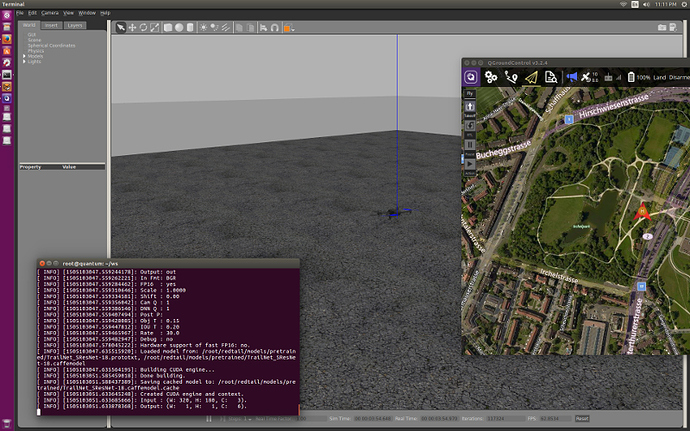

The recently released Redtail DNN framework running in a Docker container in Gazebo. Gotta get a joystick rigged up so I can fly it around a bit, but first, sleep. Then I need to pull that nasty PX4 stuff out of it and convert it to run on the new ArduRover.

I’ll be updating this as I make progress on it.

Awesome, Dan. ArduPilot/ArduRover + NVIDIA Jetson and Redtail DNN, that’s a win for sure!

Sounds really interesting.

Dan, have you found any pretrained models for a rover, or do you plan to build one from scratch using a camera rig similar to the one they used for TrailNet?

Going to try to use their pre-trained model at first. Since it’s low altitude, it just might work. However, I also started gathering video today with a rig I put on my mobility scooter, so I can make my own models and expand them to urban areas as well. Not everyone has a forest path to use.

The sim environment isn’t difficult to set up, but you really need to watch the versions of the libraries it asks you to load for CUDA support. The version of Ubuntu in the container is 14.04, and that is the version you need to grab libraries for, not the 16.04 they say to use for the host OS. As long as you get that right, the install is straightforward. I have DIGITS and the other software on that machine to create models as well.

I’m using 3 webcams and a TX1 with some open-source multi-camera video recording software to get the video for my models. Setting up a Jetson for inference can be difficult. Check out the dusty-nv GitHub site for a nice set of instructions and scripts for building the various software you will need: Torch, Caffe, TensorFlow, the full system. I heard that one of the devs is working on this for the flying bots as well. Getting it moved over to ArduPilot instead of PX4 is another task to complete. I have a little experience with that part now, so I might be able to get it done myself. The ROS part is small and easy to implement.

There are some scripts in the git repo for pulling the frames out of videos for DIGITS to use, calibrating the video setup, and taking the distortion out of the wide-angle video. Everything you need to do this is there. Just add hours and hours of futzing around to get it all set up, and you will have the latest and greatest on your bot. I like the futzing around part.
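For anyone wondering what "taking the distortion out" actually involves: below is a minimal sketch of the pinhole radial-tangential ("radtan") lens model that both OpenCV and Kalibr use by default, plus a fixed-point inverse that undistorts a single point. This is not the Redtail scripts’ code; the coefficient names (k1, k2, p1, p2, fx, fy, cx, cy) follow the usual calibration-file convention, and any numbers you plug in should come from your own calibration.

```python
def distort(x, y, k1, k2, p1, p2):
    """Apply radtan distortion to a normalized image point (x, y)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort(xd, yd, k1, k2, p1, p2, iterations=20):
    """Invert distort() by fixed-point iteration (similar in spirit to cv2.undistortPoints)."""
    x, y = xd, yd  # start from the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # Solve xd = x * radial + dx for x, holding radial/dx from the last guess.
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


def pixel_to_normalized(u, v, fx, fy, cx, cy):
    """Pixel coordinates -> normalized camera coordinates using the intrinsics."""
    return (u - cx) / fx, (v - cy) / fy


def normalized_to_pixel(x, y, fx, fy, cx, cy):
    """Normalized camera coordinates -> pixel coordinates using the intrinsics."""
    return fx * x + cx, fy * y + cy
```

To undistort a pixel, convert it to normalized coordinates, run `undistort`, and convert back. Fixed-point iteration converges quickly for moderate distortion; very wide fisheye lenses are usually better served by a dedicated fisheye/equidistant model instead.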

Hi Dan,

Glad to hear the setup process went well! Re: 16.04: we recommend using 16.04 as the host OS when setting up the GCS just because it’s the latest LTS version of Ubuntu, so people should hopefully have a better experience overall. But since we try to use Docker as much as we can, some of the Docker containers use 14.04 (e.g. ROS).

One more thing: we have experimental and very primitive support for rovers, which we tested on an Erle Robotics rover with APM. The “primitive” part basically means the vehicle is controlled via RC override messages rather than proper pose messages. Let me know if you are interested in it.
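To make "controlled via RC override messages" concrete, here is a rough sketch of turning a steering/throttle command in [-1, 1] into an RC-override channel list. It is not Redtail’s rover code. Assumptions: steering on RC channel 1 and throttle on channel 3 (ArduPilot’s usual RCMAP defaults), a 1100-1900 PWM range with 1500 as neutral, and an 8-channel `mavros_msgs/OverrideRCIn` message where 65535 means "leave this channel alone". Check all of these against your own vehicle and mavros version.

```python
# Value mavros_msgs/OverrideRCIn uses for "do not change this channel".
CHAN_NOCHANGE = 65535

# Assumed PWM limits; use your own RCn_MIN / RCn_TRIM / RCn_MAX values.
PWM_MIN, PWM_MID, PWM_MAX = 1100, 1500, 1900


def to_pwm(value):
    """Map a command in [-1, 1] to a PWM value, clamping out-of-range input."""
    value = max(-1.0, min(1.0, value))
    half_range = (PWM_MAX - PWM_MIN) / 2.0
    return int(round(PWM_MID + value * half_range))


def override_channels(steering, throttle, steer_ch=1, throttle_ch=3, n_channels=8):
    """Build an RC-override channel list (channel numbers are 1-based, like RC channels)."""
    channels = [CHAN_NOCHANGE] * n_channels
    channels[steer_ch - 1] = to_pwm(steering)
    channels[throttle_ch - 1] = to_pwm(throttle)
    return channels
```

On the vehicle, the resulting list would be assigned to `OverrideRCIn.channels` and published on `/mavros/rc/override` at a steady rate; keeping the math in a plain function like this makes it easy to test away from the robot.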

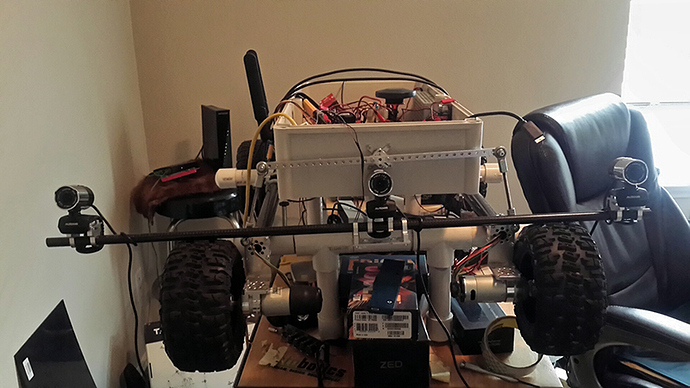

Yeah, very much so. I’m thinking of an even stranger method of getting the control messages to the PIX. This is for the new ArduRover. Getting the 3-camera rig set up now; I am doing it on a mobility scooter. I tried it with a single camera yesterday and it worked great. I’m using webcams and TX1s to record video; I had most of that stuff in my parts bin. I’ve done quite a bit of video in the past, getting cameras all lined up and so on, so that part should be pretty straightforward. The calibration looks very similar to ROS camera calibration, which I’ve done before. Yeah, I’m using the Docker container but also have it going on a native install now. I need more GPU for DIGITS, though. In the video, how do you get the colored display and the DNN meter at the bottom? I know the guy who does the ROS interface for the rover code. Maybe we can get this all working properly with APM. I’ve been playing with it a bit myself. Great job, I was looking for just such a package. I’ve been trying to get it all figured out, but having this as a roadmap will really speed things up.

DNN activation visualization is based on the VisualBackProp paper from NVIDIA. Though we haven’t shared the script in our official release, I think I’ve seen a PyTorch implementation of this technique. Alternatively, you can look at DNN activation visualization in DIGITS (check “Show visualizations and statistics” while testing on an image); it should provide some insight into what features are learned by different feature maps.
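For the curious, the core VisualBackProp idea fits in a few lines: average each conv layer’s feature maps into one map, then, starting from the deepest layer, scale the map up to the next shallower layer’s size and multiply it pointwise with that layer’s averaged map, repeating down to the shallowest layer. The sketch below uses plain Python lists so it runs anywhere; it is not NVIDIA’s script. One simplification is labeled plainly: the paper scales maps up with an all-ones deconvolution, while this sketch substitutes nearest-neighbour upsampling by an integer factor.

```python
def average_maps(feature_maps):
    """Average one layer's channels: list of HxW maps -> a single HxW map."""
    n = len(feature_maps)
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(fm[i][j] for fm in feature_maps) / n for j in range(w)] for i in range(h)]


def upsample(m, factor):
    """Nearest-neighbour upsampling of a 2D map (stand-in for the paper's deconvolution)."""
    return [[m[i // factor][j // factor] for j in range(len(m[0]) * factor)]
            for i in range(len(m) * factor)]


def multiply(a, b):
    """Pointwise product of two equally sized 2D maps."""
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def normalize(m):
    """Rescale a 2D map to [0, 1] for display."""
    lo = min(min(r) for r in m)
    hi = max(max(r) for r in m)
    span = (hi - lo) or 1.0
    return [[(x - lo) / span for x in r] for r in m]


def visual_backprop(layers):
    """layers: one list of feature maps per conv layer, shallowest first.
    Each layer's maps are assumed to be an integer multiple larger than the next layer's."""
    averaged = [average_maps(layer) for layer in layers]
    mask = averaged[-1]  # start from the deepest layer
    for shallower in reversed(averaged[:-1]):
        factor = len(shallower) // len(mask)
        mask = multiply(upsample(mask, factor), shallower)
    return normalize(mask)
```

In a real network you would pull the post-activation feature maps out of the framework, run this per frame, and overlay the (further upsampled) mask on the input image to get a colored display like the one in the video.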

The slider at the bottom was basically just a visualization of the final control output.

Thanks. Almost finished with the 3-camera rig. Should start gathering data in the next couple of days. I’m shooting a burst of 5 frames every second in case there are any misalignment, timing, or bad-picture problems. The TX1 handles 3 cams that way with no problem using fswebcam. When I check that box I get errors; something is still not right with CUDA/TensorFlow. Otherwise DIGITS works fine, although training is a very long process.

Just about ready to start gathering data now. I’m having the large target I need for calibration printed and mounted on foamboard. Finished up the camera mount. Added a 4th cam for video, since I’m shooting frames with the webcams (actually a burst of 5 once each second; there’s less noise when they are combined). Not sure if the speed will blur them; I might have to adjust that value. The TX1 is on a carrier board and grabs the data from the cams using a crontab script and the fswebcam utility.
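A capture loop along these lines can be sketched in Python by calling fswebcam once per camera each second. It relies on fswebcam’s `--frames` option, which captures several frames and averages them to reduce noise, matching the "burst of 5, combined" idea above. The device paths, resolution, and file naming are hypothetical placeholders, not the actual script used here. Because cron can’t schedule anything more often than once a minute, a common pattern is to have cron start a looping script like this (e.g. via an `@reboot` entry).

```python
import subprocess
import time
from pathlib import Path

# Hypothetical device paths for the three webcams; check `ls /dev/video*`.
DEVICES = ["/dev/video0", "/dev/video1", "/dev/video2"]


def capture_command(device, out_file, frames=5, resolution="1280x720"):
    """fswebcam call that grabs `frames` frames and averages them into one image."""
    return ["fswebcam", "-d", device, "-r", resolution,
            "--frames", str(frames), "--no-banner", str(out_file)]


def capture_loop(out_dir, period_s=1.0):
    """Capture from every camera once per period, forever (stop with Ctrl-C)."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    next_t = time.monotonic()
    shot = 0
    while True:
        # Launch all cameras together so the three views are close in time.
        procs = [subprocess.Popen(capture_command(dev, out_dir / f"cam{i}_{shot:06d}.jpg"))
                 for i, dev in enumerate(DEVICES)]
        for p in procs:
            p.wait()
        shot += 1
        # Keep a fixed cadence instead of sleeping a fixed time after each capture.
        next_t += period_s
        time.sleep(max(0.0, next_t - time.monotonic()))
```

Averaging more frames lowers noise but lengthens each exposure window, so on a moving platform `frames` is the knob to turn down if the images start to blur.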

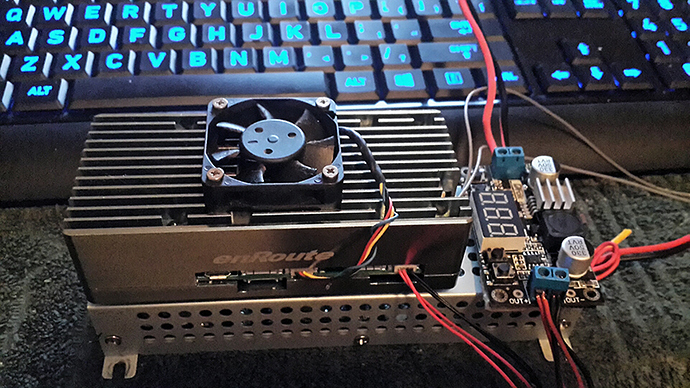

Using this computer setup to gather the data: a TX1, a buck converter, and a 5 V converter for the powered USB hub for the cameras.

ArduScooter! Look forward to seeing it working

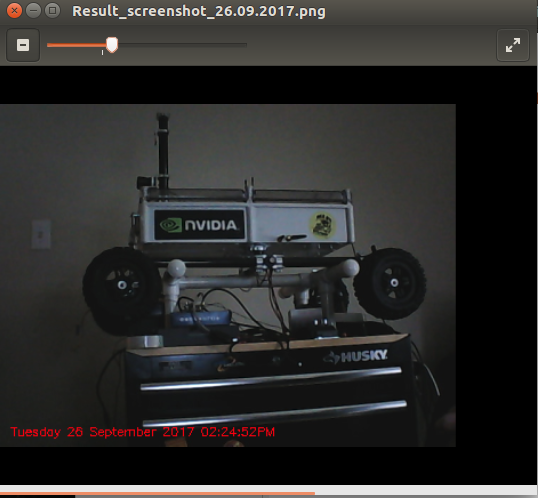

I got into robotics to make that scooter a self driver. But this is just for data gathering. The model is for these two.

Camera calibration is a real PITA. I found some better software than the commonly used OpenCV and ROS camera calibration setups; Kalibr is what I’m using now. It does multi-camera calibration and focus calibration, generates targets, and verifies that your YAML files are correct after the calibration. It also does camera/IMU calibration. It’s on GitHub.

https://github.com/ethz-asl/kalibr

Decided to use the aprilgrid target instead of the checkerboard. With aprilgrid there are no worries about the software mistaking the top for the bottom.
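Kalibr describes an aprilgrid target with a small YAML file (`target_type`, `tagCols`, `tagRows`, `tagSize`, `tagSpacing`). The one that trips people up is `tagSpacing`: it is the gap between tags divided by the tag size, not a distance. The helper below is only a sketch for producing that file from measurements of your printed board (printers often scale the PDF, so measure the board itself; Kalibr’s `kalibr_create_target_pdf` tool can generate the target in the first place). The grid size and measurements in the usage line are hypothetical.

```python
def aprilgrid_yaml(cols, rows, tag_size_m, gap_m):
    """Return the text of a Kalibr aprilgrid target file.

    tag_size_m: measured edge length of one tag on the printed board, in metres.
    gap_m: measured gap between neighbouring tags, in metres.
    Kalibr expects tagSpacing as the ratio gap / tagSize.
    """
    if tag_size_m <= 0 or gap_m <= 0:
        raise ValueError("tag size and gap must be positive")
    return (
        "target_type: 'aprilgrid'\n"
        f"tagCols: {cols}\n"
        f"tagRows: {rows}\n"
        f"tagSize: {tag_size_m}\n"
        f"tagSpacing: {gap_m / tag_size_m:.4f}\n"
    )
```

For example, `aprilgrid_yaml(6, 6, 0.088, 0.0264)` describes a 6x6 board with 88 mm tags and 26.4 mm gaps, giving `tagSpacing: 0.3000`; write the string to something like `aprilgrid.yaml` and pass that file to the Kalibr calibration tools as the target.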

Decided not to use the scooter. The rover will be here in a few days. I have some clamps that will work just fine to mount the camera array to the rover so it will be at the correct height. Kalibr worked great to calibrate the 3 cameras. I also managed to stitch two of them together in real time with a little bit of Python code and OpenCV.

It does a decent job, though it makes some assumptions for speed.
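The stitching code itself isn’t shown in the thread, so this is only a guess at one common speed-for-accuracy trade-off: estimate a homography between the two cameras once and reuse it for every frame instead of re-matching features. The sketch covers just the geometry (mapping right-image pixels into the left image’s frame and sizing the output canvas). The `H` in the usage note is a made-up pure translation, not a real calibration, and the canvas helper assumes the right image lands to the right of/below the left one.

```python
def apply_homography(H, x, y):
    """Map pixel (x, y) of the right image into the left image's pixel frame."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w  # divide out the projective scale


def canvas_size(H, left_w, left_h, right_w, right_h):
    """Size (width, height) of a canvas anchored at the left image's top-left
    corner that fits the left image plus the warped right image.
    Assumes the warped right image has non-negative coordinates."""
    corners = [(0, 0), (right_w, 0), (0, right_h), (right_w, right_h)]
    warped = [apply_homography(H, x, y) for x, y in corners]
    max_x = max([left_w] + [u for u, _ in warped])
    max_y = max([left_h] + [v for _, v in warped])
    return int(round(max_x)), int(round(max_y))
```

With `H = [[1, 0, 500], [0, 1, 0], [0, 0, 1]]` and two 640x480 cameras, the canvas comes out as 1140x480. With OpenCV, `H` would typically come from `cv2.findHomography` on matched points, and each frame would then be `cv2.warpPerspective(right, H, canvas)` with the left image copied into the top-left corner.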

Rover arrived today. Noticed the GitHub repo has been updated with the experimental rover code. Thanks, Alexey. Getting the electronics installed in the rover tonight: TX2 and J120, Sweep lidar, ZED camera.

I have a pair of clamps that will clamp the 3-camera rig on top of this rover for gathering data for the model.

Awesome work Dan, really looking forward to RedTail with ArduPilot!

Wheels are a-turning under DNN control. One parameter really messed with me, but I finally figured it out after reading through the mavros code. I’ll try to make a vid tomorrow if the rain holds off. The CheckID script is a lifesaver.

Awesome! Great work Dan.

Awesome! So great, Dan!

Hi Dan,

Great work! I am also trying to put Redtail on a rover (3.5.1), but I am getting stuck with the TX2/mavros part.

When I start mavros, I get timesync warnings:

    level: 1
    name: "mavros: Time Sync"
    message: "Frequency too high."
    hardware_id: "/dev/ttyTHS2:921600"
    values:
      -
        key: "Timesyncs since startup"
        value: "525"
      -
        key: "Frequency (Hz)"
        value: "19.845852"
      -
        key: "Last RTT (ms)"
        value: "568.014294"
      -
        key: "Mean RTT (ms)"
        value: "8951868336.123940"
      -
        key: "Last remote time (s)"
        value: "1566553226.082881451"
      -
        key: "Estimated time offset (s)"
        value: "1566576896.402069092"

When using a joystick, the rover responds to commands but with a long delay (e.g. I command it right for 1 second, and about 30 seconds later the rover starts moving right).

Did you encounter this problem, or do you know of a way to fix it? I am not using APSync because it did not seem like it would solve this problem.
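For reading diagnostics like the ones above, it can help to know the arithmetic behind them. MAVLink’s TIMESYNC message carries two nanosecond fields: a request sets `tc1 = 0` and `ts1` to the sender’s clock, and the reply echoes `ts1` and fills `tc1` with the remote clock. The sketch below computes the round-trip time and an NTP-style clock offset (local minus remote, assuming the reply was stamped halfway through the round trip) from one reply. The formula mirrors what the mavros sys_time plugin appears to do, but treat that as an assumption and check the source for your mavros version.

```python
NS_PER_S = 1_000_000_000


def timesync_sample(ts1_ns, tc1_ns, now_ns):
    """Return (rtt_s, offset_s) for one TIMESYNC reply.

    ts1_ns: our send time, echoed back by the remote side.
    tc1_ns: the remote clock when it answered.
    now_ns: our clock when the reply arrived.
    """
    rtt_ns = now_ns - ts1_ns
    # Offset = local - remote, assuming symmetric delay on the way out and back.
    offset_ns = (ts1_ns + now_ns) / 2 - tc1_ns
    return rtt_ns / NS_PER_S, offset_ns / NS_PER_S
```

For example, if the local clock runs 10 s ahead of the remote one and each direction takes 0.1 s, a request sent at local 100 s gets `tc1` = 90.1 s and arrives back at 100.2 s, giving an RTT of 0.2 s and an offset of 10 s. A single sample with an RTT of hundreds of milliseconds on a direct serial link, like the "Last RTT" above, points at replies being queued somewhere rather than at clock drift.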