Introduction

Hello, I am Asif Khan from Jaipur, India. I am delighted to share that I have been selected as a GSoC 2024 contributor with ArduPilot, with @rmackay9 and @peterbarker as my mentors. I will be working on the Visual Follow-me using AI project this year. In this blog I will discuss my project in detail.

Scope of Object Tracking

Drones are increasingly used for dynamic tasks such as sports filming, event coverage, and surveillance, and there is a lot of scope for camera-based object tracking. The ability to accurately follow and capture moving subjects opens up new opportunities for advanced applications in search and rescue missions, wildlife monitoring, and autonomous delivery systems. Enhanced object tracking capabilities can also improve security and traffic management by providing real-time data and insights.

Problem Statement

This project is divided into two main parts:

1. Adding Visual Follow-me support for all ArduPilot camera gimbals

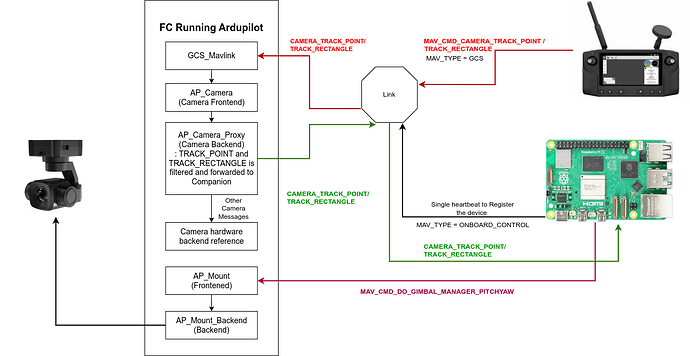

The gimbal will follow the target. The process is as follows:

- The GCS will send a MAV_CMD_CAMERA_TRACK_POINT or MAV_CMD_CAMERA_TRACK_RECTANGLE command to the FC.

- The FC will then pass the message through a set of libraries and forward the request to the companion computer.

- The companion computer is responsible for tracking the point and producing the roll, pitch, and yaw commands. A separate controller script will handle the control algorithm.

- The resulting commands will be fed back to the FC as MAVLink messages that control pitch and yaw, with the aim of keeping the object at the image center. See the GIMBAL_MANAGER_SET_PITCHYAW message definition for details.
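To make the last step more concrete, here is a minimal sketch of the fields a rate-only GIMBAL_MANAGER_SET_PITCHYAW message would carry. The helper name `pitchyaw_rate_fields`, the default system and component IDs, and `flags=0` are assumptions for illustration only; a real script would pass these values to a MAVLink library instead of building a dictionary.

```python
import math


def pitchyaw_rate_fields(pitch_rate, yaw_rate,
                         target_system=1, target_component=1,
                         gimbal_device_id=0):
    """Collect the GIMBAL_MANAGER_SET_PITCHYAW fields for a rate-only command.

    Rates are in rad/s. The angle fields are set to NaN, which the message
    definition treats as "ignore", so only the rates take effect.
    The IDs and flags used here are placeholder assumptions.
    """
    return {
        "target_system": target_system,
        "target_component": target_component,
        "flags": 0,                      # assumption: no special flags set
        "gimbal_device_id": gimbal_device_id,
        "pitch": math.nan,               # angle ignored
        "yaw": math.nan,                 # angle ignored
        "pitch_rate": pitch_rate,
        "yaw_rate": yaw_rate,
    }


if __name__ == "__main__":
    print(pitchyaw_rate_fields(pitch_rate=0.1, yaw_rate=-0.2))
```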

2. Making the vehicle follow the object

In this part the drone itself will follow the target object. ArduPilot already supports calculating the latitude, longitude, and altitude of the point the camera gimbal is aiming at. Using that, we can get the lat, lon, and alt of the target, and feeding that information to Follow mode will make the drone follow the point.

Here is the intended message flow diagram, which may help in understanding the process (it may change a bit over time).

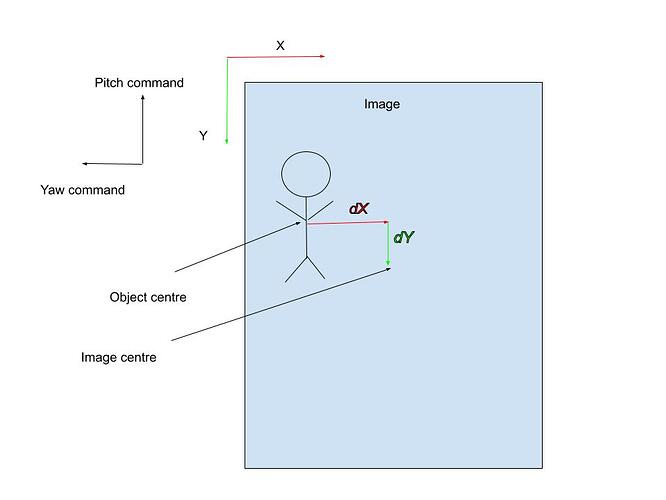

On the companion side, the object tracking algorithm will work together with a PID controller that calculates the pitch and yaw rates. For object tracking, YOLOv8 or OpenCV can be used; both are compatible with Linux-capable development boards such as the NVIDIA Jetson series, Raspberry Pi, etc. The basic controller that I proposed works like this:

dX is the error between the target and the image center along the X axis, and dY is the error along the Y axis. These are the inputs to a separate controller dedicated to tracking: dX is the input for yaw control and dY is the input for pitch control.
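The controller described above can be sketched roughly as follows. This is only an illustration, not the final implementation: the gains, the output limit, the normalization of the error to the range -1 to 1, and the sign convention (image Y grows downward, so the pitch rate is negated) are all assumptions, and the names `PID`, `pixel_error`, and `track_step` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    out_limit: float                  # symmetric clamp on the output (rad/s)
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return the clamped PID output for one time step of length dt."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        # No derivative term on the first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))


def pixel_error(target_center, frame_size):
    """Return (dX, dY): target offset from the image center, normalized to -1..1."""
    cx, cy = target_center
    width, height = frame_size
    dx = (cx - width / 2) / (width / 2)
    dy = (cy - height / 2) / (height / 2)
    return dx, dy


# Placeholder gains; real values would come from tuning.
yaw_pid = PID(kp=0.8, ki=0.0, kd=0.05, out_limit=0.5)
pitch_pid = PID(kp=0.8, ki=0.0, kd=0.05, out_limit=0.5)


def track_step(target_center, frame_size, dt):
    """Map the tracker output for one frame to (pitch_rate, yaw_rate)."""
    dx, dy = pixel_error(target_center, frame_size)
    yaw_rate = yaw_pid.update(dx, dt)        # dX drives yaw
    pitch_rate = -pitch_pid.update(dy, dt)   # dY drives pitch (sign is an assumption)
    return pitch_rate, yaw_rate
```

Normalizing the error keeps the gains independent of the camera resolution, and clamping the output prevents a large initial error from commanding an aggressive gimbal move.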

What if the object goes outside the image frame?

If that happens, the last known object coordinates will be used to keep moving the gimbal, but only for a fixed time window. If the object is found again, tracking will continue. If it is not found within the search limit, the gimbal will stop and hold its last position.
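A minimal sketch of this fallback logic is shown below, assuming the tracker reports `None` for frames where the object is not detected and that the search limit is expressed in seconds. The class name `LostTargetHandler` and its interface are hypothetical.

```python
from typing import Optional, Tuple

Error = Tuple[float, float]  # (dX, dY)


class LostTargetHandler:
    """Decide which error to feed the controller when detections drop out."""

    def __init__(self, search_limit_s: float):
        self.search_limit_s = search_limit_s
        self.last_error: Optional[Error] = None
        self.last_seen_time: Optional[float] = None

    def update(self, error: Optional[Error], now: float) -> Optional[Error]:
        """Return the error to track, or None if the gimbal should hold still.

        error is the (dX, dY) pair for this frame, or None if nothing was detected.
        now is the current time in seconds.
        """
        if error is not None:
            # Object visible: remember it and track normally.
            self.last_error = error
            self.last_seen_time = now
            return error
        if self.last_seen_time is None:
            # Nothing has ever been detected, so there is nothing to chase.
            return None
        if now - self.last_seen_time <= self.search_limit_s:
            # Still inside the search window: keep using the last known error.
            return self.last_error
        # Search limit exceeded: stop and hold the last gimbal position.
        return None
```

When `update` returns `None`, the caller would command zero pitch and yaw rates so the gimbal stays where it is until the object is detected again.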

Thanks for reading this far. I would like to receive more suggestions and insights, so if there is something you would like to add, please let me know with your feedback.

Thank you!