Hello

I’m not doing this often, but this time I just can’t figure this issue out and would like to seek your input:

I have an ArduPilot fork with special additions to operate with a STorM32 gimbal. A key element is that ArduPilot has to send a MAVLink message (AUTOPILOT_STATE_FOR_GIMBAL_DEVICE) to the gimbal at 25 Hz and with as little jitter as possible. A few ms of jitter is OK, but it shouldn’t be much worse, and messages should not be skipped, nor should other weird things happen.

My implementation works perfectly fine for copter, but NOT for plane! For plane I see massive jitter and dropouts, and for the life of me I cannot figure out why.

In copter, the code uses the mount->fast_update() function, which is called in copter’s fast_loop(), and counts down a counter to decimate from the 400 Hz loop rate to 25 Hz. This gives perfectly fine results, as I can directly prove by measuring the incoming data as the STorM32 sees them using the STorM32’s own logging capability (more below), but also in other ways, such as spying on the messages on the MAVLink channel, or via some dedicated dataflash logging messages which I have added to the ArduPilot code (also more below).
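To make the decimation idea concrete, here is a minimal sketch of such a countdown. The class name `RateDecimator` and its interface are made up for illustration; this is not the actual code from the fork and not an ArduPilot API. It assumes the loop rate is an integer multiple of the target rate.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (illustrative only): when tick() is called at
// loop_rate_hz, it returns true at target_rate_hz.
class RateDecimator {
public:
    RateDecimator(uint16_t loop_rate_hz, uint16_t target_rate_hz)
    {
        assert(target_rate_hz > 0 && loop_rate_hz >= target_rate_hz);
        // integer division, e.g. 400 / 25 = 16 or 50 / 25 = 2
        _reload = loop_rate_hz / target_rate_hz;
        _counter = _reload;
    }

    // Call once per loop iteration; returns true when the message is due.
    bool tick()
    {
        if (--_counter > 0) {
            return false;
        }
        _counter = _reload;
        return true;
    }

private:
    uint16_t _reload;
    uint16_t _counter;
};
```

Called from a 400 Hz loop, `tick()` returns true on every 16th call, i.e. 25 times per second; called from a 50 Hz loop, it returns true on every 2nd call.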

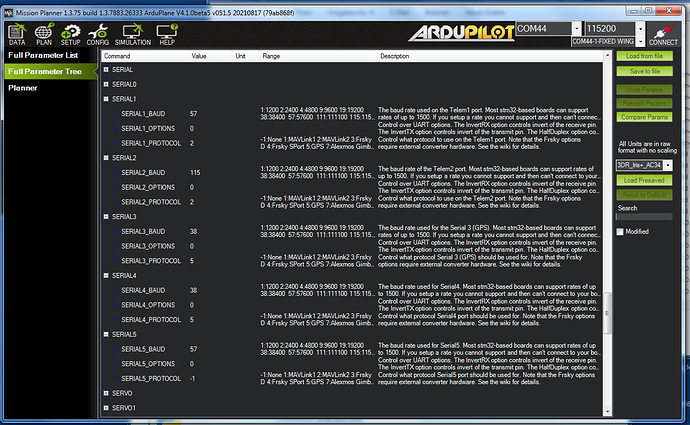

In plane, the very same code is used, except that the mount->fast_update() function is hooked into plane’s ahrs_update() function (as plane doesn’t appear to have a fast_loop()). The code adapts to plane’s different loop rate of 50 Hz. I have tested, however, that the issue does not go away when changing to different loop rates (tested up to 400 Hz to match copter). I even made plane also use the fast_loop() function to make it as close as possible to copter, but with the very same results.

I test the copter code on a MatekH743 and the plane code on a pixhawk1, but I have verified that I get the very same results when I run the plane code on the MatekH743, and I also briefly tried an AUAXV2. I have no indication whatsoever that the type of flight controller matters. I should say that I do not have a plane and hence only do bench tests for plane, and I am also substantially less knowledgeable about the plane code (copter I think I know reasonably well).

I have added logging messages to the ArduPilot code which store some timing values, such as the time when the “do the message” code part is called, the time differences between calls, which give info on the jitter, and the time it takes from the point the “do the message” part is entered until it has done its job, i.e. until after the call of mavlink_msg_xxxx_send().
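The quantities being logged can be sketched as follows. This is a simplified illustration, not the logging code of the fork: `SendTimingProbe` and its members are hypothetical names, and the timestamps are assumed to come from a free-running 32-bit microsecond counter.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical timing probe (illustrative only).
struct SendTimingProbe {
    uint32_t last_entry_us = 0;
    bool have_entry = false;
    uint32_t dt_us = 0;    // time between successive entries (jitter info)
    uint32_t exec_us = 0;  // time from entry until after the send call

    // Call when the "do the message" part is entered.
    void on_entry(uint32_t now_us)
    {
        // unsigned subtraction stays correct across a 32-bit wrap-around
        dt_us = have_entry ? (now_us - last_entry_us) : 0;
        last_entry_us = now_us;
        have_entry = true;
    }

    // Call right after the send function has returned.
    void on_exit(uint32_t now_us)
    {
        assert(have_entry);  // on_exit() must follow an on_entry()
        exec_us = now_us - last_entry_us;
    }
};
```

Note that both values are taken on the flight controller side, so they only tell when the send function was called and returned, not when the bytes actually left the uart.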

These ardupilot logs yield perfectly regular timing, for both the copter and plane implementations. Yet, while for copter the STorM32 receives the messages at perfectly regular times, it doesn’t at all for plane.

From the fact that all I change is the code in the flight controller I’m convinced that the issue is not with STorM32 but something related to plane. From the fact that in both copter and plane I use exactly the same code additions, except that the mount->fast_update() is hooked in differently, I am led to think that the issue is not directly in my code additions.

In fact, for plane it looks as if the message is not sent out on the uart when the mavlink_msg_xxx_send() function is called, but is delayed in some irregular way (it actually looks a bit like beating, as if this is done at some incommensurate frequency), and this is so only in plane, not in copter! I frankly admit that this doesn’t make any sense to me, given that all this is in the library part, so it’s entirely speculation, but it would be an interpretation.
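To illustrate what I mean by beating, here is a toy model. It is purely an assumption for illustration and says nothing about how ArduPilot actually drives the uart: a message is queued at a perfectly regular period, but only leaves at the next tick of some independent task running at a different period. The function name and both periods are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Toy model only: a message is queued every send_period_us, but leaves the
// uart only at the next tick of an independent task running every
// flush_period_us. Returns the intervals between successive departures.
std::vector<uint64_t> departure_intervals_us(uint64_t send_period_us,
                                             uint64_t flush_period_us,
                                             int n_messages)
{
    assert(send_period_us > 0 && flush_period_us > 0);
    std::vector<uint64_t> intervals;
    uint64_t prev_departure = 0;
    for (int k = 0; k < n_messages; k++) {
        const uint64_t queued = static_cast<uint64_t>(k) * send_period_us;
        // round up to the first flush tick at or after the queue time
        const uint64_t departure =
            ((queued + flush_period_us - 1) / flush_period_us) * flush_period_us;
        if (k > 0) {
            intervals.push_back(departure - prev_departure);
        }
        prev_departure = departure;
    }
    return intervals;
}
```

With a 40 ms send period and a made-up 30 ms flush period, the departure intervals come out as 60, 30, 30, 60, 30, 30 ms and so on, repeating every 120 ms, even though the queueing itself is perfectly regular. With periods that are nearly but not exactly commensurate, the pattern would repeat much more slowly.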

Any idea or hint as to what the origin of the issue could be would be very much appreciated!

(I’ve been working my ass off to figure this out for several weeks now, so I’m really desperate)

Many thx, Olli

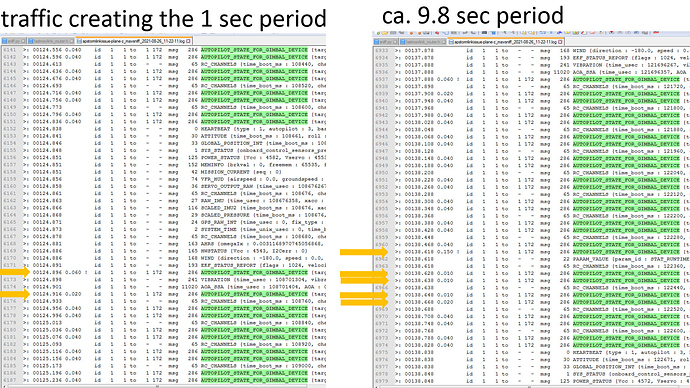

Here are some representative plots to illustrate the issue.

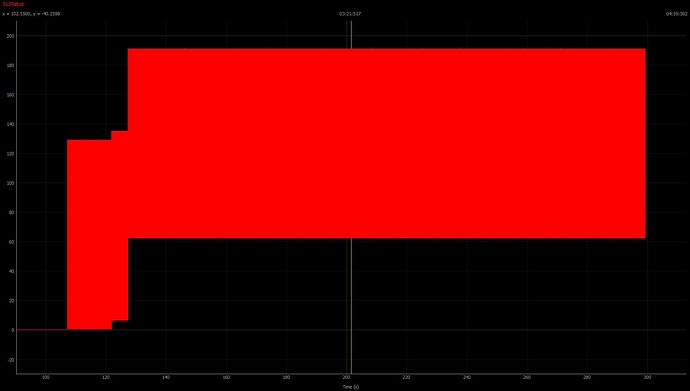

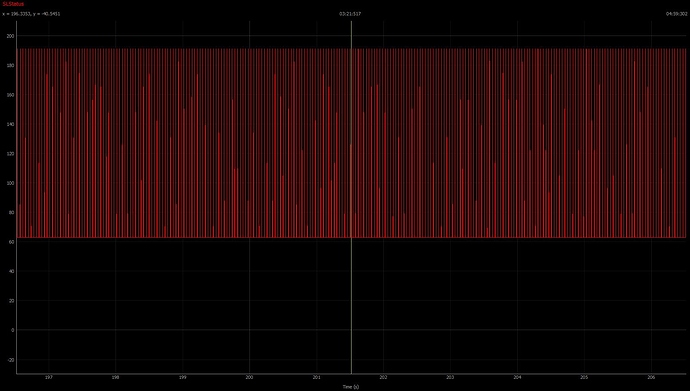

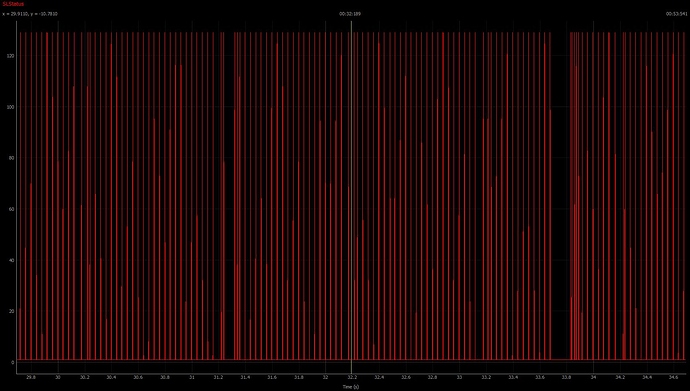

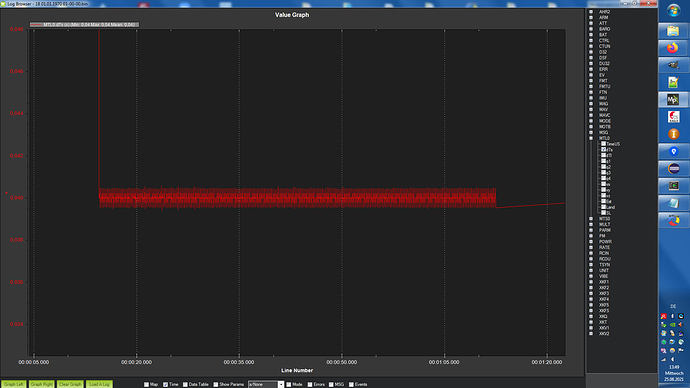

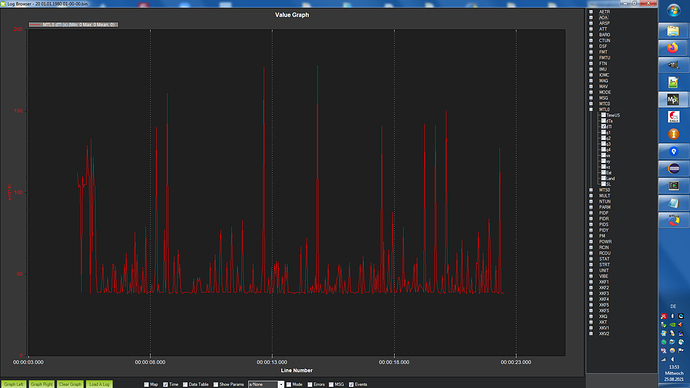

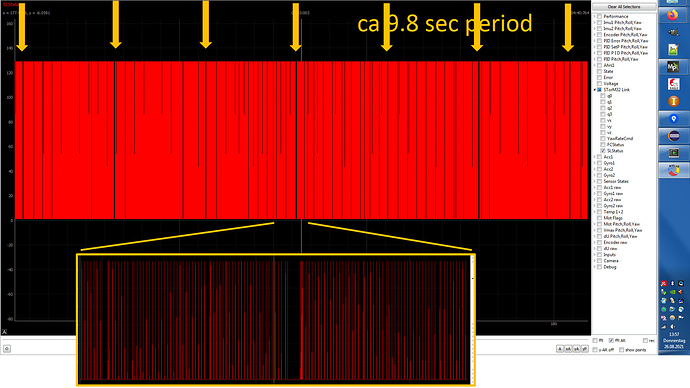

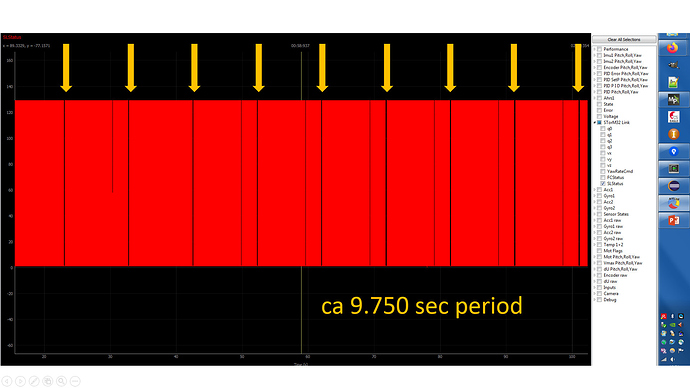

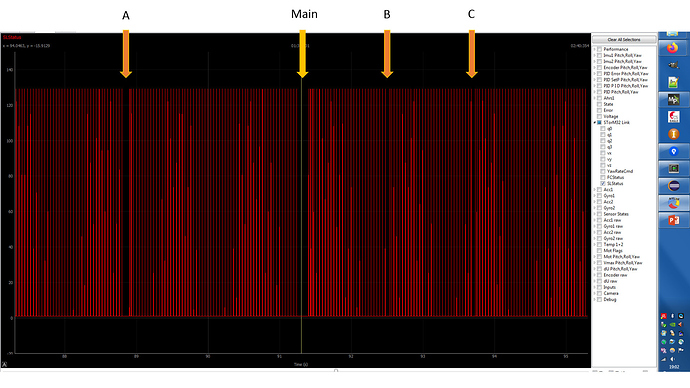

1. STorM32 logs. It’s not important to know exactly what is plotted, only that each spike means that the STorM32 has received and digested said message. So, look at the regularity of the spikes (three zoom levels are shown for each).

1.a Copter

1.b Plane

The irregularity for plane should be obvious, especially when compared to copter. There also seems to be some periodicity: the large gaps seem to reappear roughly every 9.5 s.
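One way to put a number on the gaps and their spacing is to scan the arrival timestamps for intervals that are much longer than the nominal 40 ms. The following is a minimal sketch; the function name, the millisecond timestamp format, and the threshold factor are assumptions, not something the STorM32 logging provides.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the start times (ms) of all gaps between consecutive arrivals
// that are longer than gap_factor times the nominal period.
std::vector<uint32_t> find_gap_starts_ms(const std::vector<uint32_t>& arrival_ms,
                                         uint32_t nominal_ms,
                                         double gap_factor)
{
    assert(nominal_ms > 0);
    std::vector<uint32_t> gap_starts;
    for (std::size_t i = 1; i < arrival_ms.size(); i++) {
        const uint32_t dt = arrival_ms[i] - arrival_ms[i - 1];
        if (dt > gap_factor * nominal_ms) {
            gap_starts.push_back(arrival_ms[i - 1]);
        }
    }
    return gap_starts;
}
```

The differences between successive entries of the returned list then give the spacing of the large gaps, which can be compared against the roughly 9.5 s seen by eye.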

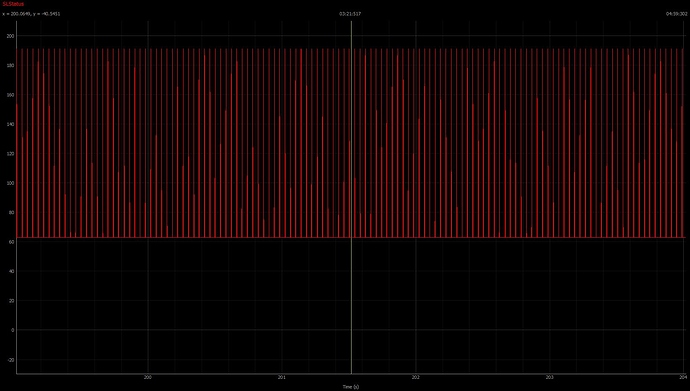

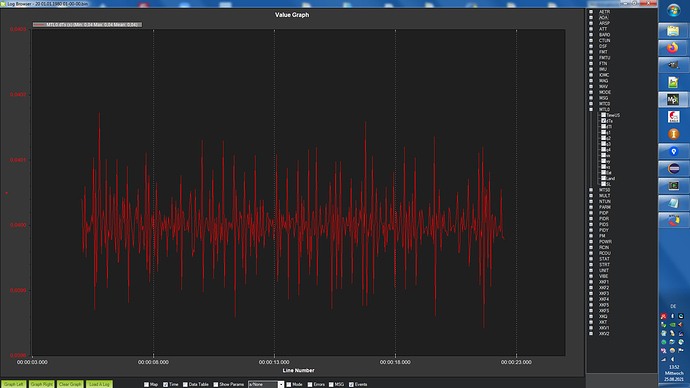

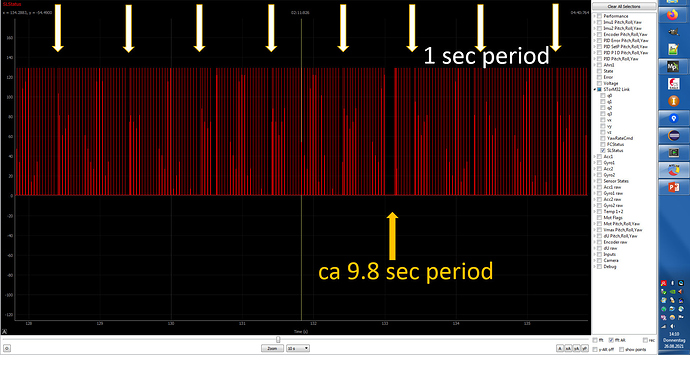

2. DataFlash logs. The plots show the time differences between successive entries into the “do the message” part. The y axis is in seconds.

2.a Copter

2.b Plane

For both copter and plane, the time differences between successive calls are very close to 40 ms, corresponding to 25 Hz.

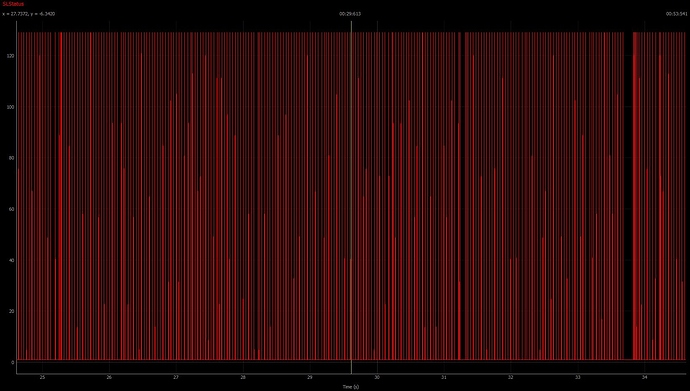

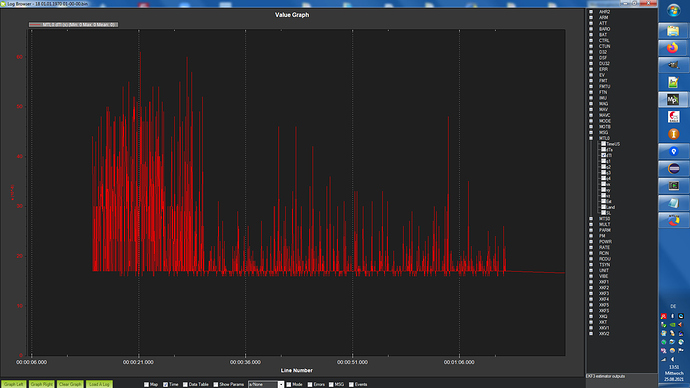

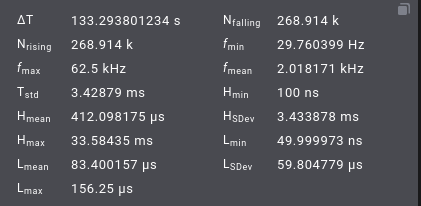

3. DataFlash logs. The plots show the time from entry into the “do the message” part until after the call of mavlink_msg_xxx_send(). The y axis is in seconds, but note that the scaling is 1e-6, i.e., the values are microseconds.

3.a Copter

3.b Plane

For both copter and plane the “execution” times are very similar, and in particular never exceed a few 100 us or so, so they never reach the multiple ms or more that would be needed to account for the observed jitter in plane.