Hello community,

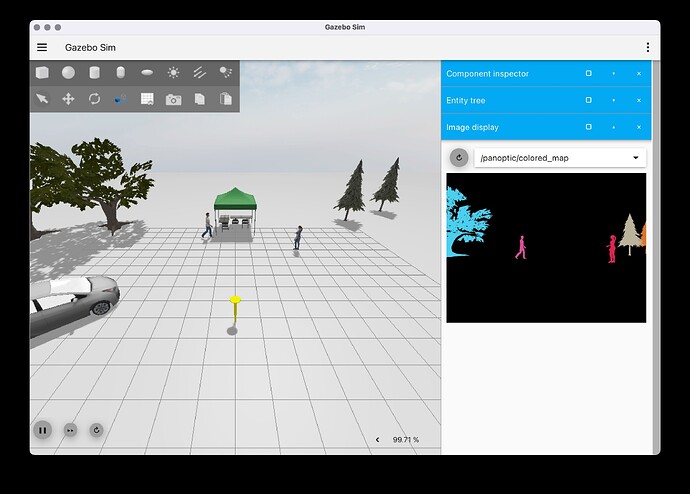

I am currently working on integrating a Realsense D435 with my drone in the ArduPilot Gazebo simulation environment. My goal is to implement obstacle avoidance during the drone’s mission. I have been using the ardupilot_gazebo package.

Could you walk me through how to combine all of these pieces, step by step? I would be extremely grateful.

Here are the specifics of my setup:

- OS / ROS: Ubuntu 20.04, ROS Kinetic
- ArduPilot Gazebo: ArduPilot/ardupilot_gazebo on GitHub (plugins and models for vehicle simulation in Gazebo Sim with ArduPilot SITL controllers)
- Depth camera model: issaiass/realsense_gazebo_plugin on GitHub (the RealSense Gazebo plugin for Intel cameras, from PAL Robotics), using the D435
- Depth-to-ArduPilot bridge: I think I can use this script: https://github.com/thien94/vision_to_mavros/blob/master/scripts/d4xx_to_mavlink.py
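As far as I understand it, that script reduces a horizontal band of the depth image to the 72 distance bins of a MAVLink `OBSTACLE_DISTANCE` message. Below is my own simplified sketch of that idea, not the script's actual code. It is pure Python so it has no dependencies, and it assumes depth values in millimetres (0 = no reading), an 87° horizontal FOV for the D435 depth stream, and min/max ranges of 10 cm / 800 cm that I picked arbitrarily; the function name `depth_rows_to_obstacle_bins` is hypothetical.

```python
import math
from typing import List, Sequence

UINT16_MAX = 65535  # MAVLink OBSTACLE_DISTANCE: "unknown / not used" bin
NUM_BINS = 72       # length of the OBSTACLE_DISTANCE.distances array


def depth_rows_to_obstacle_bins(
    depth_rows_mm: Sequence[Sequence[int]],
    hfov_deg: float = 87.0,   # assumed D435 depth horizontal FOV
    min_cm: int = 10,         # hypothetical minimum trusted range
    max_cm: int = 800,        # hypothetical maximum range
) -> List[int]:
    """Reduce a horizontal band of a depth image to 72 distance bins in cm.

    Bin 0 is the left edge of the field of view; each bin spans
    hfov_deg / 72 degrees. Each bin keeps the closest valid reading.
    """
    width = len(depth_rows_mm[0])
    increment = hfov_deg / NUM_BINS
    # Pinhole model: focal length in pixels derived from the horizontal FOV.
    fx = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    cx = (width - 1) / 2.0
    bins = [UINT16_MAX] * NUM_BINS

    for u in range(width):
        # Horizontal angle of this pixel column; negative = left of centre.
        angle = math.degrees(math.atan((u - cx) / fx))
        idx = min(int((angle + hfov_deg / 2.0) / increment), NUM_BINS - 1)
        for row in depth_rows_mm:
            z_mm = row[u]
            if z_mm <= 0:
                continue  # no depth reading at this pixel
            # Depth is measured along the optical axis; convert to slant range.
            range_cm = int(z_mm / math.cos(math.radians(angle)) / 10.0)
            if range_cm < min_cm:
                continue  # treated as invalid (too close) in this sketch
            range_cm = min(range_cm, max_cm + 1)  # max_cm + 1 means "no obstacle"
            bins[idx] = min(bins[idx], range_cm)
    return bins
```

If I read the MAVLink spec correctly, the returned list would go into the `distances` field (centimetres), with `increment_f = hfov_deg / 72`, `angle_offset` of roughly `-hfov_deg / 2` (degrees from forward, positive clockwise), and `frame = MAV_FRAME_BODY_FRD`; with pymavlink that is a `conn.mav.obstacle_distance_send(...)` call.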

I have not been able to integrate the depth camera with the drone in ArduPilot Gazebo, and I would appreciate any guidance or insights on how to proceed with this task.

Specifically, I am interested in:

- How to configure ArduPilot to use the depth camera data for obstacle detection.
- Whether there are any existing plugins or tools that facilitate this process.
- Any code snippets or examples demonstrating obstacle avoidance using depth cameras with ArduPilot in Gazebo.
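For the first question, this is my current guess at the ArduPilot side, written as a small pymavlink helper. The parameter names come from ArduPilot's object-avoidance documentation as I understand it, but the values are only starting points I have not verified in SITL, `PRX1_TYPE` is called `PRX_TYPE` on firmware older than 4.3, and the names `AVOIDANCE_PARAMS` and `push_params` are hypothetical.

```python
from typing import Dict

# Hypothetical starting values for SITL; check each one against the
# parameter reference for the ArduPilot version you are running.
AVOIDANCE_PARAMS: Dict[str, float] = {
    "PRX1_TYPE": 2,     # 2 = proximity data arrives over MAVLink (PRX_TYPE before 4.3)
    "AVOID_ENABLE": 3,  # bitmask: use fence (1) + use proximity sensor (2)
    "OA_TYPE": 1,       # 1 = BendyRuler path planning in Auto/Guided
}

# Numeric value of mavutil.mavlink.MAV_PARAM_TYPE_REAL32, hard-coded so this
# sketch does not need pymavlink just to be imported.
MAV_PARAM_TYPE_REAL32 = 9


def push_params(conn, params: Dict[str, float]) -> None:
    """Send one PARAM_SET per entry over an already-open pymavlink connection."""
    for name, value in params.items():
        conn.mav.param_set_send(
            conn.target_system,
            conn.target_component,
            name.encode("ascii"),  # param_id is a 16-char MAVLink string
            float(value),          # PARAM_SET always carries a float
            MAV_PARAM_TYPE_REAL32,
        )
```

Usage, assuming MAVProxy forwards SITL telemetry to UDP port 14550: `conn = mavutil.mavlink_connection("udpin:127.0.0.1:14550")`, then `conn.wait_heartbeat()`, then `push_params(conn, AVOIDANCE_PARAMS)`. Typing `param set PRX1_TYPE 2` (and so on) in MAVProxy should do the same; I believe the proximity type only takes effect after restarting SITL.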

I have already reviewed the relevant documentation, but additional insights from the community would be immensely helpful. Thank you in advance for your assistance!