Introduction

Hello AP community! I am Sanket Sharma, a second-year undergraduate student currently pursuing B.E. Mechatronics from India. I am glad to announce that I am selected this year to work with Ardupilot under GSoC’22 with my mentors @rmackay9 and @jmachuca . In this blog I’ll be discussing my GSoC project to update ROS integration for Non-GPS navigation and off-board path-planning.

This project requires testing the instructions for non-GPS navigation and path planning on a multicopter or a rover, updating the base code for the same and based upon time feasibility, integrating the offboard object avoidance with ArduPilot Auto mode. This project would involve development and modification of scripts for Ardupilot and cartographer SLAM along with setting up a companion computer to make everything work as a whole for the multicopter and rover.

As per time allowance, integration of offboard object avoidance with ArduPilot Auto mode that would require modifying and updating mavros package for mavlink protocol.

Current Scenario

Ardupilot as of now has instructions for setting up non-GPS navigation and path planning on the website but they are incomplete in some sense making it difficult for new users to set up navigation properly.

Terms

Definitions of the important terms:

-

ROS - Robotics Operating System is an open-source robotics framework that provides a range of libraries, device drivers, and high-quality developer tools that helps students, hobbyists, and enthusiasts to develop their robotics softwares.

-

Cartographer SLAM - Cartographer SLAM is a combination of two connected subsystems, Local SLAM and Global SLAM. Local SLAM builds successive submaps. Global SLAM’s main work is to find loop closure constraints between nodes and submaps and then optimising it. The core concept on SLAM is pose graph optimization.

-

Occupancy grid - We would be using occupancy grid for defining the area mapped using cartographer SLAM. Occupancy grid divides the area into map-pixels, also known as cells and assigns them as occupied or free using a binary method. ‘1’ for occupied and ‘0’ for free. This method is also called a binary occupancy grid method.

-

Costmap - Costmap is a grid in which every cell gets a value(difficulty or cost) based upon the area to be transverse. In the case of a rover, moving in rough ground, the costmap could be a grid where cells with lower values represent flat surfaces and cells with higher values represent rough areas or obstacles or walls.

-

Trajectory Rollout and Dynamic Window - Trajectory Rollout and Dynamic Window is a reactive motion planner that takes local information available within a sensor footprint and a global objective defined in a map coordinate frame to identify a locally feasible path to follow that is collision free and makes progress to a goal.

Approach

I have divided the approach into 2 step tasks:

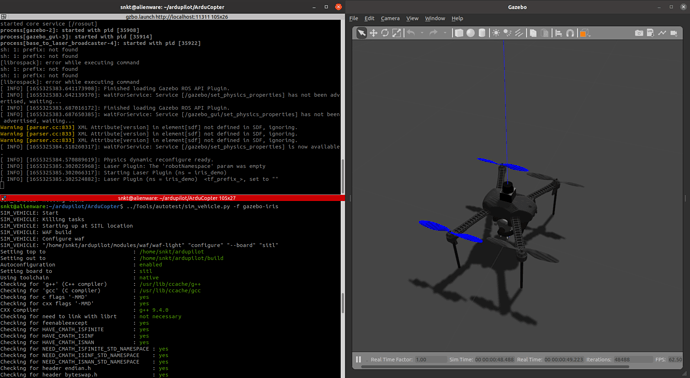

Step 1 - Setting up SITL simulation environment, non-GPS navigation and path planning:*

This task revolves around setting up the environment, the dependencies, and configuring ardupilot’s build system along with ROS integration and set up a drone(software testing(SITL)).

In this task, we’ll set up a simulated environment with either a multicopter and a rover and set up non-GPS navigation and path planning for the vehicle. It will help in making major and minor modifications to the already available code base before testing on real hardware.

This task would be accomplished in the following subtasks:

-

Setting up ROS Noetic in Ubuntu 20.04

-

Setting up ardupilot SITL and ardupilot_gazebo

-

Installing the majorly required ROS packages in our ROS workspace

a. cartographer

b. cartographer_ros

c. move_base

d. ap_navigation / ros_navigation

e. mavlink & mavros -

Setup and modify the required files as mentioned on the ardupilot docs.

There were required changes needed to be done to configure ardupilot to support positioning though visual odometry instead of relying on GPS. All the changes are/will be mentioned on this page: -

Modifying the parameter in the lua file for tuning the cartographer SLAM.

For cartographer to properly map and localize the rover/multicopter, its parameters are required to be modified.For tuning cartographer, the following parameters are needed to be modified while testing the LiDAR:

➢ TRAJECTORY_BUILDER_2D.ceres_scan_matcher.translation_weight

➢ TRAJECTORY_BUILDER_2D.ceres_scan_matcher.rotation_weight

➢ TRAJECTORY_BUILDER_2D.real_time_correlative_scan_matcher.translation_delta_cost_weight

➢ TRAJECTORY_BUILDER_2D.real_time_correlative_scan_matcher.rotation_delta_cost_weight

➢ TRAJECTORY_BUILDER_2D.real_time_correlative_scan_matcher.linear_search_windowAll the parameters and configurations are/will be updated in this PR.

-

Test the mapping and navigation part.

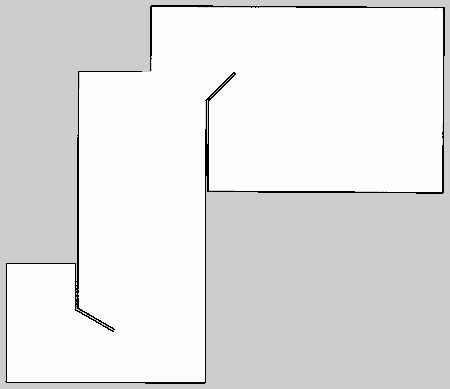

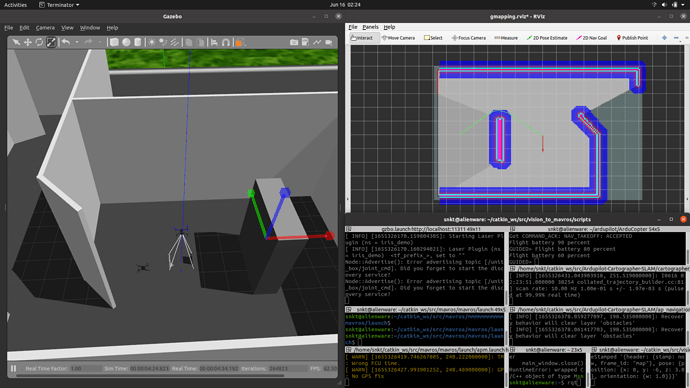

Full map generated by cartographer using LiDAR data from multicopter:

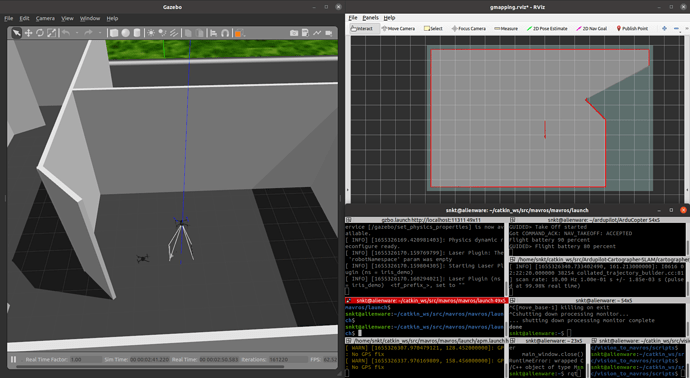

Path planning and obstacle avoidance:As we’ll be using the move_base package from the ros_navigation stack, we’ll be dealing with tuning parameters for local and global costmaps and trajectory planner for base_local_planner ros package.

The ap_navigation package from the ardupilot community has some of the parameters required for tuning trajectory planning and costmaps but various other parameters are required to be added and modified.

After adding and modifying some of such parameters the result:

The green line here depicts the planned path and for motion planning, velocity commands are given to the vehicle.

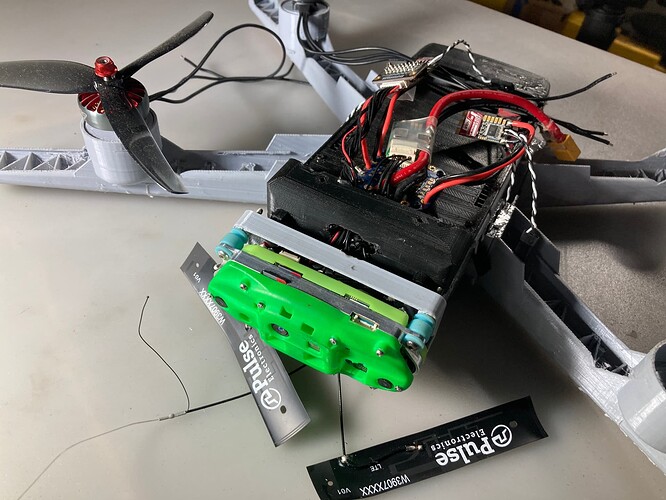

Step 2 - Setting up the vehicle and companion computer environment:

Here we’ll setup companion computer normally with Ubuntu 20.04/18.04 with ROS Noetic/Melodic and make all the necessary connections with autopilot flashed with ArduPilot.

Almost all the packages will be installed similar to what have been done in simulation except for rplidar_ros package if we’ll be using RPLiDAR to get the LiDAR data as a ROS topic.

After all this, majority work is done in tuning the cartographer SLAM. All the parameters needed to be modified are discussed above in simulation part. Generally two types of parameters are required to be modified:

-

CeresScanMatcher: It interpolate the already created submap and through the initial guess it made, it finds a place where the scan match fits. If sensors are trustworthy enough, then this method is preferred.

-

RealTimeCorrelativeScanMatcher: It can be enabled if we don’t have much trust in our sensors. It matches the scan to the current submap using loop closure. Then the best match is used in CeresScanMatcher.

Git link: https://github.com/snktshrma/Ardupilot-Cartographer-SLAM

Integrate the offboard object avoidance with ArduPilot Auto mode

If the time allows, the final task would be to integrate the offboard avoidance with Ardupilot Auto mode. For this task, we need to modify the mavros plugins and scripts.

-

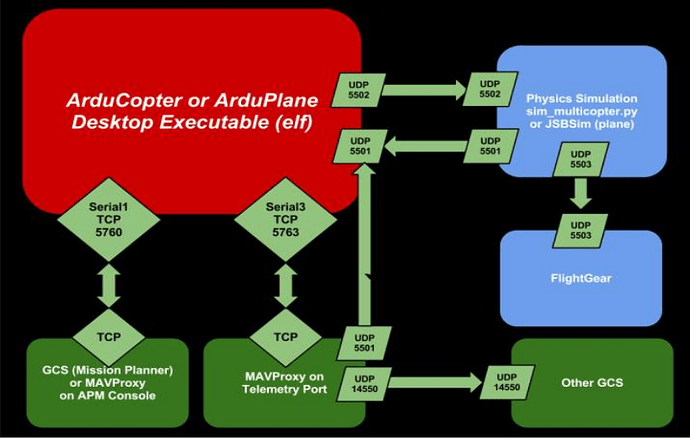

MAVLINK: MAVLink is a very lightweight messaging protocol for communicating with drones (and between onboard drone components).

MAVLink follows a modern hybrid publish-subscribe and point-to point design pattern: Data streams are sent / published as topics while configuration sub-protocols such as the mission protocol or parameter protocol are point-to-point with retransmission. -

MAVROS: MAVROS is a ROS package that enables MAVLink extendable communication between computers running ROS for any MAVLink enabled autopilot, ground station, or peripheral.

Note: The above architecture for ardupilot is for the SITL environment but connection with GCS or mavproxy remains the same in real life usage.

Ardupilot communicates with the simulator (e.g. Gazebo) to receive sensor data from the simulated world and send motor and actuator values. In real life, sensors and actuators will be directly connected with FCU.

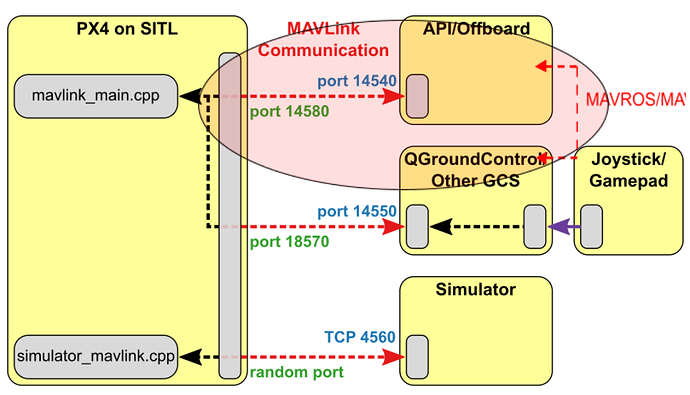

Architecture below represents the PX4 on SITL, that is the integration of ROS/Gazebo with PX4. The architecture is very much similar to the ardupilot except for the Simulator node which will be replaced in real drone by actual connections of actuators and sensors with the FCU.

Assuming the above architecture to be very much similar to that of Ardupilot after connecting with ROS through MAVROS. When ROS (API/Offboard) on a companion computer will be available, the communication of ROS with Ardupilot and GCS will happen over MAVLink using the MAVROS package. MAVROS package bridges ROS topics to MAVLink and Ardupilot conventions.

Ardupilot communicates with the GCS and an Offboard API (e.g. ROS) to send telemetry from the vehicle and receive commands.

For the completion of this task, modification of MAVROS plugins is required.

The general flow to solve this task is as follows:

- Final target sent from GCS/mavproxy when vehicle in Auto mode.

- Final target received by Ardupilot and ROS over MAVROS continuously at 1hz.

- ROS offboard path planning calculates the path around the obstacles and sends appropriate velocity commands to the ardupilot.

This problem is also mentioned in this PR .

The way I see it is to create a new MAVROS plugin that subscribes to POSITION_TARGET_GLOBAL_INT mavlink message, convert the position targets to ENU and publish a rostopic with the position target for the ROS offboard path planning to calculate velocity commands. Then these velocity commands will be sent back to FCU to command the vehicle around the obstacle.

It would be fascinating to hear what members of the community and the development team have to say about this approach. Suggestions and feedbacks are always appreciated, and we may discuss them in the comments section below.

Thank you!

![IMG_3952[1].jpg](https://discuss.ardupilot.org/uploads/default/optimized/3X/1/d/1d092e366f9ff886120a62b2828c5b74384d3980_2_357x476.jpeg)