Some of you might be thinking that why am I writing a blog post on collision avoidance. Isn’t it already there in Ardupilot? Afterall there is even a dedicated wiki to explain precisely how it works right? Well, I agree with you, but you see there are a few shortcomings with that approach. First, you need an expensive sensor like the LightWare SF40C to compute the distance from the obstacles. Let us assume that you saved a few bucks (~1k for LightWare SF40C) and managed to get it and took your drone out to play around or to brag about the cool sensor you just bought to your friends and you notice that the drone is still unable to fly through narrow passages or sometimes just fails to find a way around the obstacles. Well, I know the feeling. And then there is this thing which probably bothers me the most that why can’t I do autonomous navigation giving just the goal and copter does the rest even if it is in a maze-like environment. So I thought to myself that wouldn’t it be awesome to have a completely autonomous system which can use a cheap stereo camera (going for 60$ to 80$ these days) and do all of these things.

This summer, I am quite excited to be working on doing the same as a part of GSoC 2018! So basically my proposal is divided into two parts, first is the real-time 3D mapping and second is goal-directed planning for obstacle avoidance. The idea is to keep on generating the 3D map of the environment on the fly and try to reach to the goal point by reactively computing intermediate waypoints to the final goal which avoids the obstacles registered on the map. The computation of map and the trajectories can be done on a companion computers like Raspberry Pi or Odroid.

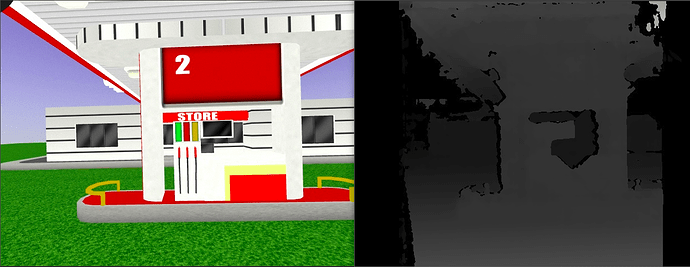

Let’s talk some more about the 3D mapping framework and how it will work. As mentioned above, I am using a stereo camera which gives us the depth information of the environment. The way this works is that we try to figure out where exactly an arbitrary point say [x, y, z] projects into both the cameras (pixel coordinates). Using this information for every point in the left and right pair of images we create a disparity map like this:

This disparity map can then be projected into a point cloud if we know the baseline between the two cameras. Thankfully all of this is can be implemented using simple OpenCV functions. The end result is that for every image frame we have a 3D point cloud which can now be used for mapping.

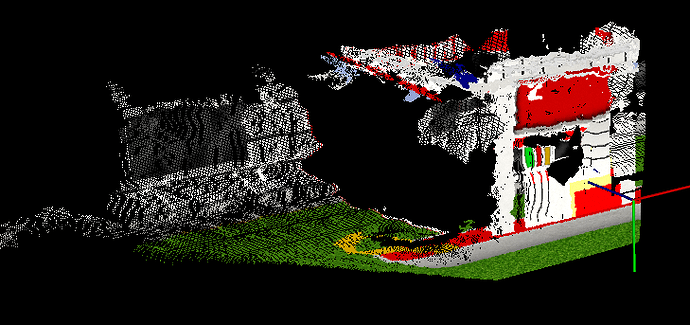

The idea behind mapping is simple. Firstly, we will need a reference frame. For now, let us assume it is the Local NED frame which means that our map is aligned to the north as its +X direction with its origin at the local frame. Next, we need to transform the point cloud data from the camera frame to this map frame before integrating it with our final map. This transformation comes from the odometry data from the drone hence the quality of the map will be directly proportional to the accuracy of the odometry. This should give us a fused 3D point cloud which looks like this:

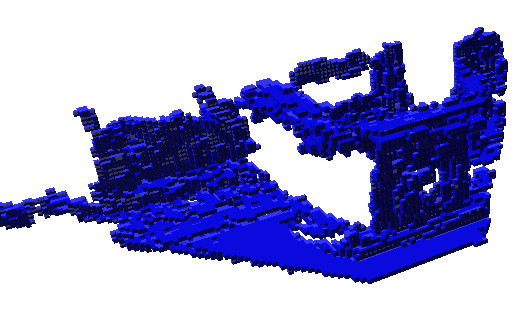

But wait, our ultimate objective is not just to create a 3D map but also to use it for planning. This is where a different map representation called occupancy map comes into the picture. The idea behind an occupancy map is to discretize the world into grids and use the Bayes theorem to compute and update the posterior probability of each cell being occupied using observations from current measurements. This method of mapping serves two purposes. First, we can easily find a path to the goal avoiding the cells which are occupied. Second, we are not just dumping the information from the past and utilizing it to improve our map at every sensor feedback iteration.

But the story isn’t over yet, this kind of representation scales well in 2D like in case of a rover but in 3D it becomes quite difficult to keep a track of each and every cell in the map. It also poses a serious memory overhead as the size of the map increases. To resolve this issue an interesting data structure was proposed called Octrees. An Octree encodes the 3D grid information efficiently in the memory and makes the operations like traversing on it very fast. Hence I am currently using a library called Octomap which does all this for me. So the input to this tree is a point cloud and after thresholding the probabilities we get a binary representation of occupancy map consisting of information about all the occupied and unoccupied cells. Here is how it looks like:

The next steps involve conducting some more tests on SITL simulations for calibrating some parameters to improve the quality of the map and extracting only a small bounded region before giving it to the planner. I am also thinking of projecting this map in 2D and publishing it as a DISTANCE_SENSOR message for failsafe on AC_Avoidance library if the planner fails to find a feasible trajectory.

I am developing this framework without having any dependency on ROS to enable most of you to use it on any Linux based system for now, but I do have plans to do the same using ROS for folks (like me :P) who are into that. The teaser image is of a similar pipeline which I implemented on ROS a while ago [code]

I have yet to test this on my drone, just hoping for the weather to get a little bit better. I am hoping to share some more results on the drone with you guys soon. Once mapping framework is complete I will also share my ideas on how I will implement the planning module. I look forward to your suggestions and comments.

I would like to thank my mentors @jmachuca77 and @rmackay9 for their valuable feedback and also @khancyr and @tridge for helping me fix certain issues with the SITL simulations.