Get your popcorn ready and let’s begin the second part of the mapping and planning trilogy. I last left off showing the 3D occupancy map construction on Gazebo STIL simulation [Part 1]. Hence, before we begin with the discussion on planning, I think I should mention about some tests I attempted afterword on the companion computer.

Mainly, I was facing issues with the disparity map generation and pointcloud fusion into octomap due to limited onboard resources. Initially, it was taking ~1s to process one pointcloud and ~3s for disparity computation on Odroid XU4. After struggling for a while I managed to get it to work 5Hz. The major boosting factor was to enable the compiler optimizations. I also had to compile OpenCV from source with NEON and other architecture-specific optimizations. I also reduced the image size by half to bring the overall speed to 5Hz. Following @fnoop’s suggestion, I borrowed an Intel RealSense D415 and tried running it indoors and the results were quite impressive. So I think using a device like that would be ideal since it does disparity computation onboard which is the most expensive part of the pipeline. In fact, I think the remaining processing can be handled even on a Raspberry Pi. I don’t have any outdoor results to show for now due to bad weather but I’ll keep you guys posted as soon as I get it.

Alright then, since the past few weeks have flown by so fast (unlike my drone  ) so let’s quickly get back to our discussion on planning. In our case, planning is focused on satisfying two constraints which are avoiding collisions and reaching the goal. There are various motion planning algorithms for solving such problems which are categorized based on the approaches, for e.g., potential fields, reward based, sampling based etc. All these approaches have their pros and cons like the potential field-based approaches are fast but they have a tendency of getting stuck at local minima. Similarly, the reward-based approaches give an optimal solution but the reward modeling itself can become intractable. On the other hand, sampling based algorithms are sometimes slow to converge but are capable of finding a solution given the robot configuration space. The major advantage of using such algorithms comes when solving a problem with a large planning space bounds. These methods are probabilistically guaranteed to find a solution if it exists and if given sufficient time. Another major advantage is that they are widely used and are fairly easy to implement. Several algorithms like Dijkstra, A*, D*, RRTs, etc., all fall into this category of approaches.

) so let’s quickly get back to our discussion on planning. In our case, planning is focused on satisfying two constraints which are avoiding collisions and reaching the goal. There are various motion planning algorithms for solving such problems which are categorized based on the approaches, for e.g., potential fields, reward based, sampling based etc. All these approaches have their pros and cons like the potential field-based approaches are fast but they have a tendency of getting stuck at local minima. Similarly, the reward-based approaches give an optimal solution but the reward modeling itself can become intractable. On the other hand, sampling based algorithms are sometimes slow to converge but are capable of finding a solution given the robot configuration space. The major advantage of using such algorithms comes when solving a problem with a large planning space bounds. These methods are probabilistically guaranteed to find a solution if it exists and if given sufficient time. Another major advantage is that they are widely used and are fairly easy to implement. Several algorithms like Dijkstra, A*, D*, RRTs, etc., all fall into this category of approaches.

Now that we have narrowed down to the class of approach we will be using, let’s figure out which algorithm to choose from the list. Dijkstra is a well known shortest path search algorithm in which each of the nodes is visited and we try to find a set of nodes which minimizes a cost (distance in most cases) for reaching the goal. A* adds a heuristic function to Dijkstra to lead the search in the direction of the goal and reduce the convergence time. RRTs have a special feature to direct the search in the direction of large unexplored regions by sampling the space in the direction of tree expansion. This results in improved convergence especially when the sampling space is large. An increment to RRT is the RRT* algorithm which adds a notion of optimality to it. Hence, this algorithm not just searches for a path but also tries to optimize an objective function like path length. But wait! there is an even better variant of RRT* called Informed RRT* which improves the convergence rate further by reducing the search space everytime an improved solution is found [video explination]. This is the one which we would be using.

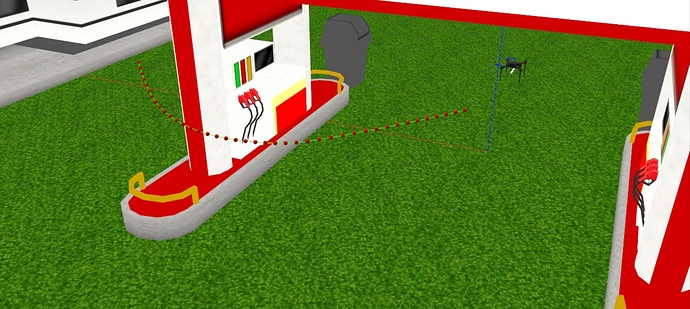

So that’s enough about the choice of algorithm, let us discuss more on implementation now. I am using the infamous Open Motion Planning Library (OMPL) and the Flexible Collision Library (FCL). For those of you who have used MoveIt might already be familiar with these. OMPL provides an efficient API making it extremely easy to compare and test amongst the rich set of planning algorithms available. FCL, as the name suggests is used for checking for collisions between the sampled state and the octomap. The pipeline begins by first generating an initial map of the environment by performing a 360-degree rotation maneuver. Once an initial map is constructed the planner is called in a new thread which takes in goal point and uses the current location of the drone to generate the plan. Since we are using Informed RRT* we only get a visibility graph. This visibility graph is discontinuous hence trajectory smoothening is required which is done using B-Spline which acts as the final path. As soon as a new plan is received an executor thread picks up the plan and starts giving position commands to the drone based on a constant velocity profile. The video below gives you the basic idea of how it works.

This would have been the end of it but there is one big problem here. We don’t have the complete map hence there is a good chance that the drone will collide especially when the goal is far. To resolve this we have to constantly process the pointcloud and generate octomap and replan if the previous plan becomes invalid due to collisions. Although, this might sound trivial but this involves a lot of concurrency making this task a bit challenging.

I am currently looking into resolving certain issues with improper usage of RAII in my code which hinders callbacks to multiple async workers ( >5 different threads run in parallel). I hope that once I resolve these bugs the results would be much more interesting. In the upcoming weeks, I will try to run this code on the drone with a companion computer onboard (hopefully the weather will get better).

I look forward to your awesome feedback and suggestions as always and thanks to everyone who have helped me in the process.