Using Convolutional Neural Networks (CNNs) to solve very complex computer vision problems is the current trend in the community: researchers are applying CNNs to problems that are too complicated for handcrafted methods. At the same time, drones are becoming very popular among researchers. This project sits at the intersection of the two.

In this project, we address the challenges of designing and building a small form factor, GPU-enabled drone that is powerful enough to run a lightweight CNN onboard. The drone will then be used to perform autonomous tasks with a monocular camera, such as navigation and human-robot interaction (HRI).

For designing this UAV we have considered the following criteria:

- Monocular camera: Although depth information makes controlling a UAV easier, depth sensors have limitations (e.g. limited depth estimation range, restricted operating environments for structured-light RGBD sensors, huge data streams), so we are more interested in using monocular cameras.

- Embedded computation: It is possible to run the computation offboard on a powerful external computer and send the control commands to the UAV for execution. Our research group has previously used this approach for long-range, close-range, and even hands-free HRI with UAVs. We prefer to move the computation onboard so that the UAV is self-contained. The best currently available companion computer for this task is the NVIDIA Jetson TX2, which has 256 CUDA cores and is powerful enough to run a very lightweight CNN.

- Small form factor: The proof-of-concept project in which a drone was equipped with a Jetson module was NVIDIA's Redtail drone. Since then, many commercial products have tried to offer a UAV equipped with a Jetson module. However, most of them are extremely big and heavy. Such drones cannot be used easily and freely for research, and many countries' flight regulations do not allow them to fly near humans. We want our design to be small and light enough to fall under the lightweight hobby drone category, so that it can be used anywhere other small form factor camera drones are allowed, while still being powerful enough to carry the Jetson TX2, a high-resolution camera, and any other required equipment.

- ROS: ROS is becoming the standard framework for controlling robots in academia and industry. We want our drone to be ROS-enabled, and we want to be able to connect to it over WiFi and control it through ROS from an external computer when more computational power is needed.

The first phase of this project is designing and building a UAV considering all the aforementioned criteria. We then use the system to implement and perform some autonomous tasks.

In the NVIDIA Redtail project, monocular-camera trail following was done using PX4 firmware on the flight controller. The second phase of our project is to port the Redtail project to ArduPilot.

For our final phase, we are planning to use this system for another vision-based complex task. This phase consists of preparing a dataset, designing and training a CNN for the task, and compressing and optimizing the CNN model for the TX2 module.

Hardware design:

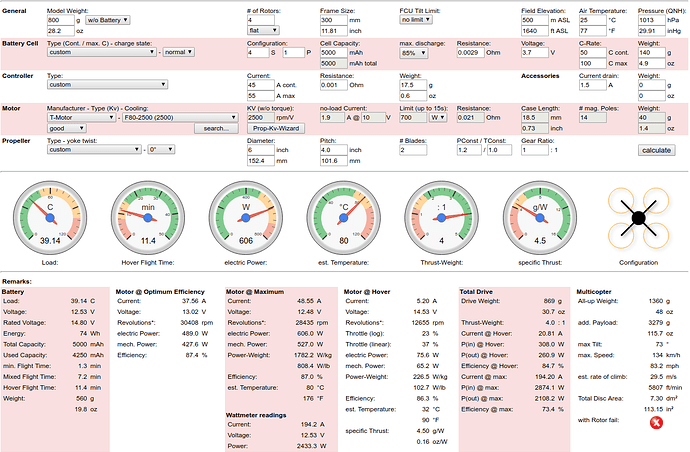

As mentioned before, we are trying to keep the design as small and light as possible. At the same time, we need to make sure it is powerful enough to easily lift and carry all the components required for this project, with some margin left for unplanned payloads that may be added later.

Here’s the list of selected components for this project:

- Flight controller: Pixhawk 2.1 (The Cube)

- Companion computer: NVIDIA Jetson TX2, which is equipped with 256 CUDA cores and is capable of running small CNNs. The Jetson TX2 development kit comes with a large breakout board which exposes most of the available I/O and is good for development and testing. However, this board is too heavy to fly, especially on a small UAV. We used the Auvidea J120 breakout board, which is small and light enough to carry onboard a small form factor UAV and still exposes all the I/O we need for this project.

- Optical Flow Sensor: As in the original Redtail project, we use a PX4Flow so that optical flow can be used to control the drone in GPS-denied environments. The original 16mm lens of this sensor is replaced with a wider lens to get a more stable optical flow. The replacement lens also has a built-in IR filter to filter out the laser rangefinder's IR.

- Lidar: To compensate for the shortcomings of the PX4Flow's ultrasound sensor in estimating depth and calculating the drone's altitude, we use a PulsedLight single-point laser rangefinder.

- Motor: As we want to use small propellers (5"-6"), we need to compensate for the lower propulsion by increasing the motors' RPM. Most of the high-KV motors used in FPV flying are designed for extremely light drones. The Tiger Motor F80 2500KV, however, is designed to generate high thrust (up to 1640 g on 6045 propellers) on a 4S LiPo while still being light.

- ESC: From the same company, the F45A ESCs are designed especially for the aforementioned motors. They are very lightweight, have low resistance, and provide 45A (55A burst).

- Camera: A Logitech C922x Pro USB camera is used for high-quality, high FPS video stream, with built-in H.264 encoder.

- Landing gear: We used DJI Matrice 100 landing gear, which is robust and durable and has a suspension system to avoid damaging the drone on rough landings.

- Power distribution board: The battery's output is monitored by a 200A current sensor. A 12V/3A regulator is also used to isolate the J120 board's input (although the J120 datasheet states that anything between 7V and 17V is acceptable).
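To make the "powerful enough, with some slack" requirement concrete, here is a minimal thrust-budget sketch. Only the 1640 g per-motor figure comes from the motor description above (and it applies to 6045 propellers on 4S). The motor count of four, the 1500 g all-up weight, the 2:1 target ratio and the function names are assumptions for illustration, not measured values from this build.

```python
# Rough thrust budget. Only MAX_THRUST_PER_MOTOR_G comes from the post;
# everything else is an illustrative assumption.
MAX_THRUST_PER_MOTOR_G = 1640.0  # quoted max thrust on 6045 props, 4S
NUM_MOTORS = 4                   # assumption: quadcopter layout


def total_max_thrust_g(thrust_per_motor_g=MAX_THRUST_PER_MOTOR_G,
                       num_motors=NUM_MOTORS):
    """Combined maximum static thrust of all motors, in grams."""
    return thrust_per_motor_g * num_motors


def thrust_to_weight(all_up_weight_g):
    """Ratio of total maximum thrust to the take-off weight."""
    if all_up_weight_g <= 0:
        raise ValueError("all_up_weight_g must be positive")
    return total_max_thrust_g() / all_up_weight_g


def payload_margin_g(all_up_weight_g, min_ratio=2.0):
    """Extra mass that still keeps thrust-to-weight at or above min_ratio."""
    return total_max_thrust_g() / min_ratio - all_up_weight_g


if __name__ == "__main__":
    weight_g = 1500.0  # hypothetical all-up weight, not a measured value
    print("thrust-to-weight:", round(thrust_to_weight(weight_g), 2))
    print("payload margin (g):", round(payload_margin_g(weight_g), 1))
```

Real thrust will be lower with smaller propellers or a sagging battery, so treat the result as an upper bound rather than a flight-tested number.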

Mechanical design: As mentioned before, I tried to keep the design as small as possible. However, the frame is designed so that the propeller size can be increased up to 8" (with a small modification such as relocating the GPS) if for any reason we decide to use larger propellers and lower motor speeds.

I have tried to keep the area directly underneath the propeller disks as clear as possible so that the motors can produce maximum thrust. The drone is a bit tall, because I couldn't fit all the components on a single level within the constraints I had in mind (such as the size limit, and having the Pixhawk and the PX4Flow's lens exactly at the centre of the drone), but the centre of mass is kept as low as possible.

The 3D printed vibration damper is unnecessary for Pixhawk 2.1 and could easily be removed.

P.S.: This is my first mechanical design ever, so your comments can help me learn a lot.

Hardware assembly and tuning

For assembly and tuning, I mostly followed the ArduPilot instructions for each component. I will spare the details of each part, except for the problems that I encountered and couldn't find a straightforward solution for in the community. I describe those below, along with how I fixed them, in case they help other people.

Lidar Lite: I have seen a lot of discussion about the Lidar Lite suddenly adding a 13m offset, so I tried to reproduce this issue in bench tests. It is easy to corrupt the sensor readings by oscillating the input voltage by a very small amount around 5V. If these oscillations are fast enough, the 13m offset appears and does not go away until you restart the sensor. This is very easy to reproduce with an external power supply whose voltage you can change on the fly. I also tried 5 different voltage regulators. In my experience, the issue never happened with linear voltage regulators but was seen a couple of times with switching regulators.

Switching regulators are known to provide noisier outputs, but linear regulators drain your power faster. Most of the UBECs I've seen used in drones, and the ones built into ESCs, are switching regulators, to save as much energy as possible. I still need to do more tests on this matter, but as far as my experiments go: if you rely heavily on the laser rangefinder data (in our case, position control based on optical flow without GPS), try using linear regulators at the cost of wasting a bit of battery power and losing some flight time, or find UBECs that don't produce much noise (usually you can see a huge capacitor on these). Luckily, my ESCs don't have a BEC, so it is easier for me to isolate the voltage for the lidar. You can even use a completely separate power source for the lidar; just be sure the GND is shared.
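To put a rough number on "wasting a bit of battery power", here is a small sketch of the standard ideal linear-regulator model: the dropped voltage times the load current is dissipated as heat, and efficiency is at best the output-to-input voltage ratio. The 12V input, 5V output and 0.1A load in the example are illustrative assumptions, not measurements from this build, and quiescent current is ignored.

```python
def linear_regulator_loss_w(v_in, v_out, load_current_a):
    """Power (W) an ideal linear regulator burns as heat: (v_in - v_out) * I."""
    if v_in < v_out:
        raise ValueError("a linear regulator cannot step the voltage up")
    return (v_in - v_out) * load_current_a


def linear_regulator_efficiency(v_in, v_out):
    """Best-case efficiency of a linear regulator: v_out / v_in."""
    if v_in <= 0 or v_out < 0 or v_in < v_out:
        raise ValueError("expected 0 <= v_out <= v_in and v_in > 0")
    return v_out / v_in


if __name__ == "__main__":
    # Hypothetical example: feeding a 5 V sensor from a 12 V rail at 0.1 A.
    print("loss (W):", linear_regulator_loss_w(12.0, 5.0, 0.1))
    print("efficiency:", round(linear_regulator_efficiency(12.0, 5.0), 3))
```

For a low-current sensor the absolute loss is small, which is why trading a little efficiency for a cleaner supply can be reasonable here.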

Again, these are my personal experiences from bench tests. I have never actually crashed a vehicle because of a lidar failure; I am just taking some precautions. Any input or other experiences on this matter are appreciated.
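As one possible software precaution, the sudden offset could be caught by watching for an implausible jump between consecutive readings. The sketch below is only an illustration: the class name, the 5m step threshold and the latch-until-reset behaviour are my assumptions (the latch mirrors the observation that the offset stays until the sensor is restarted), and none of it is part of ArduPilot or of this build.

```python
class RangeJumpDetector:
    """Flags a rangefinder as faulty after an implausible jump in distance.

    Hypothetical helper: the threshold and latching behaviour are assumptions.
    """

    def __init__(self, max_step_m=5.0):
        self.max_step_m = max_step_m  # largest believable change per sample
        self.last_good_m = None       # last reading that was accepted
        self.faulted = False          # latched until reset() is called

    def update(self, reading_m):
        """Return True if the reading is trusted, False otherwise."""
        if self.faulted:
            return False
        if (self.last_good_m is not None
                and abs(reading_m - self.last_good_m) > self.max_step_m):
            # A jump this large between samples looks like the offset fault.
            self.faulted = True
            return False
        self.last_good_m = reading_m
        return True

    def reset(self):
        """Call after power-cycling the sensor to start trusting it again."""
        self.last_good_m = None
        self.faulted = False


if __name__ == "__main__":
    detector = RangeJumpDetector(max_step_m=5.0)
    for reading in [2.0, 2.1, 15.1, 15.2]:  # synthetic readings in metres
        print(reading, detector.update(reading))
```

The threshold has to stay above any altitude change the vehicle can really make between two samples, otherwise fast climbs would be rejected as faults.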

PX4Flow Sensor: I found that this sensor works more reliably on its latest firmware with PX4 than on ArduPilot with the only firmware available for it. Most of my tests were performed indoors, where there is naturally less light than outdoors.

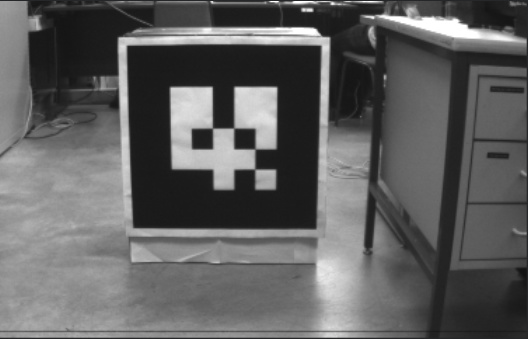

By adopting some code from the newer original firmware, I managed to increase the exposure of the sensor, which changed it from "not working at all indoors on surfaces lacking very vivid features" to "working indoors, but noisier, especially outdoors". To reduce the noise, I used the method suggested in the original Redtail project: a wider lens. After all, we are planning to fly very close to the ground (~2m). We tested the sensor with 5 lenses of different focal lengths (the original 16.0mm, the 6mm recommended by NVIDIA Redtail, and three wider lenses: 3.6mm, 2.8mm, and 1.5mm). The following images show the results for these lenses, in that order. Note that the augmented reality tag is 60x60cm (the black area is 57x57cm) and is placed ~200cm away from the centre of the lens.

Based on our tests, we found the 3.6mm lens to be the most suitable for our work: it eliminated a lot of the noise but still provided very good results up to 10m above the ground in environments with few features.
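To get a feel for what changing the focal length does, here is a pinhole-camera sketch that computes the field of view and the width of ground seen at a given height for the five lenses tested. The focal lengths and the ~2m distance come from the text above. The active sensor width is a placeholder assumption (I do not state the real value here), so only the relative differences between lenses are meaningful.

```python
import math

# Placeholder, NOT a datasheet value: width of the sensor area actually used.
ASSUMED_ACTIVE_WIDTH_MM = 1.5

# Focal lengths of the lenses tested in this post, in millimetres.
FOCAL_LENGTHS_MM = [16.0, 6.0, 3.6, 2.8, 1.5]


def field_of_view_deg(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def ground_footprint_m(focal_length_mm, sensor_width_mm, altitude_m):
    """Width of ground covered when looking straight down from altitude_m."""
    return altitude_m * sensor_width_mm / focal_length_mm


if __name__ == "__main__":
    altitude_m = 2.0  # approximate flying height mentioned in the post
    for f_mm in FOCAL_LENGTHS_MM:
        fov = field_of_view_deg(f_mm, ASSUMED_ACTIVE_WIDTH_MM)
        width = ground_footprint_m(f_mm, ASSUMED_ACTIVE_WIDTH_MM, altitude_m)
        print(f"{f_mm:5.1f} mm  FOV {fov:6.1f} deg  footprint {width:5.2f} m")
```

Halving the focal length doubles the footprint, so a wider lens sees more ground texture per frame, at the cost of each pixel covering a larger patch of ground.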

Sensor calibration was performed as instructed on the ArduCopter PX4Flow page. A small tip: make sure your lidar is calibrated very well before calibrating the optical flow sensor, as this sensor relies heavily on the range sensor measurement. My PX4Flow calibration was much better after I calibrated the rangefinder by changing its scaler to 0.95. Here's the calibration result:

APSync: Not all the APSync scripts for the Jetson TX1 are compatible with the TX2 on Ubuntu 16.04 and JetPack 3.2.1. Some scripts needed changes, and some steps had to be performed manually.

The rest of the issues I encountered were solved with my mentors' help and by searching the ArduPilot community discussions.

PID Tuning: In the original design we had planned to use 6" propellers for this drone. In practice, however, we found it very overpowered and hard to fly, and we could not tune the PID well enough to run auto-tune on it. To overcome this issue we changed the propellers to 5" (5040x4).

We also moved the GPS module from the back of the drone to the front to move the centre of gravity more toward the centre, as the battery pack is mounted on the back. The GPS mounting pole needed to be shortened to eliminate a slight vibration on the GPS module.

NVIDIA Redtail

The second phase of this GSoC project was making the NVIDIA Redtail project compatible with ArduPilot. We have made the necessary changes, and the compatible version can be found in my fork of the project. This version is being tested thoroughly in simulation and seems to be ready to deploy on the drone.

For anyone interested in testing Redtail on ArduPilot, we have prepared a Docker image that installs all the components and compiles the project for you, together with the SITL+Gazebo simulator. I will spare the details here; please refer to the full step-by-step documentation on how to build the Docker image, create a container, and run the full Redtail stack in the Gazebo simulator on a computer equipped with a CUDA-compatible GPU. This code has also been tested successfully on a Jetson TX2 as the main computer. If you decide to test it, any input or bug report is appreciated.

In the following video, we have used a short clip from the SFU Mountain Dataset as the input to the TrailNet DNN. This video was recorded with a Point Grey Firefly camera mounted on a Clearpath Husky UGV. TrailNet calculates the movement command based on the current frame of the video feed. Although the video itself does not follow the commands from the DNN, it is clear that the SITL drone, which is controlled by the TrailNet output, is trying to follow the trail.
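For readers wondering how a per-frame classification can become a movement command, here is a simplified sketch. It assumes the network produces three view-orientation probabilities (left, centre, right) and three lateral-offset probabilities (left, centre, right), and blends them into one steering angle. The function name, the 10 degree gains and the sign convention are hypothetical and are not taken from the Redtail controller code.

```python
import math


def steering_angle_rad(view_probs, lateral_probs,
                       turn_gain_deg=10.0, lateral_gain_deg=10.0):
    """Blend two 3-class outputs into a single steering angle in radians.

    view_probs and lateral_probs are (left, centre, right) probabilities.
    Gains and sign convention are illustrative assumptions.
    """
    view_left, _view_centre, view_right = view_probs
    lat_left, _lat_centre, lat_right = lateral_probs
    angle_deg = (turn_gain_deg * (view_right - view_left)
                 + lateral_gain_deg * (lat_right - lat_left))
    return math.radians(angle_deg)


if __name__ == "__main__":
    # Synthetic example: fairly sure of facing right, roughly centred laterally.
    angle = steering_angle_rad((0.1, 0.2, 0.7), (0.2, 0.6, 0.2))
    print("steering angle (deg):", round(math.degrees(angle), 2))
```

Because the command depends only on the current frame, the same computation works whether the frames come from a live camera or, as in the video, from recorded footage.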

I have to thank my mentors for this project, @jmachuca77, @khancyr, and @rmackay9, for their support, patience, and great feedback.

Update log:

- 06/14/2018: Initial post and idea

- 06/18/2018: Hardware design

- 07/12/2018: NVIDIA Redtail + Hardware Assembly