3D radar integration with ArduCopter 4.1-dev is finally complete. I connected the sensor through a companion computer and am sending the data over MAVLink. The next step is a device driver for direct UART integration with the FCU. Anyone interested in pursuing this can get in touch.

What are the specs of the sensor? At what distance can it detect obstacles?

Good job.

Corrado

It can detect obstacles at up to 60 m, extendable to 80 m, with a 120-degree FOV. It costs about 125 USD and has a super small form factor. Check it out: https://www.ti.com/tool/IWR6843AOPEVM. It packs 3 Tx and 4 Rx antennas into this small chip.

You can certainly develop your own interface, but as described here:

https://ardupilot.org/dev/docs/code-overview-object-avoidance.html#providing-distance-sensor-messages-to-ardupilot

For developers of new “proximity” sensors (i.e. sensors that can somehow provide the distance to nearby objects) the easiest method to get your distance measurements into ardupilot is to send DISTANCE_SENSOR message for each direction the sensor is capable of.
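To illustrate the “one DISTANCE_SENSOR message per direction” approach, here is a minimal sketch that bins detections into the eight horizontal MAV_SENSOR_ROTATION orientations (0 = forward, 1 = yaw 45°, 2 = yaw 90° i.e. right, up to 7 = yaw 315°) and keeps the closest range per orientation, in centimetres as the message’s current_distance field expects. The (bearing, range) input format and the function names are assumptions for illustration; actually sending the messages is left to your MAVLink library.

```python
from typing import Dict, Iterable, Tuple

# MAV_SENSOR_ROTATION values 0..7 cover the horizontal plane in 45-degree steps:
# 0 = forward (NONE), 1 = YAW_45, 2 = YAW_90 (right), ..., 7 = YAW_315.
NUM_SECTORS = 8
SECTOR_WIDTH_DEG = 360.0 / NUM_SECTORS


def bearing_to_orientation(bearing_deg: float) -> int:
    """Map a bearing (degrees, clockwise from forward) to the nearest yaw orientation."""
    # Shift by half a sector so bearings from -22.5 up to (not including) +22.5 map to 0.
    shifted = (bearing_deg + SECTOR_WIDTH_DEG / 2.0) % 360.0
    return int(shifted // SECTOR_WIDTH_DEG)


def closest_per_orientation(detections: Iterable[Tuple[float, float]]) -> Dict[int, int]:
    """Return {orientation: closest distance in cm} for (bearing_deg, range_m) detections."""
    closest: Dict[int, int] = {}
    for bearing_deg, range_m in detections:
        orientation = bearing_to_orientation(bearing_deg)
        distance_cm = int(round(range_m * 100.0))
        if orientation not in closest or distance_cm < closest[orientation]:
            closest[orientation] = distance_cm
    return closest


if __name__ == "__main__":
    # Two returns ahead, one to the right, one behind-left.
    dets = [(5.0, 12.3), (-10.0, 8.0), (88.0, 4.5), (225.0, 20.0)]
    for orientation, cm in sorted(closest_per_orientation(dets).items()):
        # Each entry would become one DISTANCE_SENSOR message.
        print(f"orientation={orientation} current_distance={cm} cm")
```

With a forward-facing sensor with a 120° FOV, only orientations 7, 0 and 1 would ever be populated.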

You need a CC (companion computer) to pre-process the raw radar echoes and probably to run some kind of machine learning for obstacle identification, so using the DISTANCE_SENSOR message makes things a little easier.

…btw, being able to read up to 80 meters makes this option quite interesting.

@ppoirier I actually helped him set up the new OBSTACLE_DISTANCE_3D message, since this sensor gives 3D data, and I believe it’s working fine with the CC.

However, I think we could actually support this sensor directly inside the proximity library (without needing a CC). As far as I can tell (I don’t have the sensor), it returns some sort of filtered obstacle vector along with the obstacle velocity, which is amazing!
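For a direct driver, the first job is parsing what the sensor streams over UART. The sketch below assumes the board runs TI’s out-of-box demo firmware (mmWave SDK 3.x), whose frames, as I understand that SDK, start with a magic word and a 40-byte header followed by TLVs, where TLV type 1 carries x, y, z and radial velocity for each detected point. Treat that layout, the radar axis convention in `radar_to_frd`, and the function names as assumptions to verify against TI’s documentation; this is not ArduPilot’s driver.

```python
import struct
from typing import List, Optional, Tuple

# ASSUMPTION: frame layout of TI's mmWave SDK 3.x out-of-box demo firmware.
# Verify against TI's documentation for the firmware actually flashed on the board.
MAGIC = bytes([2, 1, 4, 3, 6, 5, 8, 7])  # 0x0102 0x0304 0x0506 0x0708, little-endian
# magic, version, totalPacketLen, platform, frameNumber,
# timeCpuCycles, numDetectedObj, numTLVs, subFrameNumber
HEADER_FMT = "<8s8I"
HEADER_LEN = struct.calcsize(HEADER_FMT)  # 40 bytes
TLV_FMT = "<2I"  # TLV type, payload length in bytes
TLV_LEN = struct.calcsize(TLV_FMT)
TLV_DETECTED_POINTS = 1
POINT_FMT = "<4f"  # x, y, z in metres, radial velocity in m/s
POINT_LEN = struct.calcsize(POINT_FMT)

Point = Tuple[float, float, float, float]


def parse_frame(buf: bytes) -> Optional[Tuple[int, List[Point]]]:
    """Parse the first complete frame in buf; return (frame_number, points) or None."""
    start = buf.find(MAGIC)
    if start < 0 or len(buf) - start < HEADER_LEN:
        return None
    (_magic, _version, total_len, _platform, frame_number, _cycles,
     num_obj, num_tlvs, _subframe) = struct.unpack_from(HEADER_FMT, buf, start)
    if len(buf) - start < total_len:
        return None  # frame not fully received yet; read more bytes first
    points: List[Point] = []
    offset = start + HEADER_LEN
    for _ in range(num_tlvs):
        tlv_type, tlv_len = struct.unpack_from(TLV_FMT, buf, offset)
        offset += TLV_LEN
        if tlv_type == TLV_DETECTED_POINTS:
            for i in range(num_obj):
                points.append(struct.unpack_from(POINT_FMT, buf, offset + i * POINT_LEN))
        offset += tlv_len
    return frame_number, points


def radar_to_frd(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """ASSUMPTION: radar axes are x right, y along boresight, z up, with the board
    facing forward on the vehicle. Returns (forward, right, down)."""
    return (y, x, -z)
```

On a Raspberry Pi you would feed `parse_frame` from the sensor’s data serial port and drop consumed bytes from the buffer after each frame; configuring the demo firmware is out of scope here.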

@rishabsingh3003 thanks for the insight.

I looked up the documentation for the new OBSTACLE_DISTANCE_3D message and I am not sure how to use it in the best way.

I am running a YOLO implementation with my ZED stereo camera, and I can retrieve the bounding box plus the mean distance of a detected object. How would I feed this into the object database?

Alternatively, I could provide the point cloud with the x, y, z coordinates of each point, but I don’t think that would make sense, since I would be clogging up the API with that amount of data.

Any further information on how to use the new API would be appreciated.

Hi @mtbsteve.

OBSTACLE_DISTANCE_3D (ArduPilot 4.1 only; you won’t find its documentation in the main MAVLink documentation) works the same way as the other MAVLink messages we support (OBSTACLE_DISTANCE/DISTANCE_SENSOR): ArduPilot automatically processes the incoming data and passes it to the object database and the body-frame boundary.

The body-frame boundary stores the obstacle in body frame, and is used for simple avoidance.

The object database stores the obstacle in earth frame and is used by the path-planning libraries (BendyRuler).

Please note that this is just for your insight; the user of these messages doesn’t need to bother with any of it. In short, just send each detected obstacle’s body-frame {x, y, z} in the FRD coordinate frame, packed in OBSTACLE_DISTANCE_3D along with a timestamp, and let ArduPilot handle the rest!
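A minimal sketch of “send body-frame FRD {x, y, z} with a timestamp”: it converts a point from a camera’s optical frame (x right, y down, z forward, the usual OpenCV convention, assumed here) into FRD and fills in the OBSTACLE_DISTANCE_3D fields as I understand them from the ardupilotmega.xml dialect. The sensor_type value, the untracked obstacle_id and the 0.3 to 20 m range limits are placeholder choices; check the field list against your generated MAVLink dialect.

```python
from dataclasses import asdict, dataclass
from typing import Tuple

MAV_FRAME_BODY_FRD = 12          # MAV_FRAME enum: body-fixed forward-right-down
MAV_DISTANCE_SENSOR_UNKNOWN = 4  # MAV_DISTANCE_SENSOR enum
UINT16_MAX = 65535               # obstacle_id value when objects are not tracked


def optical_to_frd(x_right: float, y_down: float, z_fwd: float) -> Tuple[float, float, float]:
    """Camera optical frame (x right, y down, z forward) -> body FRD (x fwd, y right, z down).
    ASSUMPTION: forward-facing camera aligned with the body axes, mounting offset ignored."""
    return (z_fwd, x_right, y_down)


@dataclass
class ObstacleDistance3D:
    """Fields of OBSTACLE_DISTANCE_3D (#11037) as listed in ardupilotmega.xml."""
    time_boot_ms: int
    sensor_type: int
    frame: int
    obstacle_id: int
    x: float  # metres forward
    y: float  # metres right
    z: float  # metres down
    min_distance: float
    max_distance: float


def make_obstacle_msg(point_cam: Tuple[float, float, float], time_boot_ms: int,
                      min_distance: float = 0.3, max_distance: float = 20.0) -> dict:
    """Build the message fields for one camera-frame point (range limits are placeholders)."""
    x, y, z = optical_to_frd(*point_cam)
    msg = ObstacleDistance3D(time_boot_ms, MAV_DISTANCE_SENSOR_UNKNOWN, MAV_FRAME_BODY_FRD,
                             UINT16_MAX, x, y, z, min_distance, max_distance)
    return asdict(msg)
```

pymavlink’s generated `*_send` methods take the message fields as named arguments, so a dict like this could presumably be passed as `master.mav.obstacle_distance_3d_send(**fields)`; confirm the method exists in your build, since it requires the ArduPilot dialect.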

Make sure you set all the PRX_, AVOID_ and OA_ parameters correctly.

If you have more questions, Discord’s vision-projects channel is a good way to show off your work and ask for help!

@rishabsingh3003 thanks again for getting back to me.

I am looking at the preliminary description of the OBSTACLE_DISTANCE_3D (#11037) message, and I am afraid I am still not getting it.

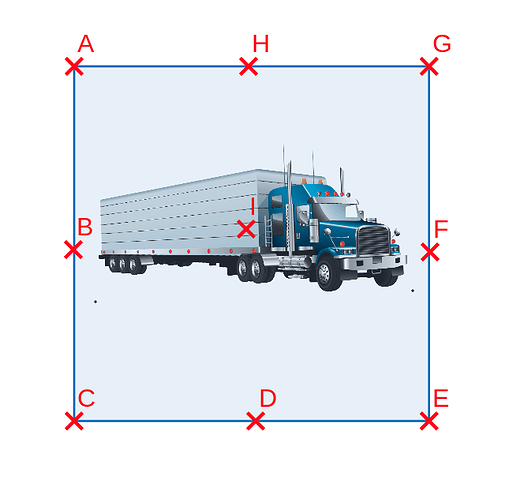

Imagine the sensor detects a truck in front of the drone. What coordinates am I supposed to send over Mavlink?

- bounding box plus depth?

- the outer contour?

- a point cloud?

Just a single x, y, z distance vector, as currently described in the API, doesn’t seem to make sense to me.

By contrast, the current OBSTACLE_DISTANCE message is consistent and works well: I create a SLAM map and send the 72 distance points measured across the scanned angle to the FC.
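For comparison, packing a 2D scan into OBSTACLE_DISTANCE can be sketched as follows: returns are binned into the message’s 72 distances (in cm), each bin keeping its closest return, with UINT16_MAX for bins that have no data and max_distance + 1 for “no obstacle in range”, as the MAVLink message definition specifies. Bin i is centred on angle_offset + i × increment_f. The function name and the (angle, range) input are hypothetical; this is not the poster’s SLAM pipeline.

```python
import math
from typing import Iterable, List, Tuple

NUM_BINS = 72       # size of the OBSTACLE_DISTANCE distances[] array
UINT16_MAX = 65535  # "unknown / not used" marker for a bin


def build_distances(points: Iterable[Tuple[float, float]], angle_offset_deg: float,
                    fov_deg: float, max_cm: int) -> Tuple[List[int], float]:
    """Pack (angle_deg, range_m) returns into distances[72] (cm) and increment_f (deg).

    Angles are clockwise from forward, like the message's angle_offset. Bin i is
    centred on angle_offset_deg + i * increment."""
    increment = fov_deg / NUM_BINS
    distances = [UINT16_MAX] * NUM_BINS
    for angle_deg, range_m in points:
        idx = int(math.floor((angle_deg - angle_offset_deg) / increment + 0.5))
        if not 0 <= idx < NUM_BINS:
            continue  # return lies outside the covered sector
        cm = int(round(range_m * 100.0))
        if cm > max_cm:
            cm = max_cm + 1  # max_distance + 1 means "no obstacle" in this bin
        distances[idx] = min(distances[idx], cm)  # keep the closest return
    return distances, increment
```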

@mtbsteve I think I understand the confusion. I need to improve the documentation of OBSTACLE_DISTANCE_3D.

Anyhow, I hope this clears it up:

Each OBSTACLE_DISTANCE_3D message contains only one obstacle distance vector, but we use multiple messages to get a complete picture of the drone’s environment. Each message has a timestamp. So if you want to send 30 obstacle vectors from one camera frame, all you have to do is send 30 separate OBSTACLE_DISTANCE_3D messages with the same timestamp. As long as all of them are received within a few ms of each other, the ArduPilot backend will treat them as if you had sent all 30 vectors at once.
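The batching rule above, one message per vector and one shared timestamp per camera frame, might look like this on the companion computer. `send` is a placeholder for whatever actually emits an OBSTACLE_DISTANCE_3D message (for example a pymavlink wrapper), and using the companion’s own start time as the time_boot_ms reference is an assumption; the point is only that every vector of a frame carries the same stamp and goes out back-to-back.

```python
import time
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Tuple[float, float, float]  # FRD body-frame obstacle vector in metres
_START = time.monotonic()  # ASSUMPTION: companion start time stands in for "boot"


def time_boot_ms() -> int:
    """Milliseconds since _START, wrapped to the uint32 range of time_boot_ms."""
    return int((time.monotonic() - _START) * 1000) & 0xFFFFFFFF


def send_frame(vectors: Sequence[Vector], send: Callable[[int, Vector], None],
               stamp_ms: Optional[int] = None) -> int:
    """Send all vectors from one camera frame back-to-back with one shared timestamp."""
    stamp = time_boot_ms() if stamp_ms is None else stamp_ms
    for vec in vectors:
        send(stamp, vec)  # one OBSTACLE_DISTANCE_3D message per vector
    return stamp


if __name__ == "__main__":
    log: List[Tuple[int, Vector]] = []
    frame = [(6.0, -1.0, -0.5), (6.0, 0.0, 0.0), (6.0, 1.0, 0.5)]
    send_frame(frame, lambda t, v: log.append((t, v)))
    print(len(log), len({t for t, _ in log}))  # 3 messages, 1 distinct timestamp
```

For the next camera frame, call `send_frame` again; it picks up a new timestamp automatically.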

Let’s take your scenario of a truck in front of the drone. There are many ways you can tackle this. Here is how I would do it:

Since you already know the bounding box, work out the coordinates of points A to I (marked with red crosses) and convert each of them to an FRD body-frame obstacle vector. Then send those 9 vectors, one by one, with the same timestamp. Please note this is just an example: you can send 100 different vectors spread all over the box, as long as your link to the flight controller can handle that traffic. ArduPilot will process and filter them and store the obstacles appropriately for path planning and avoidance. You could even send an entire point cloud, but I don’t think we’d be able to process that many points on standard flight controllers.
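The “points A to I” step can be sketched with a pinhole back-projection, assuming the nine points form a 3 × 3 grid over the box (corners, edge midpoints and centre; the original image is not reproduced here, so that layout is a guess). The intrinsics fx, fy, cx, cy and the single depth for the whole box (e.g. the mean distance YOLO plus the ZED provide, treated as distance along the optical axis) are assumptions, and the function name is hypothetical.

```python
from typing import List, Tuple

Vector = Tuple[float, float, float]  # (forward, right, down) in metres


def bbox_grid_vectors(u_min: float, v_min: float, u_max: float, v_max: float,
                      depth_m: float, fx: float, fy: float, cx: float, cy: float,
                      n: int = 3) -> List[Vector]:
    """Back-project an n x n grid of pixels spanning a bounding box to FRD vectors.

    ASSUMPTIONS: pinhole camera with intrinsics fx, fy, cx, cy (pixels); camera faces
    forward, aligned with the body; one depth along the optical axis for the whole box."""
    if n < 2:
        raise ValueError("need at least a 2 x 2 grid")
    vectors: List[Vector] = []
    for i in range(n):
        v = v_min + (v_max - v_min) * i / (n - 1)      # pixel row, top to bottom
        for j in range(n):
            u = u_min + (u_max - u_min) * j / (n - 1)  # pixel column, left to right
            right = (u - cx) * depth_m / fx            # optical x (right)
            down = (v - cy) * depth_m / fy             # optical y (down)
            vectors.append((depth_m, right, down))     # optical z (forward) = depth
    return vectors
```

Using per-pixel depth from the point cloud instead of one mean depth would place the vectors more accurately on non-flat objects; either way, all nine go out with one shared timestamp.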

When a new frame is available from the camera, repeat these steps and send the 9 vectors again with a new timestamp. You can of course use OBSTACLE_DISTANCE, but that is still a 2D message, and we are constantly trying to make our systems 3D. When we came up with this message a few months back, we thought this was the best way to do it: the user is free to send as many or as few 3D vectors as they want, and we take care of the rest.

I hope that makes sense; please feel free to comment again if you still have doubts.

Support for this sensor is a great addition! I’ve just watched Rishabh’s obstacle avoidance presentation from the dev conference and I’m quite keen to play with it. Yash, which companion computer did you use?

@rishabsingh3003 thanks for the explanation. I also read your blog posts on that matter in the meantime. Well done!

So, as I understand it, I would just send the 3 vectors defining a planar face positioned in front of the obstacle, for maximum performance. I can get this information from the YOLO object detection.

Next question: in one of your blogs you mentioned that the avoidance algorithm automatically adjusts its evaluation of the 3D obstacle database by the current lean angle of the drone. Is that configurable?

I have my ZED camera mounted on a gimbal, so the point cloud is always level, and a further adjustment would then lead to wrong results.

@mtbsteve, regarding the 3 vectors, don’t hesitate to send more than that. We do some initial filtering right at the beginning so that we don’t process redundant vectors.
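The thread doesn’t say how ArduPilot’s initial filtering works, so the following is a swapped-in technique rather than ArduPilot’s: a simple voxel-grid filter you could run on the companion computer to thin out near-duplicate vectors before they use up link bandwidth. It keeps the vector closest to the vehicle in each cubic cell; the 0.5 m cell size is an arbitrary placeholder.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, float, float]  # FRD obstacle vector in metres


def voxel_dedup(vectors: Sequence[Vector], cell_m: float = 0.5) -> List[Vector]:
    """Keep one vector per cubic cell of side cell_m: the one closest to the vehicle."""
    best: Dict[Tuple[int, int, int], Vector] = {}
    for vec in vectors:
        x, y, z = vec
        key = (math.floor(x / cell_m), math.floor(y / cell_m), math.floor(z / cell_m))
        dist_sq = x * x + y * y + z * z
        kept = best.get(key)
        if kept is None or dist_sq < sum(c * c for c in kept):
            best[key] = vec
    return list(best.values())
```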

Yes, for the object database (only used by BendyRuler, not simple avoidance), the lean angle is automatically compensated, so your 3D vector will be rotated by the vehicle’s roll, pitch and yaw. 99% of users have vehicle-mounted lidars, so we didn’t really think about this case earlier…

The good news is that it’s extremely easy to add a parameter to disable this (fewer than 10 lines of code, I think). If you want to make a custom build and add this parameter yourself, I can guide you; otherwise you can wait for me to add it when I have the time, but I can’t promise when.
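To make the lean-angle point concrete, here is a sketch of rotating a body-frame FRD obstacle vector into an earth (NED) frame with the standard ZYX yaw-pitch-roll rotation, which is the kind of transformation described above; it is not ArduPilot’s code, and the object database would additionally offset the result by the vehicle position. The `compensate_lean` flag stands in for the proposed parameter and is hypothetical; with it off, only yaw is applied, which assumes the gimbal levels roll and pitch but follows the vehicle’s heading.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def body_to_earth(vec: Vector, roll: float, pitch: float, yaw: float,
                  compensate_lean: bool = True) -> Vector:
    """Rotate a body-frame FRD vector into NED using the ZYX (yaw-pitch-roll) convention.

    Angles are in radians. compensate_lean is a HYPOTHETICAL stand-in for the proposed
    parameter: when False, roll and pitch are ignored (e.g. a gimbal keeps the sensor
    level) and only yaw is applied."""
    x, y, z = vec
    if compensate_lean:
        # Roll about the body x axis.
        cr, sr = math.cos(roll), math.sin(roll)
        y, z = cr * y - sr * z, sr * y + cr * z
        # Pitch about the intermediate y axis.
        cp, sp = math.cos(pitch), math.sin(pitch)
        x, z = cp * x + sp * z, -sp * x + cp * z
    # Yaw about the vertical (down) axis.
    cy, sy = math.cos(yaw), math.sin(yaw)
    x, y = cy * x - sy * y, sy * x + cy * y
    return (x, y, z)
```

For example, with 10° of nose-up pitch, an obstacle 10 m straight ahead of a body-fixed sensor is placed about 1.74 m above the vehicle (z ≈ -1.74 m). For a gimbal-levelled camera, whose “straight ahead” really is horizontal, that shift is exactly the wrong result described in the question.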

@rishabsingh3003 Thanks. Please add a parameter to disable the automatic lean angle adjustment.

The way it’s currently implemented makes sense if you use, e.g., a bulky 360° lidar that can’t be mounted on a gimbal.

But when you use a stereo camera like a ZED or RealSense, you are better off compensating for the lean angle during image processing. Those cameras have a vertical FOV of 60 degrees or more, so it is easy to calculate exact distances from the point cloud on the fly for lean angles of up to 30 degrees, even when no gimbal is used.

Doing this in the FC’s database, on a small subset of the data available from the camera, should be the last resort, used only when the sensor can’t provide lean-angle-independent data.

Edit: Thien has implemented the lean angle compensation nicely for the RealSense camera here: https://github.com/thien94/vision_to_mavros/blob/master/scripts/d4xx_to_mavlink.py

I have tested the setup on a Raspberry Pi 4 and a Jetson Nano, and both worked well. I’m working on the documentation and will post an update soon with screenshots and the configuration after testing and validation. Progress is currently stuck due to COVID-related restrictions.

This is terrific! mmWave radars are great when weather is a problem for laser-based sensors and cameras. Is there a GitHub repository or another website where I can see the code? We did some preliminary work with this technology but stopped because it was not supported in ArduCopter. If so, we would like to collaborate, since this technology is very common in modern cars for features such as adaptive cruise control.

Any updates to this project or possibly direct firmware support? I’m looking at mmWave radar as a possible solution for Rover obstacle avoidance, and this seems promising!

I found this GitHub repo, which I assume belongs to the OP. Failing any response here, it will at least provide an excellent starting point for my own dive into the topic.

@Yuri_Rage Yash and I worked on this last summer, but I don’t think either of us has made any progress since. Getting direct support won’t be too hard, but I don’t have this sensor to try it out. For now, this one needs a companion computer…

That’s what I expected, Rishabh. I will have a couple of them on hand this week and am willing to get involved with writing a driver if you are busy on other projects. Initial testing will be via RPi and serial comms.

Great presentation on OA earlier this year, by the way!

I’ll be happy to help you! It’ll be a fun project. I don’t have one in hand, so I won’t be able to write the driver directly. Feel free to reach out to me directly if you need help.