I would be surprised if the noise were a problem at say 300ft but I have no idea exactly how loud the generator would be. My quads and hex get pretty quiet at 200ft away.

Not just the generator but also 8 props turning really fast. In the bush at night you will be amazed at what you can hear.

I do wish you the best of luck, it really isn’t an easy task. We had many other obstacles to overcome that I haven’t mentioned, like aircraft and system reliability (a big challenge, but one that can be overcome). Enjoy, it should be fun.

Thanks, under no illusions as to how difficult this road is going to be and what this will cost to implement.

Will follow with interest, please keep us updated.

Will definitely do so. Whatever this costs, we have to try everything we can, otherwise soon the only rhino may be in museums.

@panascape We (http://www.ardupilotinitiative.com/whoweare/) have previous experience working in Africa using ArduPlane and machine vision systems to autonomously identify elephants and poachers: http://elephantswithoutborders.org/what-we-do/surveys/

I’d be very happy to have a chat with you Robert and see if there is an opportunity to collaborate.

Hi Craig, that would be great, thanks. I will message you with my email.

VERY interesting. I eagerly await further test results. I have other ideas of what to do with poachers, but will not comment on them here.

The biggest hole we still need to patch is getting a UAV with a camera to take up station above an acquired target and track it. We have the ability to identify targets, but we don’t have the ability to move the camera to keep them in frame or to position the UAV.

There seem to be a number of projects built around keeping an object in frame using a pan and tilt camera, but keeping the UAV in position seems to be more elusive. There are two other anti-poaching UAV projects I know of and, after having chatted to them, they too haven’t figured this out and will leave it till later, which is a really bad idea.

So here is my idea. I would love your input, and if any of you want to help implement it, the assistance would be most welcome.

This would rely on using a fixed wing UAV and a 2-3 axis gimbal that can provide information about its angle of pan or tilt. If we don’t have such a gimbal, we would need code that we could use to calibrate the pan and tilt and then accurately keep a record of the angle we have moved it to.

If we fly our patrol pattern with the gimbal panned 45° from the nose and tilted 45° down (it could be a different tilt angle), then once we acquire a target we can do a very rough calculation of its position from the height above ground via LIDAR and the angle of the gimbal. I know this won’t be accurate, but it doesn’t need to be.
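To make the rough calculation above concrete, here is a minimal sketch. It assumes flat ground, wings-level flight (aircraft roll and pitch are ignored), pan measured clockwise from the nose, and tilt measured downward from the horizon. The function name `target_offset` and its parameters are made up for illustration and are not part of ArduPilot or any gimbal API.

```python
import math

def target_offset(height_m, tilt_down_deg, pan_deg, heading_deg):
    """Rough (north_m, east_m) offset of the target from the aircraft.

    Assumptions (hypothetical, for illustration only): flat ground,
    level flight, pan clockwise from the nose, tilt down from horizon.
    """
    if not 0 < tilt_down_deg <= 90:
        raise ValueError("tilt must be in (0, 90] degrees below the horizon")
    # Horizontal distance to the point where the camera axis meets the ground.
    ground_range = height_m / math.tan(math.radians(tilt_down_deg))
    # Compass bearing from the aircraft to the target.
    bearing = math.radians((heading_deg + pan_deg) % 360)
    return ground_range * math.cos(bearing), ground_range * math.sin(bearing)

# Example: 100 m above ground, gimbal 45° down and 45° right of the nose,
# aircraft heading due north.
north, east = target_offset(100.0, 45.0, 45.0, 0.0)
print(f"target is about {north:.0f} m north and {east:.0f} m east of the aircraft")
```

Adding this offset to the aircraft's own GPS position gives a first guess at the target position. At shallow tilt angles small angle errors turn into large range errors, which is one reason the estimate is only rough.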

As we keep flying, the gimbal will track towards 90° to the nose and we start to build a rough loiter circle, estimated for when the gimbal is at around 90°. Once we get close to 90° we initiate the loiter circle.

Now our first estimate will be off, but we use various predefined points on the circle to make calculations based on height and gimbal angle, and we use these to refine the centre of loiter while the gimbal tracking keeps the target in frame. Once we have the centre within an acceptable tolerance, we will use the same method to move the centre of loiter as the targets move.
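One simple way to do the refinement described above is to average the target position estimates taken at each predefined point on the circle and stop refining once the centre stops moving by more than a tolerance. The sketch below is only an illustration of that idea under assumed values; the class `LoiterCentreEstimator`, the 20 m tolerance and the minimum of four samples are all hypothetical choices, not something from our spec.

```python
import math

class LoiterCentreEstimator:
    """Running mean of target position estimates (hypothetical helper)."""

    def __init__(self, tolerance_m=20.0, min_samples=4):
        self.tolerance_m = tolerance_m  # assumed acceptable centre shift
        self.min_samples = min_samples  # assumed minimum fixes before trusting it
        self.n = 0
        self.north = 0.0
        self.east = 0.0
        self.last_shift_m = float("inf")

    def update(self, est_north, est_east):
        """Fold in one estimate and return how far the centre moved (m)."""
        self.n += 1
        d_north = (est_north - self.north) / self.n
        d_east = (est_east - self.east) / self.n
        self.north += d_north
        self.east += d_east
        self.last_shift_m = math.hypot(d_north, d_east)
        return self.last_shift_m

    def converged(self):
        """True once enough fixes are in and the centre has settled."""
        return self.n >= self.min_samples and self.last_shift_m <= self.tolerance_m

# Four noisy estimates (metres north/east of a local origin), one per
# predefined point on the loiter circle.
est = LoiterCentreEstimator()
for fix in [(110.0, 0.0), (90.0, 0.0), (100.0, 10.0), (100.0, -10.0)]:
    est.update(*fix)
print(est.north, est.east, est.converged())
```

A plain mean reacts slowly once the target starts moving, so for following a walking target a weighted average that favours recent fixes would probably be a better fit.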

This may not work for, say, tracking a car, but it should work for a human on foot, especially one who doesn’t know they have been detected. Once we have established a good centre of loiter, tracking them if they run should be far easier.

What do you all think?

If I am not wrong, the Solo’s i.MX6 has an object tracking feature in one of the smart shots. I think Tarot and Storm32 gimbals are supported. You could salvage a Solo i.MX6 and get your work done.

Nitesh

thanks, checking it out now

How’s it going Robert? @panascape

So far so good. We made some fundamental changes to the propulsion system to increase vertical lift power and reduce drag, and should be able to test these soon. The camera tracking and drone positioning is fully specced and we have a team looking to implement this soon. The aim is that the UAV will begin taking part in exercises at the reserve at the end of March so that the image recognition system can be further refined.

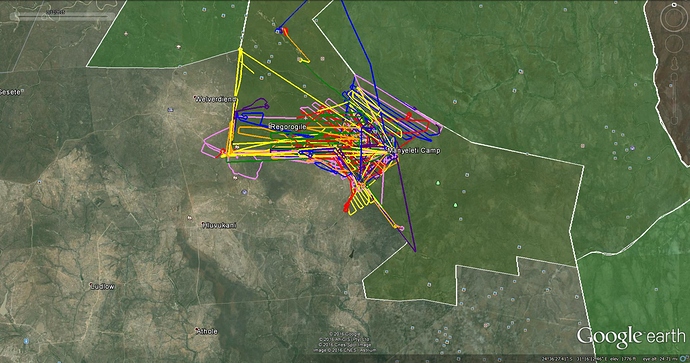

Further to your PM: we did try to prioritize our flights over borders and fences. I flew the Pretoriuskop fenceline south down to Luphisi in the Stolsnek section every night for 8 weeks. Pic shows a few of our flight paths:

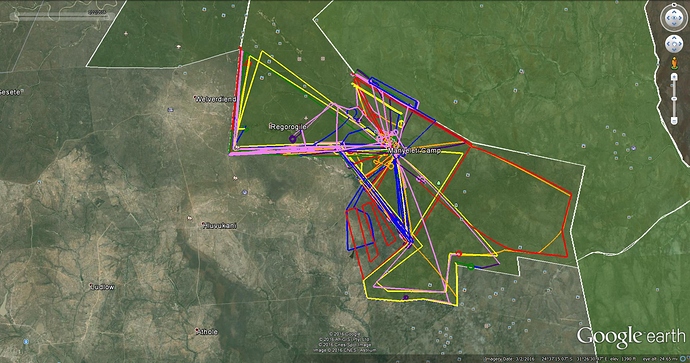

We then moved to Phabeni gate and flew the Sabie river from Mkhuhlu to Belfast for 4 weeks, then on to Manyeleti, where we flew borders, fencelines and villages extensively.

These pics below show some flights done in 2015 & 2016, as you can see a “few” flights were conducted. Not seeing any poachers was certainly not for lack of trying.

regards

It’s certainly going to be interesting when we are up there and if we don’t get it right, hopefully we can learn why and make corrections.

Just a progress update.

We have finalised the prototype design as a tiltrotor Skywalker X8. For now only the front motors will tilt and the rear will be fixed, until we can find 15x5 CW/CCW props that would feather backwards when the rear motors tilt backwards. We are using Lobot LD-220MG servos, with custom mounting brackets, for the tilt mechanism.

Motors will be 4 x T-Motor 3515 400KV with 15x5 propellers providing a total of 9.8kg thrust.

Battery is a 6S Li-Ion, 32000mAh, 120A.

We have also figured out the autonomous tracking system, which will consist of two onboard computers: one to control the image identification and gimbal tracking, and a second to calculate the coordinates of the target and ensure that the UAV can remain on station above it. We opted for two computers to ensure we have enough processing power and so that if one fails we only lose one aspect of the system, which can easily be picked up by a pilot, whereas camera tracking and flight control become tricky if you have to manage both manually. UAV positioning will be a Pi 3B+ running Maverick, and the image identification computer will either be the same or a TX2, with Intel Movidius Neural Net USB sticks.

Have to thank Craig Elder and Fnoop for always pointing us in the right direction.

Don’t forget to document the build so writing the SACAA maintenance manual will be easier.

Fully documented in great detail so that the build can easily be replicated to build additional units.

They will need it for that manual