Sorry About “almost the same but I just can’t understand…”

I have some question about External Pose Estimation. The situation goes as follows:

I use a 2D lidar mounted on a gimbal for indoor positioning, an algorithm is written by me to get the position of my quad (not pose). And MAVROS is used to publish the position data into Pixhawk with the mavros/vision_pose/pose topic.

The accuracy is verified. I think Under no circumstances will its data have a sudden change above 1 meter.

I took a reference to Tuning Loiter on copter using external position estimate (i.e. NonGPS). In this issue, anbello confirms it did work with this way, i.e., using mavros/vision_pose/pose topic.

I changed my parameter as he said, such as turning off GPS, and modifying positioning accuracy (I think my algorithm has the accuracy), also I sent home position to Pixhawk, GPS grid is my city, Xiamen.

But the problem is, the quad is not under control. I can successfully get into Loiter mode, but initially, the quad go down as if it haven’t had enough lift, when I put up throttle, it just lean forward of backward with a large pitch value then crush.

Above all, I have some questions:

1 in the mavros/vision_pose/pose topic, what kind of (x, y, z) grid should I publish to flight controller?

** Is it body frame or earth frame. What does it look like? If it’s body frame, is X points toward the quad;s front, **

** as well as Y points toward right? If it’s earth frame, is it NED or ENU?**

I’ve looked up MAVLink XML, it says it’s global frame. So I used NED for this.

while(ros::ok()) {

if (is_ctrl_rc_updated) {

// Vision

vision_pose.header.stamp = ros::Time::now();

vision_pose.pose.position.x = -x_body / 100.; // y_body / 100. // x_body

vision_pose.pose.position.y = y_body / 100.; // -x_body / 100. // -y_body

vision_pose.pose.position.z = alt_global;

vision_pose.pose.orientation.x = current_imu_raw.orientation.x;

vision_pose.pose.orientation.y = current_imu_raw.orientation.y;

vision_pose.pose.orientation.z = current_imu_raw.orientation.z;

vision_pose.pose.orientation.w = current_imu_raw.orientation.w;

vision_pos_pub.publish(vision_pose);

// Fake GPS/ENU

vision_pose.pose.position.x = -x_gnd / 100.; // lat

vision_pose.pose.position.y = y_gnd / 100.; // lon

//vision_pos_pub_mocap.publish(vision_pose);

cout << "x_body: " << vision_pose.pose.position.x << "\t y_body:: " << vision_pose.pose.position.y << endl;

is_ctrl_rc_updated = false;

}

last_request = ros::Time::now();

ros::spinOnce();

rate.sleep();

}

I used a crude way to set orientation and make it NED.

vision_pose.pose.position.x = -x_body / 100.;

vision_pose.pose.position.y = y_body / 100.;

2 Is there any detials I missed for vision position inject?

I think there are only three steps:

1 Set parameters in the flight controller, for example turning off GPS, BUT the visual odometry is no need to

use in this situation.

2 Publish home position to flight controller.

3 Publish vision pose to flight controller. In this step, I have another question, is quaternion necessery for

the dataframe? If I just subscribe it from flight controller and publish it back, is there something bad

happening?

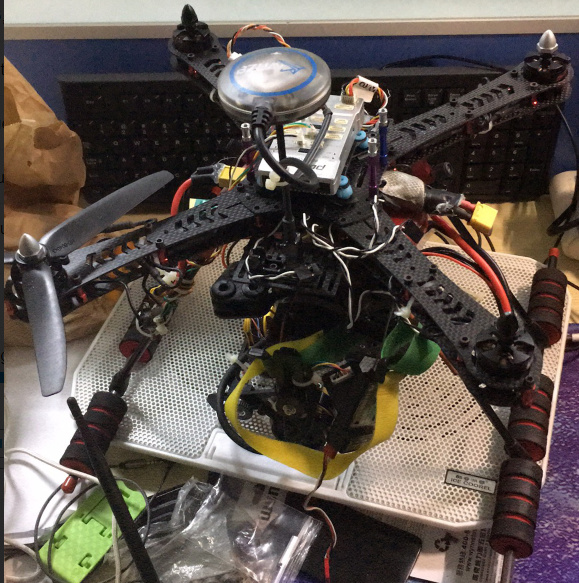

This is my quad, a DYS gimbal is installed and the lidar is mounted on the gimbal.

Sorry about my English and the markdown grammar, but help is really needed