As an experiment in autonomous navigation with ArduPilot, I built a rover capable of autonomous path following.

It uses semantic segmentation, a machine-learning technique, to determine where the desired path is in a live video feed. Running on a Jetson Nano, the software generates a relative bearing that keeps the rover on the path. This bearing is sent to ArduPilot, which (in GUIDED mode) turns the rover to that bearing.

It’s a good example of using a companion computer to do the vision processing and high-level planning, leaving ArduPilot with the lower-level vehicle control.

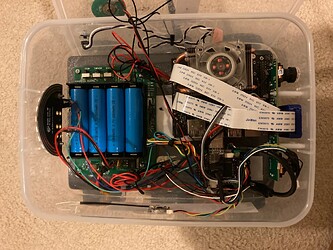

Hardware used

- Bogie Runt Rover chassis

- Roverbase power and motor controller board

- Jetson Nano with Pi Camera V2

- mRO X2.1-777 flight controller (any controller will generally work)

- Ublox M8N GPS

The flight controller and Jetson were connected via UART, for MAVLink telemetry.

The layout is shown below:

Note: A stereo camera was fitted, but not used.

Software layout

All processing was done in real time on the Jetson, which would send out MAVLink messages (with desired speed and heading) to ArduPilot.

When ArduPilot was in GUIDED mode, it would move the rover as per the MAVLink messages.

The Rpanion-server software was used to manage telemetry streams, with the custom “segmav” software to process the video stream and output a desired heading and speed. Note the speed was hard-set at 0.5 m/s.

Segmav software

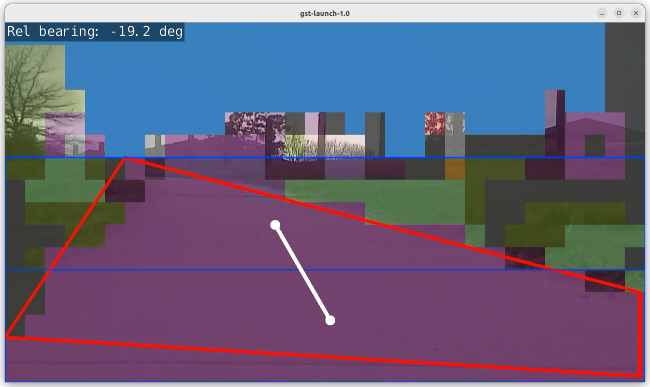

The segmav software is designed to use Semantic Segmentation to determine the correct heading for a rover to stay on a road or path.

Segmav uses the following steps during processing:

- Semantic segmentation is first used to separate the captured image into category areas: “Sky, Grass, Road, …”.

- The largest area (contour) with a “road” category (purple) is then found (red polygon).

- This contour is then split into two - halfway in the horizontal plane (blue boxes).

- The centroid of each half is calculated (white points).

- A line is drawn between the two points and the angle calculated (white line).

- The angle is put through a 3 point moving average to smooth out any large changes.

- This angle (yaw) is encoded into a MAVLink SET_POSITION_TARGET_LOCAL_NED message, in the Body (relative) frame, with the forward velocity and yaw components set. The velocity component is hard-set to 0.5 m/s.
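The geometry in the steps above can be sketched in a few lines of plain Python. This is only an illustration, not the segmav code itself: it assumes the road has already been reduced to a single binary mask (so the contour search is skipped), it assumes the split is into an upper (far) and lower (near) half of the road area, and the names `path_angle_deg` and `MovingAverage` are made up for this example.

```python
import math
from collections import deque


def centroid(points):
    """Mean (x, y) of a list of pixel coordinates."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def path_angle_deg(mask):
    """Angle of the path relative to straight ahead, in degrees.

    mask is a list of rows (row 0 is the top of the image) holding 0/1
    values, where 1 marks a pixel classified as road. A positive result
    means the path veers to the right. Returns None if no usable road
    area is present.
    """
    road = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not road:
        return None
    ys = [y for _, y in road]
    mid = (min(ys) + max(ys)) / 2.0
    far = [p for p in road if p[1] <= mid]   # upper half of the road area
    near = [p for p in road if p[1] > mid]   # lower half, closest to the rover
    if not far or not near:
        return None
    fx, fy = centroid(far)
    nx, ny = centroid(near)
    # Image y grows downward, so the forward distance is ny - fy.
    return math.degrees(math.atan2(fx - nx, ny - fy))


class MovingAverage:
    """Average of the most recent `size` samples (3 by default)."""

    def __init__(self, size=3):
        self.samples = deque(maxlen=size)

    def update(self, value):
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)
```

A plain arithmetic mean is adequate here because the angles stay close to zero; it would not handle wrap-around at ±180 degrees.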

The segmav source code is available from GitHub.
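For readers who want to see what such a SET_POSITION_TARGET_LOCAL_NED message might contain, here is a rough sketch that builds the field values. The helper name `build_target` is hypothetical, the choice of `MAV_FRAME_BODY_OFFSET_NED` as the body frame and the default system/component IDs are assumptions, and the real segmav code may differ. The type mask tells the autopilot to ignore the position, acceleration and yaw-rate fields, leaving velocity and yaw.

```python
import math

# Bit values from the MAVLink POSITION_TARGET_TYPEMASK enum.
IGNORE_PX, IGNORE_PY, IGNORE_PZ = 1, 2, 4
IGNORE_AX, IGNORE_AY, IGNORE_AZ = 64, 128, 256
IGNORE_YAW_RATE = 2048

# Assumption: body-relative frame (value 9 in the MAV_FRAME enum).
MAV_FRAME_BODY_OFFSET_NED = 9

# Use only the velocity and yaw fields (decimal 2503, hex 0x09C7).
TYPE_MASK_VEL_YAW = (
    IGNORE_PX | IGNORE_PY | IGNORE_PZ
    | IGNORE_AX | IGNORE_AY | IGNORE_AZ
    | IGNORE_YAW_RATE
)


def build_target(yaw_deg, speed=0.5, time_boot_ms=0,
                 target_system=1, target_component=1):
    """Return the 16 message fields, in MAVLink field order.

    yaw_deg is the relative bearing in degrees; speed is the forward
    velocity in m/s (0.5 m/s in this project).
    """
    return (
        time_boot_ms, target_system, target_component,
        MAV_FRAME_BODY_OFFSET_NED, TYPE_MASK_VEL_YAW,
        0.0, 0.0, 0.0,              # x, y, z position (ignored)
        speed, 0.0, 0.0,            # vx (forward), vy, vz
        0.0, 0.0, 0.0,              # accelerations (ignored)
        math.radians(yaw_deg),      # yaw, in radians
        0.0,                        # yaw rate (ignored)
    )
```

With pymavlink, these fields could then be sent as `master.mav.set_position_target_local_ned_send(*build_target(yaw))`, where `master` is a connection opened with `mavutil.mavlink_connection(...)`.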

Performance

I could get 7 fps using the fcn-resnet18-cityscapes-1024x512 model, with less than 300 ms latency. This latency was low enough at speeds of 0.5 m/s. For higher vehicle speeds, a faster Jetson (or a smaller model) would be required.
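To put those numbers in perspective, a quick back-of-the-envelope calculation shows how far the rover moves before a new heading can take effect. The function names are made up for this example, and 300 ms is treated as an upper bound on latency.

```python
def distance_during_latency_m(speed_mps, latency_s):
    """Distance travelled while one frame is still being processed."""
    return speed_mps * latency_s


def distance_per_frame_m(speed_mps, fps):
    """Distance travelled between consecutive heading updates."""
    return speed_mps / fps


blind_m = distance_during_latency_m(0.5, 0.3)   # about 0.15 m
step_m = distance_per_frame_m(0.5, 7)           # about 0.07 m
```

At 0.5 m/s the rover therefore reacts to what it saw at most about 15 cm ago, and gets a fresh heading roughly every 7 cm. Both distances grow in proportion to speed, which is why faster driving would need a higher frame rate or lower latency.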

A video demonstration is available below:

Issues

The accuracy was not great. The rover would occasionally dive off the path due to an incorrect segmentation. A better-tuned or larger dataset would likely reduce how often this occurs.

The Runt Rover frame itself wasn’t good over grassy terrain. When running over grass, it would frequently get bogged.

Conclusions

This project is a good proof of concept for using semantic-segmentation-based navigation to follow paths or tracks. Better datasets and a more powerful Jetson could make the system reliable enough for general use.