I've been looking more at the MAVROS side of the implementation details, and here's what I found. Please correct me if anything is wrong:

- Manually fly the drone until the target is within the camera's field of view (FOV).

- Put the drone in GUIDED mode by calling the `mavros/set_mode` service: `serviceClient<mavros_msgs::SetMode>("mavros/set_mode")`

- Start sending waypoints to the drone using

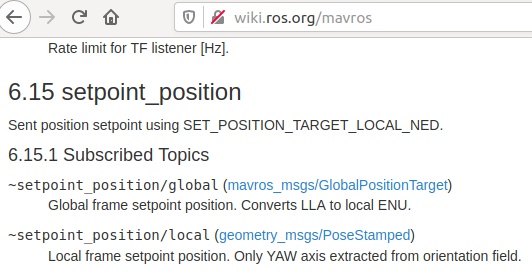

SET_POSITION_TARGET_LOCAL_NED

by publishing to topicmavros/setpoint_position/global - The above message is of GlobalPositionTarget type, which I will populate with waypoint lat, lon, alt.

Question: assuming the above is correct, can I populate that message with only a velocity (the `velocity` field, a `geometry_msgs/Vector3`) instead of lat, lon, and alt?

Link to MAVROS setpoint_position:

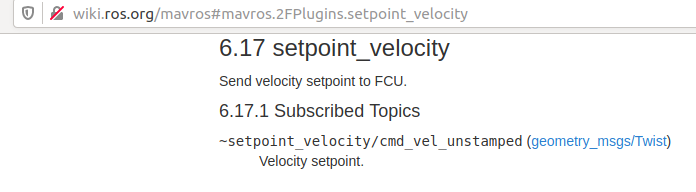

Or should I use `setpoint_velocity` instead?