We now have a new version of APSync with APStreamline built in for the Raspberry Pi! This version of APSync is built with the latest version of Raspbian (June 2018) and is now compatible with the Raspberry Pi 3B+ out of the box.

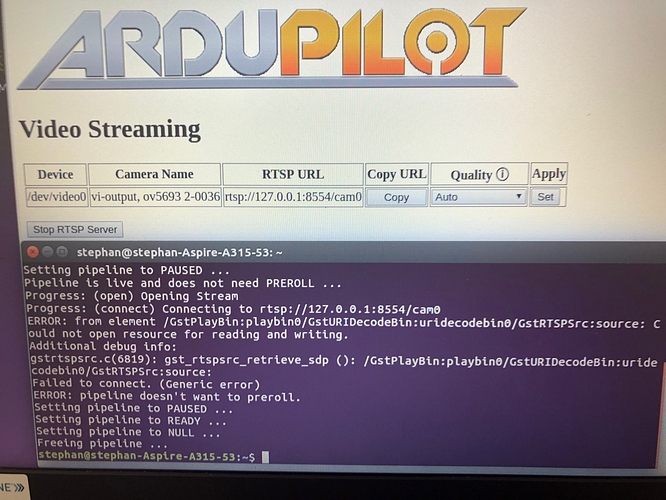

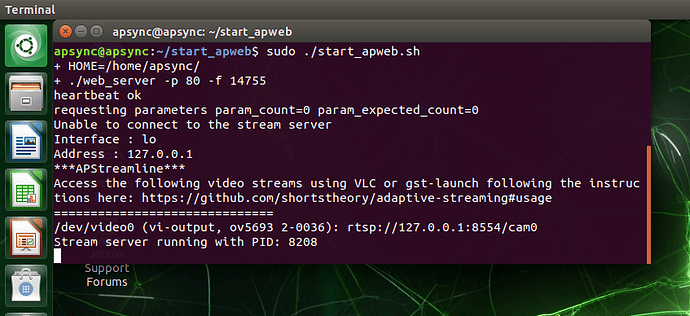

APStreamline adds several improvements for live video streaming from UAVs and other ArduPilot robots. It is available directly under the ‘Video Streaming’ tab of the APWeb configuration page, served at 10.0.1.128:80 on the Companion Computer. APStreamline has the following features:

- Automatic quality selection based on bandwidth and packet loss estimates

- Selection of network interfaces to stream the video

- Options to record the live-streamed video feed to the companion computer

- Manual control over resolution and frame rate

- Multiple camera support using RTSP

- Hardware-accelerated H.264 encoding for the Raspberry Pi

- Camera settings configurable through the APWeb GUI

- Compatible with the Pi camera and several USB cameras, such as the Logitech C920
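This post does not describe how APStreamline's automatic quality selection works internally. As a rough illustration of the general idea only, here is a minimal sketch of a step-down/step-up heuristic driven by packet loss and bandwidth estimates. The quality ladder, the thresholds, and the `next_level` function are all hypothetical and are not APStreamline's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityLevel:
    name: str
    width: int
    height: int
    bitrate_kbps: int


# Hypothetical quality ladder, ordered from lowest to highest quality.
LEVELS = [
    QualityLevel("low", 320, 240, 500),
    QualityLevel("medium", 640, 480, 1500),
    QualityLevel("high", 1280, 720, 3000),
]

LOSS_DOWNGRADE = 0.05  # hypothetical: step down above 5% packet loss
LOSS_UPGRADE = 0.01    # hypothetical: only consider stepping up below 1% loss
HEADROOM = 1.2         # hypothetical: require 20% spare bandwidth to step up


def next_level(current: int, packet_loss: float, bandwidth_kbps: float) -> int:
    """Return the index into LEVELS to use for the next measurement interval."""
    # Step down one level if the link is lossy or cannot carry the current bitrate.
    if packet_loss > LOSS_DOWNGRADE or bandwidth_kbps < LEVELS[current].bitrate_kbps:
        return max(current - 1, 0)
    # Step up one level only when loss is low and there is clear bandwidth headroom.
    if current + 1 < len(LEVELS) and packet_loss < LOSS_UPGRADE:
        if bandwidth_kbps >= LEVELS[current + 1].bitrate_kbps * HEADROOM:
            return current + 1
    # Otherwise keep the current quality.
    return current
```

Moving one level at a time and requiring headroom before upgrading keeps the stream from oscillating between resolutions when the link estimate hovers near a bitrate boundary.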

APStreamline doesn’t have any strict hardware requirements and can work with WiFi routers, WiFi dongles, and even over LTE with the right hardware.

Download APSync with APStreamline built in from here (Raspberry Pi only)!

Please note that since this is a beta release, there may be bugs in this image. For bug reports or suggestions, please comment on my pull request here.
