Hi all,

I would like to show you my approach to indoor navigation using an Intel RealSense T265.

I scoured the internet and this forum trying to implement indoor navigation using VISION_POSITION_ESTIMATE, but that did not lead anywhere: I kept getting compass-related errors.

So I switched to another MAVLink message, ATT_POS_MOCAP, which carries motion-capture data. This work is based on chobitsfan's work with the OptiTrack system, which is very expensive. He sent the x, y and z position plus an attitude quaternion, which is exactly the information my RealSense can provide. Here is a video of a test flight at home:
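For anyone trying the same approach: the T265 reports its pose in its own frame (X to the right, Y up, Z pointing backward out of the device), while ArduPilot expects NED-style axes. The sketch below is not the author's script, which uses its own axis mapping inside `send_mocap`; it is one possible conversion, assuming the camera is mounted upright and facing forward, and assuming "north" simply means the camera's initial forward direction rather than true north. `t265_to_ned` is a hypothetical helper; in pyrealsense2 its inputs would come from the pose data's `translation` and `rotation` fields. Because the axis remap is itself a proper rotation, the quaternion's scalar part stays the same and its vector part is remapped exactly like the position.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def t265_to_ned(position: Vec3, quat: Quat) -> Tuple[Vec3, Quat]:
    """Remap a T265 pose (X right, Y up, Z back) to NED-style axes.

    Assumes a forward-facing, upright camera, so:
      north (forward) = -Z_t265, east (right) = X_t265, down = -Y_t265.
    """
    px, py, pz = position
    qw, qx, qy, qz = quat
    ned_position = (-pz, px, -py)
    # The remap M is a proper rotation (det = +1), so q_ned = q_M * q * q_M^-1:
    # the scalar part is unchanged and the vector part is remapped like a position.
    ned_quat = (qw, -qz, qx, -qy)
    return ned_position, ned_quat
```

For example, moving the camera 1 m forward (T265 z = -1) yields NED x = +1, and a yaw about the T265 "up" axis becomes a yaw of opposite sign about the NED "down" axis, as expected.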

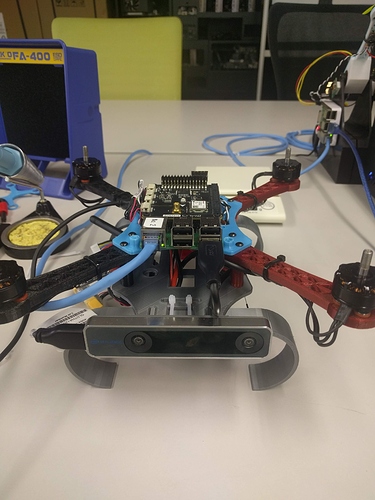

The setup is a 3D-printed frame with a Raspberry Pi connected to the RealSense T265 and a Navio2 board.

The script is very simple: a while loop that keeps sending ATT_POS_MOCAP messages using DroneKit:

```python
import time

# `vehicle` is the DroneKit Vehicle object returned by dronekit.connect(...)


def send_mocap(quat, y, x, z):
    msg = vehicle.message_factory.att_pos_mocap_encode(
        int(round(time.time() * 1000000)),  # us Timestamp (UNIX time or time since system boot)
        quat,  # attitude quaternion (w, x, y, z)
        -x,    # Global X position
        y,     # Global Y position
        z,     # Global Z position
        # 0,   # covariance: upper right triangle (states: x, y, z, roll, pitch, yaw)
        # 0,   # reset_counter: estimate reset counter
    )
    vehicle.send_mavlink(msg)
    vehicle.flush()
```
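The post shows only the message-building function, not the while loop around it. A minimal, hardware-free sketch of such a loop might look like this. `stream_poses`, `get_pose` and `send` are hypothetical names: `get_pose` stands in for whatever reads the T265 (returning `None` when no new pose is available), and `send` would wrap `send_mocap` with your own axis mapping. The 30 Hz default is an arbitrary example value; pacing to a fixed rate keeps the stream steady instead of sending as fast as the loop can spin.

```python
import time
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical pose type: (quaternion (w, x, y, z), position (x, y, z))
Pose = Tuple[Sequence[float], Sequence[float]]


def stream_poses(
    get_pose: Callable[[], Optional[Pose]],
    send: Callable[[Sequence[float], Sequence[float]], None],
    rate_hz: float = 30.0,
    max_messages: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Forward poses from get_pose() to send() at roughly rate_hz.

    Runs forever when max_messages is None; returns the number of poses sent.
    """
    period = 1.0 / rate_hz
    sent = 0
    while max_messages is None or sent < max_messages:
        start = time.monotonic()
        pose = get_pose()
        if pose is not None:  # skip iterations with no fresh pose
            quat, position = pose
            send(quat, position)
            sent += 1
        # Sleep for whatever is left of this period to hold a steady rate.
        remaining = period - (time.monotonic() - start)
        if remaining > 0:
            sleep(remaining)
    return sent
```

Passing `sleep=lambda s: None` and a finite `max_messages` makes the loop easy to exercise without a camera or flight controller.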

The heaviest task is installing the RealSense SDK (librealsense) and its software on the Raspberry Pi.

The flight is nowhere near perfect, but the drone definitely knows where it has to go back to in order to hold its position. The small drift could be due to the small room, PID tuning, or time synchronization between the companion computer and the camera. I'm not sure how to make it perfect, and I would like your opinion on this. I used chobitsfan's 3.7-dev branch, which includes an EK2_EXTNAV_DELAY parameter, and I also reduced the position variance used by the EKF. Any comments and questions are welcome!

I will upload the parameters, the log and the whole Python script tomorrow, I promise.

Cheers, Ali