Btw, are you able to hover above the aruco marker now?

This weekend I had some time to do some tests, and now I have the quad hovering above the Aruco marker. It is not very stable yet: I still have to optimize both the ArduCopter parameters and the pose estimation from the markers. Anyway, I have the quadcopter holding position over the marker in Loiter mode fairly well.

Is the pose obtained from the Aruco marker noisy for you? For me it looks really noisy, and if I filter it, the filtering adds more delay to the pose estimation.
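To make the noise-versus-delay trade-off concrete, here is a minimal sketch using an exponential moving average. The filter choice and the `alpha` values are assumptions for illustration only; nothing in this thread says which filter was actually used.

```python
def ema_filter(samples, alpha):
    """Exponential moving average: y[k] = alpha*x[k] + (1 - alpha)*y[k-1]."""
    filtered = []
    state = samples[0]
    for x in samples:
        state = alpha * x + (1.0 - alpha) * state
        filtered.append(state)
    return filtered


def samples_to_reach(filtered, target, fraction=0.9):
    """Index of the first output that reaches `fraction` of `target`, or None."""
    for i, y in enumerate(filtered):
        if y >= fraction * target:
            return i
    return None


# A unit step at sample 5: the measured position jumps from 0 m to 1 m.
STEP_INDEX = 5
step = [0.0] * STEP_INDEX + [1.0] * 60

for alpha in (0.8, 0.3, 0.1):  # hypothetical smoothing factors
    out = ema_filter(step, alpha)
    lag = samples_to_reach(out, 1.0) - STEP_INDEX
    print(f"alpha={alpha}: {lag} extra samples to reach 90% of the step")
```

A smaller `alpha` smooths more but takes more samples to follow a real change, and at a 10 Hz pose rate every extra sample of lag costs 0.1 s.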

@anbello What is the frequency of the topic /mavros/vision_position/pose that is coming out of your aruco_mapping package? For me it is about 10 Hz, isn’t it too low?

I get nearly 30 Hz on the /mavros/vision_position/pose topic, the same as on /camera/image_raw, which matches the frame rate of the video stream from the Raspberry Pi on the quadcopter. Anyway, the /mavros/local_position/pose topic is published at 5 Hz, so maybe 10 Hz for /mavros/vision_position/pose could be enough.

I don’t understand why I am getting 10 Hz even though my input image is 30 Hz. The noise in the X-Y axes is too high: when I give a setpoint to track, say (0, 0, 1), Z is tracked pretty well, but the X-Y noise makes the drone behave erratically and eventually move out of the area from which the markers are visible.
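One way to narrow down a 30 Hz input turning into a 10 Hz output is to compare the message timestamps of both topics. `rostopic hz <topic>` reports the rate directly; the sketch below does the same arithmetic offline on a list of stamps. The stamp lists are synthetic and the helper names are hypothetical.

```python
def estimate_rate_hz(stamps):
    """Mean message rate from a list of increasing timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    duration = stamps[-1] - stamps[0]
    if duration <= 0:
        raise ValueError("timestamps must be increasing")
    return (len(stamps) - 1) / duration


def max_gap_s(stamps):
    """Largest gap between consecutive messages, useful for spotting dropouts."""
    return max(b - a for a, b in zip(stamps, stamps[1:]))


# Synthetic data: images at 30 Hz, a pose produced for every third image only.
image_stamps = [i / 30.0 for i in range(91)]
pose_stamps = image_stamps[::3]

print(f"image rate: {estimate_rate_hz(image_stamps):.1f} Hz")
print(f"pose rate:  {estimate_rate_hz(pose_stamps):.1f} Hz")
print(f"largest pose gap: {max_gap_s(pose_stamps):.3f} s")
```

If the pose stamps are evenly spaced at a third of the image rate, the detector is probably too slow and skipping frames; irregular large gaps point more towards lost images or failed detections.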

@anbello, how is the image transmission over wifi? Does it lose any packets/images or is it continuous?

And when you are hovering over the markers, do you send any /mavros/setpoint_position/local setpoints, or do you just switch to Loiter mode and let it hold the position?

It is continuous, from what I see.

For now only Loiter. When I have the time I will try with /mavros/setpoint_position/local.

16 cm. I also tried smaller sizes (12 cm), but the estimation is worse. I think it could be better using a board of markers:

http://docs.ros.org/kinetic/api/asr_aruco_marker_recognition/html/classaruco_1_1BoardDetector.html

Thanks for your response. I am using 14.1 cm markers and the estimation is very noisy at heights greater than 60 cm. I am going to try markers with 30 cm sides and see if that works.
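A rough way to see why marker size matters with height is the pinhole relation: the marker's side in pixels is about focal length (in pixels) times side length divided by distance. The sketch below assumes a camera looking straight down at the marker and a focal length of 600 px, which is a made-up value and not a calibration from this thread.

```python
def marker_side_px(side_m, distance_m, focal_px):
    """Approximate side length in pixels of a marker seen head-on (pinhole model)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_px * side_m / distance_m


FOCAL_PX = 600.0  # assumed focal length in pixels, replace with your calibration

for side_m in (0.141, 0.30, 0.45):
    for height_m in (0.6, 1.0, 2.0):
        px = marker_side_px(side_m, height_m, FOCAL_PX)
        print(f"{side_m:.3f} m marker at {height_m:.1f} m: about {px:.0f} px per side")
```

The fewer pixels the marker covers, the more a one-pixel corner error disturbs the estimated pose, so doubling the marker side buys roughly double the usable height for the same pixel footprint.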

@anbello, I tried bigger markers with 45 cm sides and the results look a little better, but there is a considerable toilet bowling effect, as mentioned by @chobitsfan.

Here is a video: https://youtu.be/D0vMxI3H5BY

There is some description in the video but I can write the same thing here too:

The pilot (me) takes off with the remote control in ALT_HOLD mode and climbs to a height where the Aruco markers are well visible and the external navigation starts, then switches to LOITER mode. There is a considerable toilet bowling effect, and things go bad when the wifi transmission of images is momentarily lost, probably due to the poor quality of the wifi module on the SkyViper. When that happens, the pilot switches to ALT_HOLD mode to stabilize the drone and brings it back over the Aruco markers, then switches to LOITER mode again once the wifi connection is re-established.

The topics mavros/vision_pose/pose (RED) and mavros/local_position/pose (GREEN) follow each other closely, more so in the Z direction, but the trends in X and Y are also similar. I believe the controller should now be tuned so that the toilet bowling effect is reduced. I am using Arducopter-3.7-dev. Any help with that will be deeply appreciated. I am adding some graphs here:

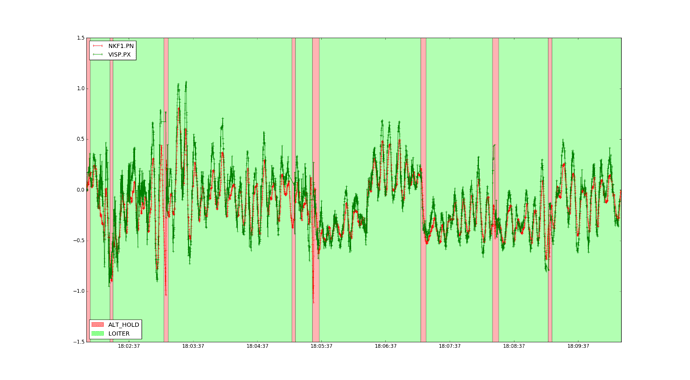

The graph below is for X. There is toilet bowling and also a very noisy estimate from the Aruco markers. I don’t know whether the toilet bowling happens because of the noisy estimate or something else.

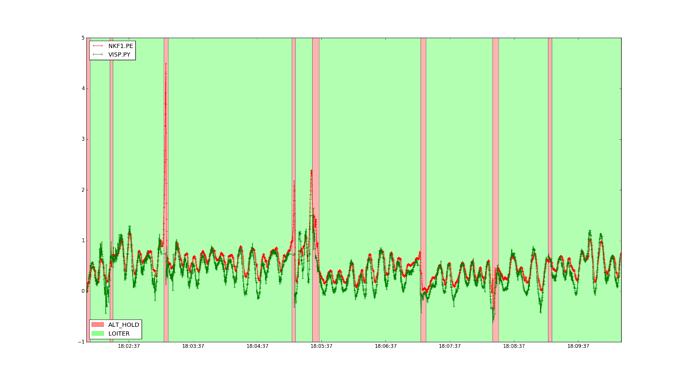

Here is Y and it looks more or less the same as X:

The Z looks OK:

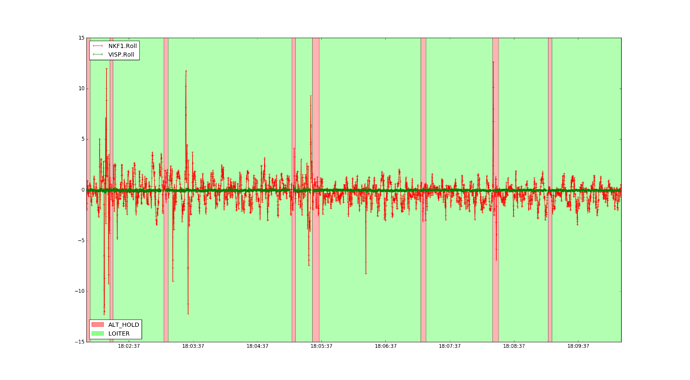

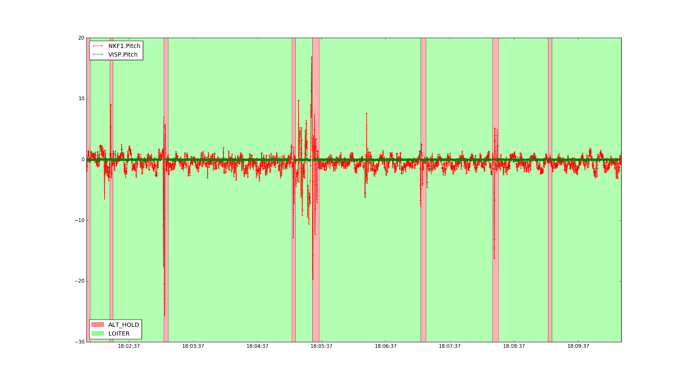

The roll and pitch are noisy but centred around zero (of course).

I don’t understand why the yaw output from the EKF (NKF) and from vision (VISP) are off by 90 degrees. Any insight on that would be much appreciated.

I am also attaching the associated dataflash log: https://drive.google.com/file/d/1eA1Bt_E1m6UZMvTBh7ziBCwM_GESkCPn/view?usp=sharing

Requesting @rmackay9, @chobitsfan and @vkurtz to kindly take a look and give me their invaluable feedback.

I suspect that the instability is due primarily to poor tuning of the inner loop (rate control, attitude control) PID controllers.

I ran into similar problems while using a similar setup with a mocap system for localization. Here is what things looked like on our 3DR Iris using the default parameters. You can see a similar toilet bowling effect in the position (e.g. POS.TPX vs POS.PX). While the attitude tracking (ATT.DesRoll vs ATT.Roll) is okay, there is a lot of noise.

Here is a log from the same system using these tuned parameters. As you can see in the log, the results are much better and the quad felt very stable in the air. Note that the attitude tracking (ATT.DesRoll vs ATT.Roll) is much tighter than in the previous log or the log that you shared.

Unfortunately I don’t have much experience tuning these inner loop controllers, though there are some instructions here. In fact, we have another older custom quadrotor in the lab that I’ve been trying to get this system to work on, but I haven’t been able to get the same good results with. If you are able to tune your system and get good results, I would love to hear how you went about the tuning process!

As far as the strange yaw offset goes, I’m not sure why that’s there, but I have similar results. I don’t think that’s the problem that leads to toilet bowling though, since I would expect much worse behavior if there really was a 90 degree shift.
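One thing that may be worth ruling out, as a guess rather than a diagnosis of these logs: ROS and MAVROS express yaw in ENU (zero facing East, counter-clockwise positive), while ArduPilot logs use NED (zero facing North, clockwise positive). A vehicle facing East therefore reads 0 degrees in one convention and 90 degrees in the other. The sketch below only shows the conversion between the two conventions; the function names are hypothetical.

```python
import math


def wrap_pi(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def enu_yaw_to_ned_yaw(yaw_enu):
    """Convert yaw from ENU (CCW from East) to NED (CW from North)."""
    return wrap_pi(math.pi / 2.0 - yaw_enu)


for deg in (0, 45, 90, 180):
    ned = math.degrees(enu_yaw_to_ned_yaw(math.radians(deg)))
    print(f"ENU yaw {deg:4d} deg -> NED yaw {ned:7.1f} deg")
```

Note that the difference between the two conventions is only a constant 90 degrees if one of the sources also has its rotation direction flipped, so comparing the two logged yaw signals while slowly rotating the vehicle by hand would show whether this is the cause.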

Hello,

I’m new here, so let me quickly introduce myself. I’m interested in vision-based methods for localization (e.g. SLAM). My project is indoor navigation for drones and, as I suppose you know, that is a big challenge!

I’ve tested AprilTag (visual markers similar to Aruco) as the only source for the positioning system, and I had the same problem of heavy noise in those axes.

From what I understand, this method has a major problem: when the camera’s image plane is parallel to the tag (especially when the camera is directly in front of the tag), there is a “singularity” that generates this noise.

The Aruco v3 library has an “estimation” function that tries to reduce this phenomenon: it remembers the last pose and uses it for the next one in order to avoid the noise (if you need it, I can provide the reference). It is better, but the noise is still there (even after adding filters).

As for me, I’m moving to another solution. AprilTag/Aruco are great and cheap methods, but I will not use them as the sole source of positioning in the outer loop.

I hope that you have found a way to fix it.

Hi

With a board of markers instead of a single marker there is much less noise.

http://ardupilot.org/dev/docs/ros-aruco-detection.html
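As a toy illustration of why more markers can help, the sketch below averages several independent noisy position estimates and measures the spread of the result. This is a deliberate simplification: a real board detector solves one pose from all detected corners rather than averaging per-marker poses, and real errors are not independent Gaussians. The noise level and trial count are made-up values.

```python
import random
import statistics


def simulate_spread(num_markers, trials=2000, sigma=0.05, seed=0):
    """Std. dev. of the mean of `num_markers` independent noisy estimates.

    Each marker is modelled as reporting the true position (0.0) plus
    Gaussian noise with standard deviation `sigma` metres (an assumption).
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        per_marker = [rng.gauss(0.0, sigma) for _ in range(num_markers)]
        estimates.append(sum(per_marker) / num_markers)
    return statistics.pstdev(estimates)


for n in (1, 4, 16):
    print(f"{n:2d} marker(s): spread of about {simulate_spread(n) * 100:.2f} cm")
```

Under these idealised assumptions the spread shrinks roughly with the square root of the number of markers, which matches the direction of the improvement reported here, though not necessarily its size.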

Anyway, aruco_gridboard uses the aruco lib from OpenCV 3, which is aruco v2, and from what I read on the aruco site, v3 is better and faster.

Also, UcoSLAM is really interesting: a SLAM library that uses both keypoints and Aruco markers.

I have seen the amazing UcoSLAM project, and it seems to be a nice way to merge Aruco and SLAM (including monocular and stereo), while also improving on ORB-SLAM2.

The only downside is that the UcoSLAM CMake build is not designed for the ARM architecture.

Specifically, the Fbow library, designed by the team of “rmsalinas”, only targets Intel processors (x86).

I’ve been in contact with him and, if I have time, I will try to make it compatible with ARM. And, as the cherry on the cake, see if it can be optimized for GPU (as ORB-SLAM can be with CUDA).

It would be great if you succeed with this port and keep us informed.

Thanks

Yes, I will keep the community updated on the progress.

For now, I’m trying to use SLAM from the ZED stereo camera and use the /local topics from mavros (instead of the /global ones, which need GPS) as the primary source of positioning. Then, if that works, I will try UcoSLAM.

I’m facing some issues, but if I don’t find a solution I will create a separate thread about them, because I think you have more experience than me with this integration.

In any case, I have just joined this forum and I am now following the Gitter channel “https://gitter.im/ArduPilot/VisionProjects”, so I will be more active in this research field. This forum seems to be dynamic, and I do appreciate it!
