Last week the TAP-J team competed in the Japan Innovation Challenge 2017 (a search and rescue competition) and won Day 4’s Stage1! Above is a picture of the dummy we found taken from 60m above. Below is a debrief of our team’s experiences at the event.

The Japan Innovation Challenge (facebook, youtube) is a search and rescue event held each year in late October in Kamishihoro, Hokkaido, Japan. It is somewhat similar to the Australian Outback Challenge in that the main objective is to find a mannequin/dummy in a large search area using UAVs. It differs though in a number of ways:

- the event is 5 days long and all teams fly at the same time!

- there are three stages:

  - find the dummy hidden in a 3km diameter area within 1hr (500,000 yen prize per day, $4k USD)

  - deliver a 3kg package to between 3m ~ 8m from the dummy (5,000,000 yen prize, $43k USD)

  - recover the dummy (20,000,000 yen prize, $175k USD)

- the vehicles do not need to be autonomous

- no restrictions on the number of vehicles or cost

This year there were 14 teams in total. We used ArduPilot of course; all other teams used DJI. Our equipment included:

- five eLAB 470 quadcopters (for searching) and one eLAB 695 hexacopter (for delivery). All copters had 18" propellers, 350W batteries and LoRa telemetry. The hexacopter also had a 1W analog video system.

- Pixhawk Cube 2.1 autopilots running ArduPilot Copter-3.5.3

- Raspberry Pi3 companion computers with 8MP ArduCam cameras (M12 and CS lens versions) mounted on Tarot gimbals. Images were stored to 16GB thumbdrives.

- two Windows PCs running Mission Planner for mission planning, simulation and in-flight monitoring

- three Ubuntu PCs for running CUAV for post-flight image analysis to find the dummy

How we did it

Each night we planned and tested the missions we wanted to fly the next day.

When the event started at 10am, we launched our quads one after the other on autonomous, terrain following missions.

Missions were all 10min ~ 15min so as the copters returned, one of us would grab the copter, take it back to the tent, copy the images and tlog from the USB thumb drive to our PCs and begin the search using CUAV.

When the last of the copters returned, the remaining team members joined in looking through the images.

When we were able to find the dummy we emailed its location (provided by CUAV) to the organisers.

What went well

Copter’s terrain following using only SRTM data (no lidar) worked much better than expected. It’s hard to be sure of the exact accuracy because there was no lidar on the vehicles but from the images we captured, it appears the altitude-above-terrain was accurate to better than 10m.
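To make the idea concrete, here is a minimal sketch of how a target altitude can be derived from gridded elevation data such as SRTM: interpolate the terrain height under the vehicle, then add the desired height above terrain. This is an illustration only, not ArduPilot's actual implementation. The grid layout, the function names and the 60m height are all assumptions made for the example.

```python
import math

def terrain_height(grid, lat0, lon0, step_deg, lat, lon):
    """Bilinearly interpolate terrain elevation (m AMSL) at (lat, lon).

    Assumed (hypothetical) layout: grid[r][c] is the elevation at
    latitude lat0 + r*step_deg and longitude lon0 + c*step_deg.
    """
    r = (lat - lat0) / step_deg
    c = (lon - lon0) / step_deg
    r0, c0 = int(math.floor(r)), int(math.floor(c))
    # The point must sit inside a cell that has all four corners.
    if not (0 <= r0 < len(grid) - 1 and 0 <= c0 < len(grid[0]) - 1):
        raise ValueError("point outside terrain grid")
    fr, fc = r - r0, c - c0
    # Interpolate along longitude on both rows, then along latitude.
    south = grid[r0][c0] * (1 - fc) + grid[r0][c0 + 1] * fc
    north = grid[r0 + 1][c0] * (1 - fc) + grid[r0 + 1][c0 + 1] * fc
    return south * (1 - fr) + north * fr

def target_altitude_amsl(grid, lat0, lon0, step_deg, lat, lon, height_m):
    """Altitude (m AMSL) that keeps the vehicle height_m above the terrain."""
    return terrain_height(grid, lat0, lon0, step_deg, lat, lon) + height_m

if __name__ == "__main__":
    # Tiny made-up 2x2 grid with 1-degree spacing, for illustration only.
    demo = [[100.0, 110.0], [120.0, 130.0]]
    print(target_altitude_amsl(demo, 0.0, 0.0, 1.0, 0.5, 0.5, 60.0))
```

Any error in the elevation data carries straight through to the height above terrain, which is why the accuracy could only be estimated from the images.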

Mission Planner’s built-in simulator was extremely useful for testing our missions before we actually flew. It gave us a lot of confidence that the missions would go smoothly and showed us how long they would take.

APSync/APWeb on the RPI3, including its wifi telemetry, live video (used to focus the cameras) and web browser based file manager, worked great.

The team worked well together: very organised, efficient and fun!

What didn’t go so well

Our major problems came from the UCTronics cameras, which simply stopped working when their temperature dropped below 5C (some stopped working at even higher temperatures). Our testing had mostly been performed in warmer environments and it took us a couple of days to identify the most reliable of the cameras and methods to keep them warm (including heating them before take-off and wrapping them in cloth).

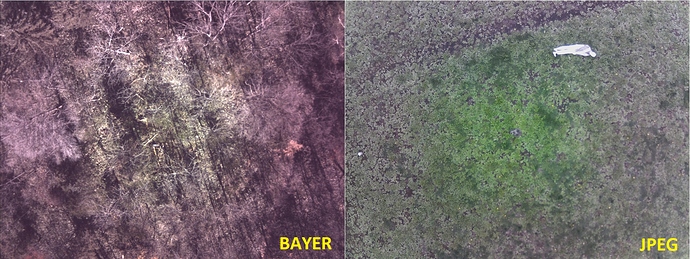

The cameras also suffered from colour distortion (they cannot see green around the edges), especially when using the raw Bayer images. Below are two images: the left is from the competition using Bayer, the right is a normal JPEG taken before the competition. The colour problem is visible in both.

The images also had some jello caused by the rolling shutter, although it is possible that better gimbal tuning could resolve this.

Competing with the DJI teams

It was interesting and challenging competing against the DJI based teams because they were so different. They had a short-term advantage in that their copters had beautiful gimbals, cameras and high bandwidth telemetry links meaning they could very quickly get their vehicles in the air and begin searching in real-time (using FPV) while we instead had to first fly our copters, then download the data and begin analysis.

Our advantage was telemetry range: LoRa provides 4km+ versus the DJI 1.4km, albeit with much lower bandwidth. Because we were flying autonomous, terrain-following missions, our vehicles could search much larger areas much further away. Our team also had the most vehicles: we flew 4 copters at once while most other teams flew only 1.

We did not win the delivery competition although we did successfully drop the 3kg package quite near the dummy (in fact, one time we dropped it right on the dummy). Sadly it was never exactly within the 3m ~ 8m range required. A significant number of the other teams, flying manually, did manage the delivery.
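The 3m ~ 8m rule amounts to a ring around the dummy, so landing the package right on it does not count. A rough sketch of the check, using the haversine great-circle distance, is below. How the organisers actually measured the distance is not known to us; the inclusive bounds, the function names and the coordinates used here are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, good enough at this scale

def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance in metres between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def drop_is_valid(dummy, drop, min_m=3.0, max_m=8.0):
    """True if the drop point lies in the ring min_m..max_m around the dummy.

    dummy and drop are (lat, lon) tuples; inclusive bounds are an assumption.
    """
    d = distance_m(dummy[0], dummy[1], drop[0], drop[1])
    return min_m <= d <= max_m

if __name__ == "__main__":
    dummy = (43.0, 143.0)  # made-up coordinates
    five_m_north = (43.0 + math.degrees(5.0 / EARTH_RADIUS_M), 143.0)
    print(drop_is_valid(dummy, dummy))         # direct hit: too close
    print(drop_is_valid(dummy, five_m_north))  # inside the ring
```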

Things to do for next year:

- we hope to find a better quality camera (i.e. more reliable, less distortion)

- improve the camera integration with the autopilot including recording the location and attitude of the vehicle in the image using EXIF.

- run CUAV in real-time. 3G/LTE should be legally usable in Japan by this time next year.

- ease-of-use improvements to CUAV including showing on the map where an image was taken when using the “Image Viewer”.
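As a rough illustration of the camera integration item, the position for each image can be estimated by interpolating between the two telemetry samples either side of the image's timestamp. The sketch below shows only that interpolation step. It is not how CUAV or ArduPilot does it, it does not write EXIF, and the sample format and function name are hypothetical.

```python
from bisect import bisect_left

def interpolate_position(samples, t):
    """Estimate (lat, lon, alt_m) at time t by linear interpolation.

    samples is a list of (time_s, lat, lon, alt_m) tuples sorted by time,
    a hypothetical format standing in for positions read from a tlog.
    """
    if not samples or t < samples[0][0] or t > samples[-1][0]:
        raise ValueError("timestamp outside telemetry range")
    times = [s[0] for s in samples]
    i = bisect_left(times, t)
    if times[i] == t:
        # Exact match: no interpolation needed.
        return tuple(samples[i][1:])
    t0, *p0 = samples[i - 1]
    t1, *p1 = samples[i]
    f = (t - t0) / (t1 - t0)
    return tuple(a + (b - a) * f for a, b in zip(p0, p1))

if __name__ == "__main__":
    # Made-up telemetry samples for illustration.
    log = [(0.0, 43.0, 143.0, 60.0), (2.0, 43.002, 143.004, 64.0)]
    print(interpolate_position(log, 0.5))
```

Linear interpolation is reasonable for position at typical telemetry rates. Attitude changes much faster, so it would need a higher-rate source or a different approach.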

The Team:

Core members of the team who went to Hokkaido for the event were Kawamura-san, Kitaoka-san, Komiya-san, Matsuura-san and Matsumoto-san, but others helped as well, including Professor Kaizu (winch), Nohara-san, Yamaguchi-san and Murata-san.

Thanks To:

Peter Barker, Tridge and Michael Oborne for some last minute changes to CUAV and Mission Planner to make the competition go more smoothly.

Drone Japan (which runs an ArduPilot software training school)

eLAB (my main sponsor) which provided all the frames, batteries, telemetry links, etc. Thanks!!

Extra Info:

- the missions we used on day 4

- zip containing the tlog and images captured by the winning copter

- dummy locations for each day (stored as a rally point file)

- search area (polygon fence file)