I’ll have to out myself as the “vehicle engineer” in question.

I’ll definitely dial it back to DShot600 or so. I didn’t really have time to dive into the intricacies of DShot at the time and just selected the one T-Motor referenced.

Mihai can attest that I also nag them regularly to let me update some of these drones, but once the drones are “in the field” I need to make sure I’m available to debug any issues that may come from an update. Rest assured, any drones that leave my hands after being worked on are always going to be on the latest or near-latest stable firmware.

We have been trying to find someone who can take the reins as a “field engineer”, so to speak, for these vehicles. That’s difficult at a university, since most of the work needs to be done by students and by nature students are temporary. I have to divide my time between designing / building these drones and another project I work on. Because of that I’m usually not in the field on regular flying days.

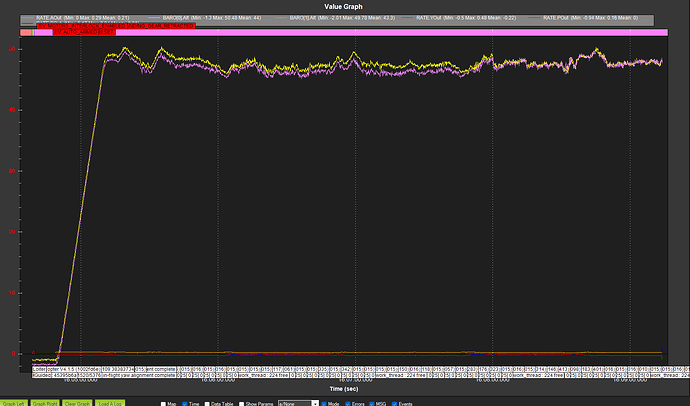

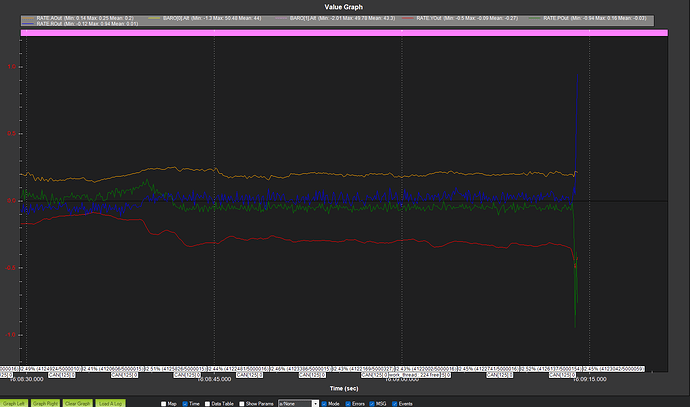

I got the carcass of said failed drone and did some testing…

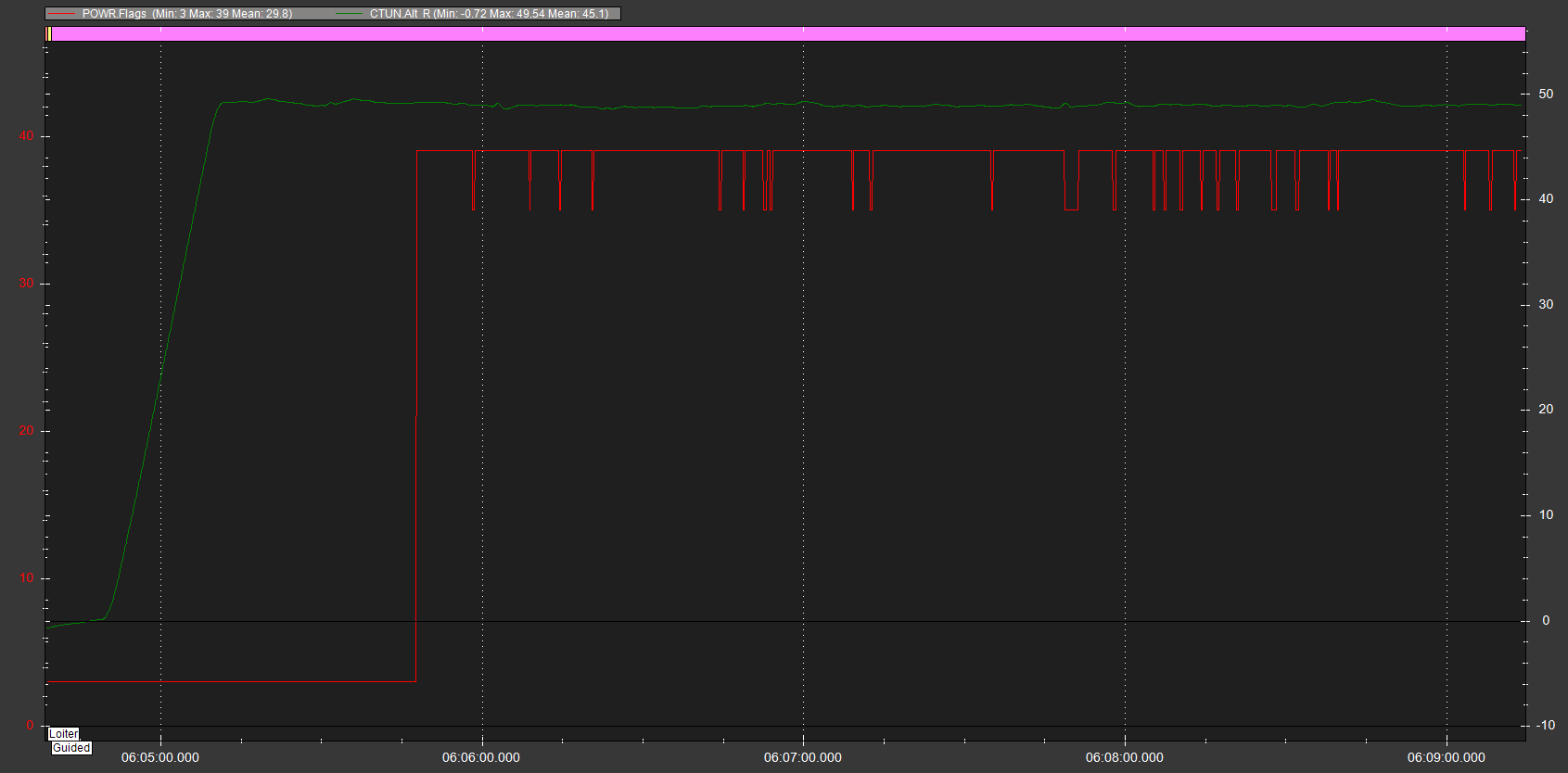

Everything points to a very sudden loss of power or some sort of transient that caused the cube to reboot.

I had a suspicion there was an ESC failure, partially inspired by a sudden increase in current consumption at one of the last measurement points and a small corresponding voltage sag. Unfortunately, when I put what remained of the drone back together on the workbench, the ESC had no issues spinning the motors (at least with no propellers attached), and there’s no visible damage on it or any other electronic components.
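For anyone who wants to look for the same signature in their own logs, here is a rough sketch of the check I mean: flag any sample where the current jumps well above the recent average while the voltage dips at the same time. The function name, thresholds, and sample values are all made up for illustration. They are not taken from this drone’s log.

```python
def find_current_spikes(samples, window=5, current_ratio=1.5, min_sag_v=0.2):
    """Flag samples that look like a current spike with a matching voltage sag.

    samples: list of (time_s, volts, amps) tuples in time order.
    A sample is flagged when its current exceeds `current_ratio` times the mean
    current of the previous `window` samples AND its voltage is at least
    `min_sag_v` below the mean voltage of those same samples.
    All thresholds are illustrative assumptions.
    """
    flagged = []
    for i in range(window, len(samples)):
        prev = samples[i - window:i]
        mean_v = sum(s[1] for s in prev) / window
        mean_a = sum(s[2] for s in prev) / window
        t, v, a = samples[i]
        if a > current_ratio * mean_a and (mean_v - v) >= min_sag_v:
            flagged.append((t, v, a))
    return flagged


# Made-up battery samples: steady hover, then a jump in the last point.
samples = [
    (0.0, 23.9, 20.0),
    (0.1, 23.9, 20.5),
    (0.2, 23.8, 19.8),
    (0.3, 23.9, 20.2),
    (0.4, 23.8, 20.1),
    (0.5, 23.4, 34.0),
]
spikes = find_current_spikes(samples)
print(spikes)
```

With a single suspicious point right before the log ends, a check like this can only say “something changed”, not what caused it.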

The ESC is a T-Motor F55A Pro II.

And as he already mentioned the cube VCC looked fine up until the last moments.

It seems very likely something caused the cube to reboot in flight, and it was probably already booting back up by the time it hit the ground or shortly after. They would have almost certainly missed the watchdog message anyway.

One suspicion I have: the payload has a computer on board connected via USB. I can’t think of any way to verify this, but I suspect that may have contributed to a power issue. Let me explain:

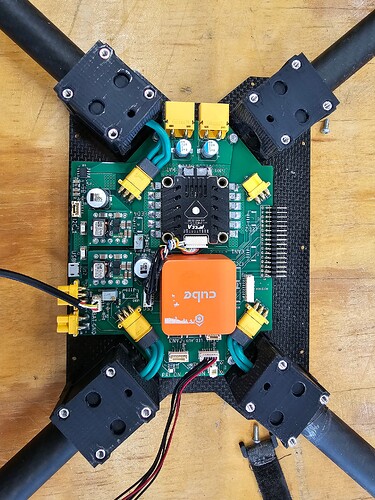

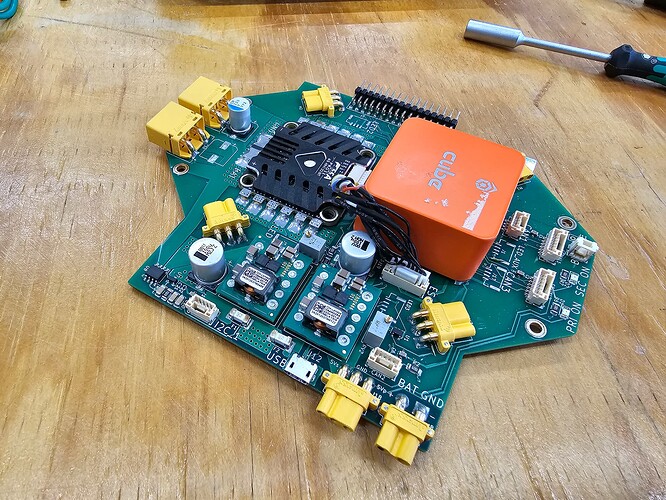

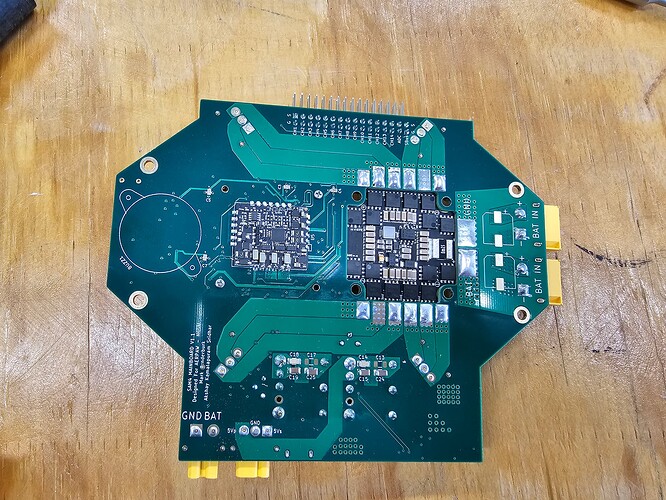

The custom carrier board we have for the cube in this drone has two high quality 8A TDK buck converter modules. These feed into the power one and power two inputs on the cube power management unit. They also power many other things on the drone but are never being used anywhere close to their limits.

There are potentiometers on the board for adjusting the output voltage of these converter modules. In the haste of building a bunch of these drones last year, the voltage got left at what turned out to be the default of about 4.95V…

Now, under normal circumstances this is fine for the cube, even though they recommend 5.3V. Why do they recommend 5.3V? Because if you have USB connected, their integrated power selection circuit will always choose the higher voltage.

I know from experience a typical USB port will not power the cube and connected peripherals on this drone without some very strange behavior and often random rebooting.

So my theory is that at some point the power selection circuit used the USB power from the companion computer rather than either of the onboard 5V converters, the USB port was not able to power it successfully, and a reboot happened.

Now, obviously the PMU should have gone back to using the other voltage rails when the USB power sagged, and I can’t say why it may not have done that. But if it was working right on the edge, it’s not hard for me to imagine there was some back and forth, with hysteresis in how quickly it was willing to switch back. Hopefully what I’m trying to say makes sense…
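To make the theory a bit more concrete, here is a toy model of a “highest voltage wins” selector with a small switching margin. This is purely my guess at the kind of behavior involved, not how the cube’s PMU actually works. The rail names, the 0.05V margin, the 4.5V brownout threshold, and the sagging USB voltages are all invented for illustration.

```python
def select_source(rails, current, switch_margin_v=0.05, brownout_v=4.5):
    """Pick a power rail in a hypothetical 'highest voltage wins' selector.

    rails: dict of rail name -> measured volts.
    current: name of the rail selected on the previous step, or None.
    The selector only leaves the current rail when another rail beats it by
    at least `switch_margin_v` (hysteresis), or when the current rail drops
    below `brownout_v`. Both thresholds are assumptions, not datasheet values.
    """
    valid = {name: v for name, v in rails.items() if v >= brownout_v}
    if not valid:
        return None  # nothing usable: the flight controller would brown out
    best = max(valid, key=valid.get)
    if current in valid and valid[best] - valid[current] < switch_margin_v:
        return current  # not enough of a difference to bother switching
    return best


def run(onboard_v, usb_trace):
    """Step the selector through a USB voltage trace with a fixed onboard rail."""
    current, history = None, []
    for usb_v in usb_trace:
        current = select_source({"onboard": onboard_v, "usb": usb_v}, current)
        history.append(current)
    return history


# Invented USB trace: starts slightly above 4.95V, then sags under load.
usb_trace = [5.05, 4.98, 4.93, 4.60, 4.40]
low_setting = run(4.95, usb_trace)   # onboard rail left at about 4.95V
high_setting = run(5.30, usb_trace)  # onboard rail set to the recommended 5.3V
print(low_setting)
print(high_setting)
```

In this model the 4.95V setting lets USB win at first and the selector hangs on to it while it sags, whereas at 5.3V the onboard rail is chosen the whole time. Whether the real circuit has anything like this margin is exactly what I can’t tell without a schematic.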

Hard to say without doing a lot of testing and/or having a schematic for the PMU.

Here are some pictures of the carrier board for reference. If you spot the missing capacitor, don’t worry about that; I knocked it off when disassembling the drone.