This is a demonstration of MAVProxy’s new Chat module, which allows an OpenAI ChatGPT-4 Assistant to control an ArduPilot vehicle using MAVLink.

The ArduPilot / MAVProxy side of this is all open source and the code can be found here. The module is written in Python and uses OpenAI’s Python Assistants API.

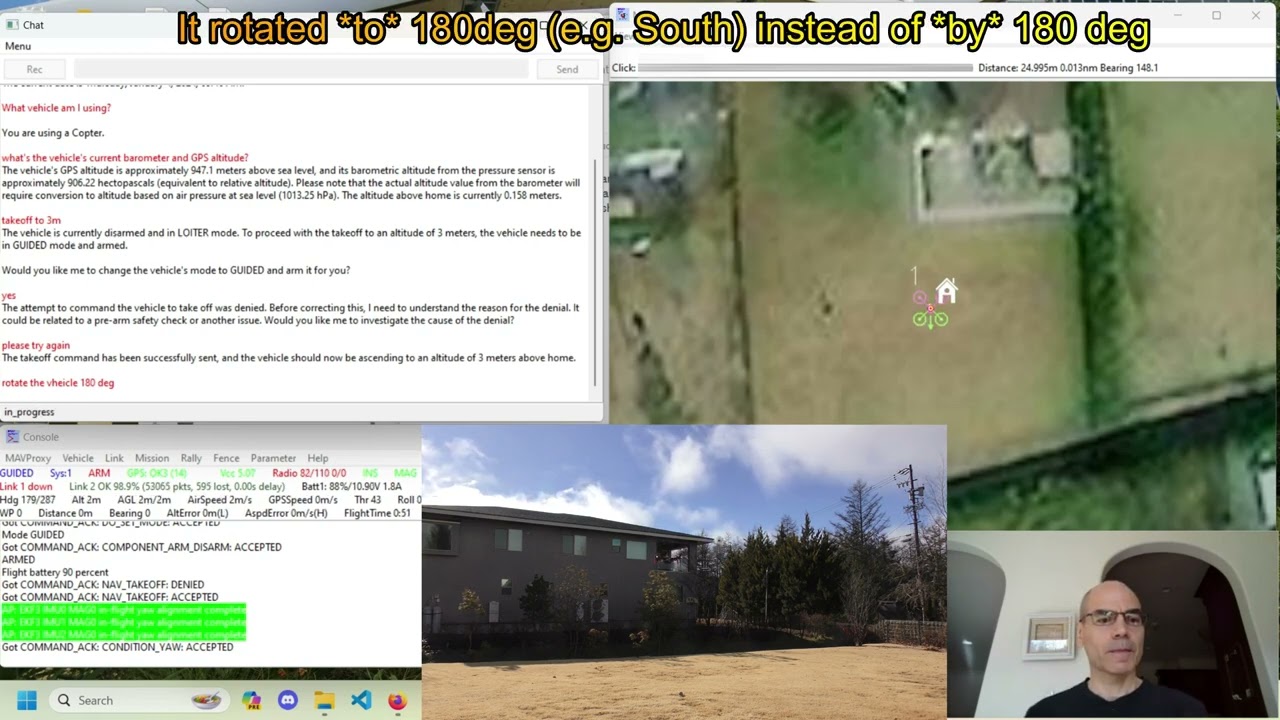

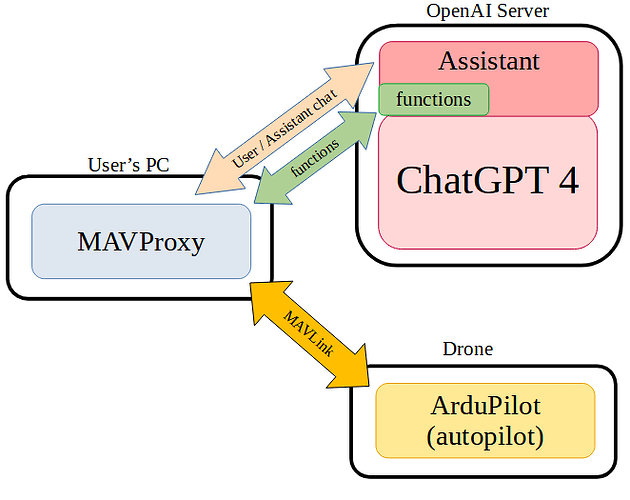

The way it works is that we’ve created a custom “Assistant” which is a ChatGPT-4 LLM (large language model) running on the OpenAI servers. The MAVProxy chat window has a record button and a text input field which allow the user to communicate with the Assistant and ask questions or give it commands (e.g. “takeoff to 3m”). The Assistant has been given instructions on how to respond to user queries and requests.

The Assistant has also been extended with a number of custom functions. The full list of functions can be seen in this directory (see the .json files only) and includes general-purpose functions like get_current_datetime and also ArduPilot-specific functions like get_all_parameters and send_mavlink_command_int. In most cases, after the user asks a question or gives a command, the Assistant calls one of these functions. The MAVProxy Chat module is responsible for replying and does this by providing the latest MAVLink data received from the vehicle or by sending a MAVLink command.
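To make that round trip a little more concrete, here is a minimal sketch of how a function call from the Assistant might be routed to a Python handler and answered with a JSON string. It is not the module’s actual code: the `HANDLERS` registry, `handle_function_call`, the reply layout and the example definition are all assumptions for illustration. Only the function name get_current_datetime comes from the list above, and the definition simply follows the general shape of an OpenAI function tool, so the real .json files may differ.

```python
import json
from datetime import datetime

# Illustrative definition in the general shape of an OpenAI function tool.
# The real .json files in the MAVProxy repository may look different.
GET_CURRENT_DATETIME_DEFINITION = {
    "type": "function",
    "function": {
        "name": "get_current_datetime",
        "description": "Get the current date and time",
        "parameters": {"type": "object", "properties": {}, "required": []},
    },
}


def get_current_datetime(arguments):
    """Return the local date and time as a human-readable string."""
    return datetime.now().strftime("%A, %B %d, %Y %I:%M:%S %p")


# Hypothetical registry mapping function names to Python handlers.
HANDLERS = {"get_current_datetime": get_current_datetime}


def handle_function_call(name, arguments_json):
    """Decode the arguments, run the matching handler, reply as a JSON string."""
    handler = HANDLERS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        arguments = json.loads(arguments_json) if arguments_json else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "arguments were not valid JSON"})
    return json.dumps({"result": handler(arguments)})
```

A vehicle-specific handler such as get_all_parameters would be registered the same way, but would build its reply from MAVLink data rather than from the system clock.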

The module is still quite new so its functionality is limited, but here are some things I’ve noticed during its development:

- The Assistant is somewhat unreliable and unpredictable. For example, in the video you’ll see it initially fails to make the vehicle take off but succeeds on the second attempt. Adding functions often helps its reliability. For example, the get_location_plus_offset function improved its reliability in responding to requests like “Fly North 100m” because it no longer had to do the conversion from meters to lat,lon itself.

- The OpenAI API is a bit laggy. It can sometimes take several seconds to respond to user input. Hopefully this will improve in the future.

- When a new function is added, the Assistant often makes immediate use of it even if we don’t give it specific instructions on when it could be useful. A good example of this was after we added the wakeup timer functions, which allow the Assistant to set an alarm/reminder for itself to do something in the future. Once these were added, the Assistant immediately started using them whenever it was given a series of commands (e.g. “takeoff to 3m, fly North 100m, then RTL”).

- The cost of using the Assistant is reasonable for a single user (I averaged $6 USD per day during the development of this module) but would be too high for AP to simply absorb for all our users, so we will need to find a way to pass on the costs. Alternatively, scripts have been provided so users or organisations can set up the Assistant using their own OpenAI accounts.
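As a rough illustration of the arithmetic that a helper like get_location_plus_offset takes off the Assistant’s hands for a request such as “Fly North 100m”, here is a small sketch of a meters to lat,lon conversion. The function name `location_plus_offset`, its signature and the flat-earth approximation are my assumptions, and the real MAVProxy implementation may well differ.

```python
import math

# WGS-84 equatorial radius in meters. Treating the Earth as a sphere of this
# radius is an approximation that is fine for offsets of a few kilometers.
EARTH_RADIUS_M = 6378137.0


def location_plus_offset(lat_deg, lon_deg, north_m, east_m):
    """Return (lat, lon) in degrees after moving north_m and east_m meters.

    Hypothetical helper for illustration only. It is not valid at the poles,
    where the longitude scale factor cos(latitude) goes to zero.
    """
    # A northward distance maps directly to a change in latitude.
    dlat_deg = math.degrees(north_m / EARTH_RADIUS_M)
    # An eastward distance covers more degrees of longitude away from the equator.
    dlon_deg = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    )
    return lat_deg + dlat_deg, lon_deg + dlon_deg


# "Fly North 100m" from an example position: latitude grows by roughly
# 0.0009 degrees and longitude is unchanged.
target_lat, target_lon = location_plus_offset(-35.3632, 149.1652, 100.0, 0.0)
```

Small as it is, this is exactly the kind of numeric step where an LLM tends to slip, which is probably why moving it into a function made the Assistant more reliable.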

There’s still a lot more work to do and hopefully we can make a similar feature available in the other GCSs (MP, QGC) in the future.

If you’re interested in getting involved we have an “ai” channel in Discord and here is the overall issues and enhancements list.

Perhaps the four biggest enhancements I’m looking forward to are:

- Improving the Rec button so that it can constantly listen (currently it simply records for 5 seconds)

- Moving to AP’s Lua interface instead of MAVLink. This will provide even better control of the vehicle.

- Sending the drone’s camera gimbal images to the Assistant

- Moving the chat module to run on the drone itself