These are the first few test flights of ArduPilot (Copter-3.6-dev) paired with LAB enRoute’s open source (AGPLv3) vision system called “OpenKAI”, which uses the ZED stereo camera to let the vehicle control its position without requiring a GPS.

More testing is required, of course, but it seems to hold position quite well, especially when there are objects within 5 m to 10 m in front of the vehicle. Even when objects are further away it works fine, although we see movement of a few meters, especially when the vehicle is yawing. We may be able to improve this with a better camera calibration and other software improvements.

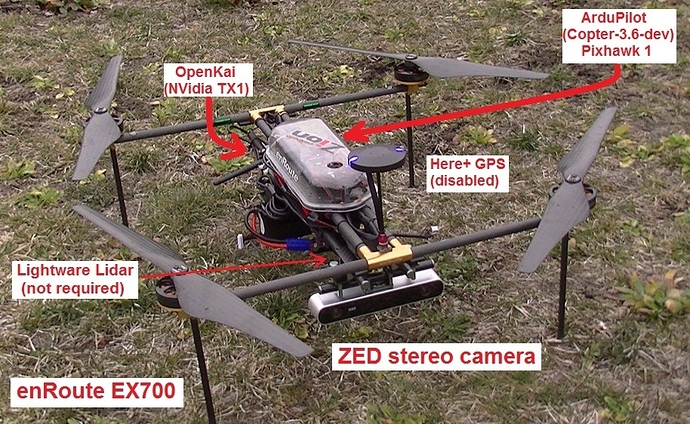

As the picture shows, I’m using my regular enRoute EX700 development copter, which has a Stereolabs ZED camera mounted facing forward. On the back of the vehicle is an NVIDIA TX1 mounted on an Auvidea J120 board and connected to a Pixhawk1 as shown on our developer wiki. There is a Here+ RTK GPS on the vehicle but it’s disabled (see the blue LEDs); it’s only there because I wanted to continue to use the external compass. There’s also an (optional) downward facing LightWare lidar.

This is a bit of a breakthrough because we are taking the 3D visual odometry information from the ZED camera at 20 Hz and pushing it into ArduPilot’s EKF3, which consumes it much like it consumes optical flow data. The advantages over optical flow are that the position estimate is 3-dimensional instead of just 2-dimensional and, because the camera is stereo, we do not need a range finder. It’s also possible to use the stereo camera’s depth information for object avoidance at the same time, although during these early tests we ran into performance problems on the TX1 when running visual odometry and object avoidance together, so we may need a TX2 for that :-).
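To make the range finder point a little more concrete, here is a minimal sketch of the textbook geometry involved. It is not OpenKAI or ZED SDK code: the function names are made up for illustration, and the focal length and baseline are placeholder numbers rather than real calibration values. A stereo pair gets metric depth directly from the known distance between its two lenses, while optical flow only measures an angular rate and needs a separately measured range to turn that into a speed.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Metric depth of a point seen by both cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def flow_velocity_mps(flow_rad_per_s, range_m):
    """Optical flow is an angular rate; a measured range is needed to scale it."""
    return flow_rad_per_s * range_m


# Illustrative numbers only (assumptions, not ZED calibration values).
FOCAL_PX = 700.0
BASELINE_M = 0.12

depth = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px=8.4)
speed = flow_velocity_mps(flow_rad_per_s=0.5, range_m=2.0)
print(f"stereo depth: {depth:.1f} m, flow-derived speed: {speed:.1f} m/s")
```

Note that `range_m` has to come from somewhere else (typically a lidar or sonar) in the optical flow case, whereas the stereo depth needs nothing beyond the camera itself.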

Because the camera’s data is integrated into the EKF, all the existing flight modes work just as they do now. This can also be used together with a GPS, which we hope will improve position reliability and allow flying seamlessly from a GPS environment to a non-GPS environment. Imagine autonomously flying a vehicle up over some hills to a railway tunnel, then right through it and out the other side.

In this case the camera was pointed forward but once we push this to “master” it will support at least 6 orientations (up, down, left, right, forward, back).
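Supporting several mounting orientations mostly comes down to rotating each camera-frame movement into the vehicle's body frame before it is used. The sketch below shows one way that could look. The axis conventions, the `CAMERA_TO_BODY` table and the `camera_to_body` helper are all assumptions made for this example; the code that lands in master may well handle this differently.

```python
# Rotation matrices taking a camera-frame vector into the body frame.
# Assumed conventions (not taken from ArduPilot): body x forward, y right,
# z down; camera x along the optical axis, y image-right, z image-down.
CAMERA_TO_BODY = {
    "forward": ((1, 0, 0), (0, 1, 0), (0, 0, 1)),
    "back":    ((-1, 0, 0), (0, -1, 0), (0, 0, 1)),
    "right":   ((0, -1, 0), (1, 0, 0), (0, 0, 1)),
    "left":    ((0, 1, 0), (-1, 0, 0), (0, 0, 1)),
    "up":      ((0, 0, 1), (0, 1, 0), (-1, 0, 0)),
    "down":    ((0, 0, -1), (0, 1, 0), (1, 0, 0)),
}


def camera_to_body(delta_cam, orientation):
    """Rotate a camera-frame (x, y, z) movement into the body frame."""
    rows = CAMERA_TO_BODY[orientation]
    return tuple(sum(r * v for r, v in zip(row, delta_cam)) for row in rows)


# A 1 m movement along the optical axis of a downward facing camera
# is a 1 m movement along the body's down axis.
print(camera_to_body((1.0, 0.0, 0.0), "down"))
```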

Note that this approach is a little different than SLAM because we don’t create a 3D map to determine the vehicle’s absolute position in the environment - we are instead integrating position changes. This is slightly simpler than a full SLAM solution but in any case it allows flying indoors.
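As a rough illustration of what "integrating position changes" means, the sketch below accumulates a series of small body-frame movements and heading changes into a position estimate. It is a deliberately simplified 2D-heading dead-reckoning example, not the EKF3 implementation (which fuses the deltas with IMU and other sensor data), and `integrate_deltas` is a made-up name.

```python
import math


def integrate_deltas(deltas, start=(0.0, 0.0, 0.0), start_yaw=0.0):
    """Accumulate body-frame (dx, dy, dz, dyaw) steps into a world-frame position.

    dx is forward, dy is right, dz is down (metres); dyaw is in radians.
    """
    x, y, z = start
    yaw = start_yaw
    for dx, dy, dz, dyaw in deltas:
        # Rotate the body-frame step into the world frame using the current heading.
        x += dx * math.cos(yaw) - dy * math.sin(yaw)
        y += dx * math.sin(yaw) + dy * math.cos(yaw)
        z += dz
        yaw += dyaw
    return (x, y, z), yaw


# Move 1 m forward, turn 90 degrees, then move 1 m forward again.
steps = [(1.0, 0.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0, 0.0)]
position, heading = integrate_deltas(steps)
print(position, heading)
```

Because there is no map to correct against, any small error in an individual delta stays in the running total, so the estimate can drift over time.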

I plan to make a TX1 image available so people can get going quickly. In the meantime, I used APSync as the base image and then installed and built OpenKAI as described here.

The code is currently here in my ardupilot and mavlink repos but will go into master once it has been peer reviewed. It should be released officially as part of Copter-3.6.

Thanks very much to Kai Yan and Paul Riseborough (EKF) for putting this together (I helped integrate).